05 Classification And Prediction

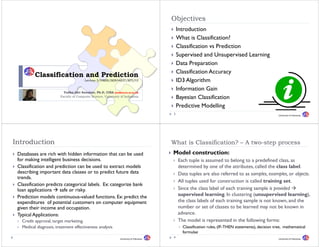

- 1. Classification and Prediction: Lecture 5/DMBI/IKI83403T/MTI/UI, Yudho Giri Sucahyo, Ph.D, CISA (yudho@cs.ui.ac.id), Faculty of Computer Science, University of Indonesia
  - Objectives: Introduction; What is Classification?; Classification vs Prediction; Supervised and Unsupervised Learning; Data Preparation; Classification Accuracy; ID3 Algorithm; Information Gain; Bayesian Classification; Predictive Modelling.
  - Introduction
    - Databases are rich with hidden information that can be used for making intelligent business decisions.
    - Classification and prediction can be used to extract models describing important data classes or to predict future data trends.
    - Classification predicts categorical labels. Ex: categorize bank loan applications as safe or risky.
    - Prediction models continuous-valued functions. Ex: predict the expenditures of potential customers on computer equipment given their income and occupation.
    - Typical applications: credit approval, target marketing, medical diagnosis, treatment effectiveness analysis.
  - What is Classification? A two-step process
    - Model construction:
      - Each tuple is assumed to belong to a predefined class, as determined by one of the attributes, called the class label.
      - Data tuples are also referred to as samples, examples, or objects. The tuples used for model construction are called the training set.
      - Since the class label of each training sample is provided, this is supervised learning. In clustering (unsupervised learning), the class label of each training sample is not known, and the number or set of classes to be learned may not be known in advance.
      - The model is represented as classification rules (IF-THEN statements), decision trees, or mathematical formulae.

- 2. What is Classification? A two-step process (2)
  - Model usage: the model is used for classifying future or unknown objects.
    - First, the predictive accuracy of the model is estimated. The known label of each test sample is compared with the class the model assigns to it. The accuracy rate is the percentage of test set samples that are correctly classified by the model.
    - The test set must be independent of the training set; otherwise over-fitting will occur (the model may have incorporated particular anomalies of the training data that are not present in the overall sample population).
    - If the accuracy of the model is considered acceptable, the model can be used to classify future objects for which the class label is not known (unknown, previously unseen data).
  - Classification Process (1): training data → classification algorithm → classifier (model).

    | NAME | RANK | YEARS | TENURED |
    |---|---|---|---|
    | Mike | Assistant Prof | 3 | no |
    | Mary | Assistant Prof | 7 | yes |
    | Bill | Professor | 2 | yes |
    | Jim | Associate Prof | 7 | yes |
    | Dave | Assistant Prof | 6 | no |
    | Anne | Associate Prof | 3 | no |

    Learned model: IF rank = 'professor' OR years > 6 THEN tenured = 'yes'
  - Classification Process (2): the classifier is checked against testing data and then applied to unseen data, e.g. (Jeff, Professor, 4): Tenured?

    | NAME | RANK | YEARS | TENURED |
    |---|---|---|---|
    | Tom | Assistant Prof | 2 | no |
    | Merlisa | Associate Prof | 7 | no |
    | George | Professor | 5 | yes |
    | Joseph | Assistant Prof | 7 | yes |

  - What is Prediction?
    - Prediction is similar to classification: first, construct a model; second, use the model to predict future or unknown values.
    - The major method for prediction is regression: linear and multiple regression, and non-linear regression.
    - Prediction is different from classification: classification predicts a categorical class label, whereas prediction predicts a continuous value.
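To make the two steps concrete, the following Python sketch applies the learned rule to the training and test tuples from the tables above and computes the accuracy rate. Beyond what the slides state, it assumes that any tuple not matching the IF condition is labeled tenured = 'no', and it compares rank strings exactly as written in the tables.

```python
# (name, rank, years, tenured) tuples copied from the slides.
TRAINING = [
    ("Mike", "Assistant Prof", 3, "no"),
    ("Mary", "Assistant Prof", 7, "yes"),
    ("Bill", "Professor", 2, "yes"),
    ("Jim", "Associate Prof", 7, "yes"),
    ("Dave", "Assistant Prof", 6, "no"),
    ("Anne", "Associate Prof", 3, "no"),
]
TEST = [
    ("Tom", "Assistant Prof", 2, "no"),
    ("Merlisa", "Associate Prof", 7, "no"),
    ("George", "Professor", 5, "yes"),
    ("Joseph", "Assistant Prof", 7, "yes"),
]


def classify(rank: str, years: int) -> str:
    """Learned model: IF rank = 'professor' OR years > 6 THEN tenured = 'yes'.

    Assumption: every other tuple is labeled 'no'.
    """
    return "yes" if rank == "Professor" or years > 6 else "no"


def accuracy(samples) -> float:
    """Fraction of samples whose known label equals the model's prediction."""
    correct = sum(classify(rank, years) == label for _, rank, years, label in samples)
    return correct / len(samples)


print(accuracy(TRAINING))        # 1.0
print(accuracy(TEST))            # 0.75
print(classify("Professor", 4))  # unseen tuple (Jeff, Professor, 4) -> yes
```

Merlisa (Associate Prof, 7 years) is the only test tuple the rule misclassifies, so the estimated accuracy rate is 75%; the slides leave open what threshold counts as acceptable.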

- 3. Classification vs Prediction
  - Sending out promotional literature to every new customer in the database can be quite costly. A more cost-efficient method is to target only those new customers who are likely to purchase a new computer: this is classification.
  - Predicting the number of major purchases that a customer will make during a fiscal year is prediction.
  - Supervised vs Unsupervised Learning
    - Supervised learning (classification): the training data (observations, measurements, etc.) are accompanied by labels indicating the class of each observation, and new data are classified based on the training set.
    - Unsupervised learning (clustering): given a set of measurements, observations, etc., the aim is to establish the existence of classes or clusters in the data. There are no training data, or the "training data" are not accompanied by class labels.
  - Issues – Data Preparation: data preprocessing can be used to help improve the accuracy, efficiency, and scalability of the classification or prediction process.
    - Data cleaning: remove or reduce noise and treat missing values.
    - Relevance analysis: many of the attributes in the data may be irrelevant to the classification or prediction task. Ex: data recording the day of the week on which a bank loan application was filed is unlikely to be relevant to the success of the application. Other attributes may be redundant. This step is known as feature selection.
    - Data transformation: data can be generalized to higher-level concepts, which is useful for continuous-valued attributes. Ex: income can be generalized to low, medium, high; street can be generalized to city. Generalization compresses the original training data, so fewer input/output operations may be involved during learning. When using neural networks (or other methods involving distance measurements), data may also be normalized.
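Generalization and normalization from the data transformation step can be sketched as two small Python functions. The income cut points (30,000 and 70,000) are hypothetical, since the slides name only the levels low, medium, and high. Min-max scaling to [0, 1] is one common normalization, assumed here because the slides do not say which one is meant.

```python
def generalize_income(income: float) -> str:
    """Map a continuous income to a higher-level concept.

    The cut points are hypothetical; the slides only name the target levels.
    """
    if income < 30_000:
        return "low"
    if income < 70_000:
        return "medium"
    return "high"


def min_max_normalize(values: list[float]) -> list[float]:
    """Rescale values linearly into [0, 1] (min-max normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant attribute: map everything to 0.0
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


print([generalize_income(x) for x in (25_000, 48_000, 120_000)])  # ['low', 'medium', 'high']
# YEARS column of the tenure training data from the slides.
print(min_max_normalize([3, 7, 2, 7, 6, 3]))  # [0.2, 1.0, 0.0, 1.0, 0.8, 0.2]
```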

- 4. Comparing Classification Methods
  - Predictive accuracy.
  - Speed and scalability: time to construct the model; time to use the model.
  - Robustness: handling noise and missing values.
  - Scalability: efficiency in large databases (data that is not memory-resident).
  - Interpretability: the level of understanding and insight provided by the model.
  - Goodness of rules: decision tree size; compactness of classification rules.
  - Classification Accuracy: Estimating Error Rates
    - Partition (training-and-testing): use two independent data sets, e.g., a training set (2/3) and a test set (1/3). Used for data sets with a large number of samples.
    - Cross-validation: divide the data set into k subsamples; use k-1 subsamples as training data and one subsample as test data (k-fold cross-validation). Used for data sets of moderate size.
    - Bootstrapping or leave-one-out (k-fold cross-validation with k equal to the number of samples): used for small data sets.
  - What is a decision tree?
    - A decision tree is a flow-chart-like tree structure.
    - An internal node denotes a test on an attribute.
    - A branch represents an outcome of the test; all tuples in a branch have the same value for the tested attribute.
    - A leaf node represents a class label or class label distribution.
    - To classify an unknown sample, the attribute values of the sample are tested against the decision tree: a path is traced from the root to a leaf node, which holds the class prediction for that sample.
    - Decision trees can easily be converted to classification rules.
  - Training Dataset: an example from Quinlan's ID3

    | Outlook | Temperature | Humidity | Windy | Class |
    |---|---|---|---|---|
    | sunny | hot | high | false | N |
    | sunny | hot | high | true | N |
    | overcast | hot | high | false | P |
    | rain | mild | high | false | P |
    | rain | cool | normal | false | P |
    | rain | cool | normal | true | N |
    | overcast | cool | normal | true | P |
    | sunny | mild | high | false | N |
    | sunny | cool | normal | false | P |
    | rain | mild | normal | false | P |
    | sunny | mild | normal | true | P |
    | overcast | mild | high | true | P |
    | overcast | hot | normal | false | P |
    | rain | mild | high | true | N |
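The holdout partition and k-fold cross-validation differ only in how sample indices are split. This Python sketch (the function names are hypothetical) shuffles indices with a fixed seed and returns index lists; training and evaluating a classifier on each split is left out. Calling `k_fold_splits` with k equal to the number of samples gives leave-one-out.

```python
import random


def holdout_split(n: int, train_fraction: float = 2 / 3, seed: int = 0):
    """Shuffle sample indices and split them into independent train/test sets."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = round(n * train_fraction)
    return idx[:cut], idx[cut:]


def k_fold_splits(n: int, k: int, seed: int = 0):
    """Yield (train, test) index lists; each of the k folds is the test set once."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]  # k subsamples of (nearly) equal size
    for i in range(k):
        test = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, test


train, test = holdout_split(15)
print(len(train), len(test))  # 10 5

# 14 samples (the size of the ID3 training set), 7-fold cross-validation.
for train, test in k_fold_splits(14, k=7):
    print(len(train), len(test))  # 12 2 on every fold
```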

- 5. A Sample Decision Tree (for the training dataset above)
  - Root: outlook
    - outlook = sunny → test humidity: high → N; normal → P
    - outlook = overcast → P
    - outlook = rain → test windy: true → N; false → P
  - Decision-Tree Classification Methods: the basic top-down decision tree generation approach usually consists of two phases:
    - Tree construction: at the start, all the training examples are at the root; examples are partitioned recursively based on selected attributes.
    - Tree pruning: aims at removing tree branches that may lead to errors when classifying test data (the training data may contain noise, outliers, …).
  - Choosing Split Attribute – ID3 Algorithm (all attributes are categorical)
    - Create a node N.
    - If the samples are all of the same class C, then return N as a leaf node labeled with C.
    - If attribute-list is empty, then return N as a leaf node labeled with the most common class.
    - Select the split-attribute with the highest information gain and label N with it.
    - For each value Ai of split-attribute, grow a branch from node N; let Si be the branch in which all tuples have the value Ai for split-attribute.
      - If Si is empty, then attach a leaf labeled with the most common class.
      - Else recursively run the algorithm at node Si.
    - Continue until all branches reach leaf nodes.
  - Information Gain (ID3/C4.5) (1)
    - Assume all attributes to be categorical (discrete-valued); continuous-valued attributes must be discretized.
    - Information gain is used to select the test attribute at each node in the tree; it is also called a measure of the goodness of split.
    - The attribute with the highest information gain is chosen as the test attribute for the current node.
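The ID3 steps above can be sketched in Python. This is a minimal illustration, not Quinlan's original code: it assumes the training set is a list of tuples with the class label last, represents the tree as nested dicts, and uses hypothetical helper names (`entropy`, `gain`, `id3`). Run on the 14-sample training set, it should rebuild the sample tree: outlook at the root, humidity under sunny, windy under rain.

```python
import math
from collections import Counter

# Quinlan's ID3 training set from the slides: outlook, temperature, humidity, windy, class.
ATTRS = ["outlook", "temperature", "humidity", "windy"]
ROWS = [tuple(line.split()) for line in """
sunny hot high false N
sunny hot high true N
overcast hot high false P
rain mild high false P
rain cool normal false P
rain cool normal true N
overcast cool normal true P
sunny mild high false N
sunny cool normal false P
rain mild normal false P
sunny mild normal true P
overcast mild high true P
overcast hot normal false P
rain mild high true N
""".strip().splitlines()]
# Every value each attribute can take, so that empty branches Si can occur.
DOMAINS = {a: sorted({r[a] for r in ROWS}) for a in range(len(ATTRS))}


def entropy(rows):
    """Expected information of the class distribution in rows."""
    counts = Counter(r[-1] for r in rows)
    total = len(rows)
    return -sum(c / total * math.log2(c / total) for c in counts.values())


def gain(rows, a):
    """Information gain of splitting rows on attribute index a."""
    expected = 0.0
    for value in {r[a] for r in rows}:
        subset = [r for r in rows if r[a] == value]
        expected += len(subset) / len(rows) * entropy(subset)
    return entropy(rows) - expected


def id3(rows, attrs):
    """Return a class label (leaf) or {attribute_name: {value: subtree}}."""
    labels = [r[-1] for r in rows]
    if len(set(labels)) == 1:        # all samples are of the same class C
        return labels[0]
    majority = Counter(labels).most_common(1)[0][0]
    if not attrs:                    # attribute-list is empty
        return majority
    best = max(attrs, key=lambda a: gain(rows, a))
    rest = [a for a in attrs if a != best]
    branches = {}
    for value in DOMAINS[best]:      # one branch per value Ai
        subset = [r for r in rows if r[best] == value]
        # An empty Si gets a leaf labeled with the most common class.
        branches[value] = id3(subset, rest) if subset else majority
    return {ATTRS[best]: branches}


tree = id3(ROWS, list(range(len(ATTRS))))
print(tree)
```

The pseudocode does not say how ties are resolved; here `max` keeps the first attribute with the highest gain, and `Counter.most_common` returns the first-seen class among equal counts.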

- 6. Information Gain (ID3/C4.5) (2)
  - Assume that there are two classes, P and N. Let the set of examples S contain p elements of class P and n elements of class N. The amount of information needed to decide whether an arbitrary example in S belongs to P or N is defined as

    $$I(p, n) = -\frac{p}{p+n}\log_2\frac{p}{p+n} - \frac{n}{p+n}\log_2\frac{n}{p+n}$$

  - Assume that using attribute A as the root in the tree will partition S into sets $\{S_1, S_2, \ldots, S_v\}$. If $S_i$ contains $p_i$ examples of P and $n_i$ examples of N, the information needed to classify objects in all subtrees $S_i$ is

    $$E(A) = \sum_{i=1}^{v} \frac{p_i + n_i}{p + n}\, I(p_i, n_i)$$

  - Information Gain (ID3/C4.5) (3): the attribute A is selected such that the information gain

    $$\mathrm{gain}(A) = I(p, n) - E(A)$$

    is maximal, that is, E(A) is minimal, since I(p, n) is the same for all attributes at a node.
  - In the given sample data, attribute outlook is chosen to split at the root: gain(outlook) = 0.246, gain(temperature) = 0.029, gain(humidity) = 0.151, gain(windy) = 0.048.
  - Information Gain (ID3/C4.5) (4): Examples. See Table 7.1.
    - Class label: buys_computer, with two values: yes, no. So m = 2; C1 corresponds to yes and C2 corresponds to no.
    - There are 9 samples of class yes and 5 samples of class no. The expected information needed to classify a given sample is

      $$I(s_1, s_2) = I(9, 5) = -\frac{9}{14}\log_2\frac{9}{14} - \frac{5}{14}\log_2\frac{5}{14} = 0.940$$

  - Information Gain (ID3/C4.5) (5): next, compute the entropy of each attribute, starting with the attribute age:
    - For age = "<=30": $s_{11} = 2$, $s_{21} = 3$, $I(s_{11}, s_{21}) = 0.971$
    - For age = "31..40": $s_{12} = 4$, $s_{22} = 0$, $I(s_{12}, s_{22}) = 0$
    - For age = ">40": $s_{13} = 3$, $s_{23} = 2$, $I(s_{13}, s_{23}) = 0.971$
    - Using equation (7.2), the expected information needed to classify a given sample if the samples are partitioned by age is

      $$E(\text{age}) = \frac{5}{14} I(s_{11}, s_{21}) + \frac{4}{14} I(s_{12}, s_{22}) + \frac{5}{14} I(s_{13}, s_{23}) = 0.694$$

    - Hence, the gain in information from such a partitioning is $\mathrm{Gain}(\text{age}) = I(s_1, s_2) - E(\text{age}) = 0.246$.
    - Similarly, Gain(income) = 0.029, Gain(student) = 0.151, and Gain(credit_rating) = 0.048.
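The formulas for I(p, n), E(A), and gain(A) can be checked numerically. This Python sketch takes, for each attribute, the (p_i, n_i) counts read off the 14-sample weather training set (the age partition in the buys_computer example has the same counts as outlook) and reproduces I(9, 5) = 0.940 and E(outlook) = 0.694. The function names mirror the slide notation but are otherwise assumptions.

```python
import math


def info(p: int, n: int) -> float:
    """I(p, n): expected information for a set with p P-samples and n N-samples."""
    total = p + n
    result = 0.0
    for count in (p, n):
        if count:  # 0 * log2(0) is taken as 0
            frac = count / total
            result -= frac * math.log2(frac)
    return result


def expected_info(partition) -> float:
    """E(A) for a partition given as [(p_i, n_i), ...], one pair per value of A."""
    p = sum(pi for pi, _ in partition)
    n = sum(ni for _, ni in partition)
    return sum((pi + ni) / (p + n) * info(pi, ni) for pi, ni in partition)


def gain(partition) -> float:
    """gain(A) = I(p, n) - E(A)."""
    p = sum(pi for pi, _ in partition)
    n = sum(ni for _, ni in partition)
    return info(p, n) - expected_info(partition)


# (p_i, n_i) counts per attribute value in the weather training set.
PARTITIONS = {
    "outlook": [(2, 3), (4, 0), (3, 2)],      # sunny, overcast, rain
    "temperature": [(2, 2), (4, 2), (3, 1)],  # hot, mild, cool
    "humidity": [(3, 4), (6, 1)],             # high, normal
    "windy": [(6, 2), (3, 3)],                # false, true
}

print(f"I(9,5) = {info(9, 5):.3f}")                                # 0.940
print(f"E(outlook) = {expected_info(PARTITIONS['outlook']):.3f}")  # 0.694
for attr, part in PARTITIONS.items():
    print(f"gain({attr}) = {gain(part):.3f}")
```

Rounded to three decimals, the gains print as 0.247 (outlook), 0.029, 0.152 (humidity), and 0.048. The slide values 0.246 and 0.151 match the exact values (about 0.24675 and 0.15184) truncated rather than rounded; either way, outlook has the largest gain.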

- 7. How to use a tree?
  - Directly: test the attribute values of the unknown sample against the tree. A path is traced from the root to a leaf, which holds the label.
  - Indirectly: the decision tree is converted to classification rules, one rule for each path from the root to a leaf. IF-THEN rules are easier for humans to understand. Example: IF age = "<=30" AND student = "no" THEN buys_computer = "no".
  - Tree Pruning
    - A decision tree constructed using the training data may have too many branches/leaf nodes, caused by noise or overfitting. This may result in poor accuracy for unseen samples.
    - Prune the tree: merge a subtree into a leaf node, using a set of data different from the training data. At a tree node, if the accuracy without splitting is higher than the accuracy with splitting, replace the subtree with a leaf node and label it using the majority class.
    - Pruning criteria: pessimistic pruning (C4.5); MDL (SLIQ and SPRINT); cost-complexity pruning (CART).
  - Classification and Databases
    - Classification is a classical problem extensively studied by statisticians and by AI researchers, especially in machine learning.
    - Database researchers re-examined the problem in the context of large databases: most previous studies used small data sets, and most algorithms are memory-resident.
    - Recent data mining research contributes to scalability, generalization-based classification, and parallel and distributed processing.
  - Classifying Large Datasets
    - Goal: classifying data sets with millions of examples and a few hundred or even thousands of attributes with reasonable speed.
    - Decision trees seem to be a good choice: they learn relatively fast compared with other classification methods; they can be converted into simple, easy-to-understand classification rules; they can be used to generate SQL queries for accessing databases; and their classification accuracy is comparable with other methods.
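Both ways of using a tree can be sketched over the sample decision tree for the weather data (outlook at the root). The nested-dict representation and the function names are assumptions for illustration: `classify` traces a root-to-leaf path directly, and `to_rules` emits one IF-THEN rule per path.

```python
# Sample decision tree from the slides as nested dicts:
# {attribute: {value: subtree_or_class_label}}.
TREE = {"outlook": {
    "sunny": {"humidity": {"high": "N", "normal": "P"}},
    "overcast": "P",
    "rain": {"windy": {"true": "N", "false": "P"}},
}}


def classify(tree, sample: dict) -> str:
    """Direct use: follow the branch matching each tested attribute value down to a leaf."""
    while isinstance(tree, dict):
        (attribute, branches), = tree.items()  # each internal node tests one attribute
        tree = branches[sample[attribute]]
    return tree


def to_rules(tree, conditions=()):
    """Indirect use: one IF-THEN rule per root-to-leaf path."""
    if not isinstance(tree, dict):  # leaf: emit the accumulated conditions
        lhs = " AND ".join(f'{a} = "{v}"' for a, v in conditions)
        return [f'IF {lhs} THEN class = "{tree}"']
    (attribute, branches), = tree.items()
    rules = []
    for value, subtree in branches.items():
        rules += to_rules(subtree, conditions + ((attribute, value),))
    return rules


sample = {"outlook": "sunny", "temperature": "cool", "humidity": "high", "windy": "true"}
print(classify(TREE, sample))  # N
for rule in to_rules(TREE):
    print(rule)
```

The first rule printed is `IF outlook = "sunny" AND humidity = "high" THEN class = "N"`, the same shape as the buys_computer example rule above.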

- 8. Scalable Decision Tree Methods
  - Most algorithms assume the data can fit in memory. Data mining research contributes to the scalability issue, especially for decision trees.
  - Successful examples:
    - SLIQ (EDBT'96, Mehta et al.'96)
    - SPRINT (VLDB'96, J. Shafer et al.'96)
    - PUBLIC (VLDB'98, Rastogi & Shim'98)
    - RainForest (VLDB'98, Gehrke et al.'98)
  - Previous Efforts on Scalability
    - Incremental tree construction (Quinlan'86): use partial data to build a tree, test the other examples, and use the misclassified ones to rebuild the tree iteratively.
    - Data reduction (Catlett'91): reduce data size by sampling and discretization; still a main-memory algorithm.
    - Data partition and merge (Chan and Stolfo'91): partition the data and build a tree for each partition, then merge the multiple trees into a combined tree; experimental results indicated reduced classification accuracy.
  - Presentation of Classification Rules (figure not preserved)
  - Other Classification Methods: Bayesian classification, neural networks, genetic algorithms, rough set approach, k-nearest neighbor classifier, case-based reasoning (CBR), fuzzy logic, support vector machine (SVM).

- 9. Bayesian Classification
  - Bayesian classifiers are statistical classifiers. They can predict class membership probabilities, such as the probability that a given sample belongs to a particular class.
  - Bayesian classification is based on Bayes theorem.
  - The naive Bayesian classifier is comparable in performance with decision tree and neural network classifiers.
  - Bayesian classifiers also have high accuracy and speed when applied to large databases.
  - Bayes Theorem (1)
    - Let X be a data sample whose class label is unknown. Let H be some hypothesis, such as that the data sample X belongs to a specified class C.
    - We want to determine P(H|X), the probability that the hypothesis H holds given the observed data sample X. P(H|X) is the posterior (a posteriori) probability of H conditioned on X.
    - Example: suppose the world of data samples consists of fruits, described by their color and shape. Suppose that X is red and round, and that H is the hypothesis that X is an apple. Then P(H|X) reflects our confidence that X is an apple given that we have seen that X is red and round.
  - Bayes Theorem (2)
    - P(H) is the prior (a priori) probability of H: the probability that any given data sample is an apple, regardless of how the data sample looks.
    - The posterior probability is based on more information (such as background knowledge) than the prior probability, which is independent of X.
    - Bayes theorem is

      $$P(H \mid X) = \frac{P(X \mid H)\, P(H)}{P(X)}$$

    - See Example 7.4 for an example of naive Bayesian classification.
  - Predictive Modeling in Databases
    - What if we would like to predict a continuous value, rather than a categorical label? Prediction of continuous values can be modeled by statistical techniques of regression.
    - Examples: a model to predict the salary of college graduates with 10 years of work experience; the potential sales of a new product given its price.
    - Many problems can be solved by linear regression.
    - Software packages for solving regression problems: SAS, SPSS, S-Plus.
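The slides defer the worked example to Example 7.4, which is not reproduced here. As a stand-in, this Python sketch applies naive Bayesian classification to the 14-sample training set from Quinlan's ID3 example. It assumes class-conditional independence of the attributes (the "naive" assumption), estimates every probability by simple relative frequency without smoothing, and classifies the hypothetical sample X = (sunny, cool, high, true). The function names are illustrative only.

```python
from collections import Counter, defaultdict

# Training set from the slides: (outlook, temperature, humidity, windy, class).
ROWS = [tuple(line.split()) for line in """
sunny hot high false N
sunny hot high true N
overcast hot high false P
rain mild high false P
rain cool normal false P
rain cool normal true N
overcast cool normal true P
sunny mild high false N
sunny cool normal false P
rain mild normal false P
sunny mild normal true P
overcast mild high true P
overcast hot normal false P
rain mild high true N
""".strip().splitlines()]


def train(rows):
    """Count class frequencies and, per class, the frequency of each attribute value."""
    class_counts = Counter(r[-1] for r in rows)
    value_counts = defaultdict(Counter)  # (attribute index, class) -> Counter of values
    for r in rows:
        for i, value in enumerate(r[:-1]):
            value_counts[(i, r[-1])][value] += 1
    return class_counts, value_counts


def score(x, cls, class_counts, value_counts):
    """P(C) * prod_k P(x_k | C): Bayes numerator under the naive independence assumption."""
    prob = class_counts[cls] / sum(class_counts.values())
    for i, value in enumerate(x):
        prob *= value_counts[(i, cls)][value] / class_counts[cls]
    return prob


def predict(x, class_counts, value_counts):
    """Return the most probable class and the normalized posteriors P(C | X)."""
    scores = {c: score(x, c, class_counts, value_counts) for c in class_counts}
    evidence = sum(scores.values())  # P(X), summed over the classes
    if evidence == 0:
        raise ValueError("every class has zero probability; consider smoothing")
    posterior = {c: s / evidence for c, s in scores.items()}
    return max(posterior, key=posterior.get), posterior


class_counts, value_counts = train(ROWS)
label, posterior = predict(("sunny", "cool", "high", "true"), class_counts, value_counts)
print(label, round(posterior[label], 3))  # N 0.795
```

Because there is no smoothing, an attribute value never seen together with a class drives that class's score to zero; Laplace (add-one) estimates are a common remedy but are not part of the slides.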

- 10. Linear Regression
  - Data are modeled using a straight line; this is the simplest form of regression.
  - Bivariate linear regression models a random variable Y (called a response variable) as a linear function of another random variable X (called a predictor variable):

    $$Y = \alpha + \beta X$$

  - See Example 7.6 for an example of linear regression.
  - Other regression models: multiple regression; log-linear models.
  - Prediction: Numerical Data (figure not preserved)
  - Prediction: Categorical Data (figure not preserved)
  - Conclusion
    - Classification is an extensively studied problem (mainly in statistics, machine learning, and neural networks).
    - Classification is probably one of the most widely used data mining techniques, with many applications.
    - Scalability is still an important issue for database applications. Combining classification with database techniques should be a promising research topic.
    - Research direction: classification of non-relational data, e.g., text, spatial, multimedia, etc.
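The slides give the model form Y = α + βX but not how α and β are obtained. Assuming the usual least-squares criterion, the coefficients have a closed form: β = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and α = ȳ − βx̄. The Python sketch below uses hypothetical toy data lying exactly on Y = 2 + 3X, so the fit should recover those values.

```python
def fit_line(xs, ys):
    """Least-squares estimates (alpha, beta) for Y = alpha + beta * X."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equally long sequences with at least 2 points")
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all X values are equal; the slope is undefined")
    beta = sxy / sxx
    alpha = y_mean - beta * x_mean
    return alpha, beta


# Hypothetical toy data on the line Y = 2 + 3X (not from the slides).
xs = [0, 1, 2, 3, 4]
ys = [2, 5, 8, 11, 14]
alpha, beta = fit_line(xs, ys)
print(alpha, beta)        # 2.0 3.0
print(alpha + beta * 10)  # prediction for X = 10: 32.0
```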

- 11. References
  - C. Apte and S. Weiss. Data mining with decision trees and decision rules. Future Generation Computer Systems, 13, 1997.
  - L. Breiman, J. Friedman, R. Olshen, and C. Stone. Classification and Regression Trees. Wadsworth International Group, 1984.
  - P. K. Chan and S. J. Stolfo. Learning arbiter and combiner trees from partitioned data for scaling machine learning. In Proc. 1st Int. Conf. Knowledge Discovery and Data Mining (KDD'95), pages 39-44, Montreal, Canada, August 1995.
  - U. M. Fayyad. Branching on attribute values in decision tree generation. In Proc. 1994 AAAI Conf., pages 601-606, AAAI Press, 1994.
  - J. Gehrke, R. Ramakrishnan, and V. Ganti. RainForest: A framework for fast decision tree construction of large datasets. In Proc. 1998 Int. Conf. Very Large Data Bases, pages 416-427, New York, NY, August 1998.
  - M. Kamber, L. Winstone, W. Gong, S. Cheng, and J. Han. Generalization and decision tree induction: Efficient classification in data mining. In Proc. 1997 Int. Workshop Research Issues on Data Engineering (RIDE'97), pages 111-120, Birmingham, England, April 1997.
  - J. Magidson. The CHAID approach to segmentation modeling: Chi-squared automatic interaction detection. In R. P. Bagozzi, editor, Advanced Methods of Marketing Research, pages 118-159. Blackwell Business, Cambridge, Massachusetts, 1994.
  - M. Mehta, R. Agrawal, and J. Rissanen. SLIQ: A fast scalable classifier for data mining. In Proc. 1996 Int. Conf. Extending Database Technology (EDBT'96), Avignon, France, March 1996.
  - S. K. Murthy. Automatic construction of decision trees from data: A multi-disciplinary survey. Data Mining and Knowledge Discovery, 2(4): 345-389, 1998.
  - J. R. Quinlan. Bagging, boosting, and C4.5. In Proc. 13th Natl. Conf. on Artificial Intelligence (AAAI'96), pages 725-730, Portland, OR, August 1996.
  - R. Rastogi and K. Shim. PUBLIC: A decision tree classifier that integrates building and pruning. In Proc. 1998 Int. Conf. Very Large Data Bases, pages 404-415, New York, NY, August 1998.
  - J. Shafer, R. Agrawal, and M. Mehta. SPRINT: A scalable parallel classifier for data mining. In Proc. 1996 Int. Conf. Very Large Data Bases, pages 544-555, Bombay, India, September 1996.
  - S. M. Weiss and C. A. Kulikowski. Computer Systems that Learn: Classification and Prediction Methods from Statistics, Neural Nets, Machine Learning, and Expert Systems. Morgan Kaufmann, 1991.