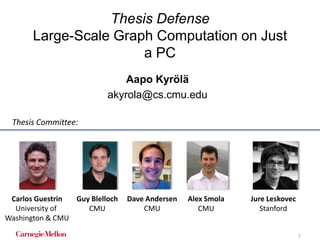

Large-Scale Graph Computation on Just a PC: Aapo Kyrola Ph.D. thesis defense

- 1. Thesis Defense Large-Scale Graph Computation on Just a PC Aapo Kyrölä akyrola@cs.cmu.edu Carlos Guestrin University of Washington & CMU Guy Blelloch CMU Alex Smola CMU Dave Andersen CMU Jure Leskovec Stanford Thesis Committee: 1

- 2. Research Fields This thesis Machine Learning / Data Mining Graph analysis and mining External memory algorithms research Systems research Databases Motivation and applications Research contributions 2

- 3. Why Graphs? Large-Scale Graph Computation on Just a PC 3

- 4. BigData with Structure: BigGraph social graph social graph follow-graph consumer- products graph user-movie ratings graph DNA interaction graph WWW link graph Communication networks (but “only 3 hops”) 4

- 5. Why on a single machine? Can’t we just use the Cloud? Large-Scale Graph Computation on Just a PC

- 6. Why use a cluster? Two reasons: 1. One computer cannot handle my graph problem in a reasonable time. 2. I need to solve the problem very fast.

- 7. Why use a cluster? Two reasons: 1. One computer cannot handle my graph problem in a reasonable time. 2. I need to solve the problem very fast. Our work expands the space of feasible problems on one machine (PC): – Our experiments use the same graphs as previous papers on distributed graph computation, or bigger ones. (+ we can do the Twitter graph on a laptop) Our work raises the bar on required performance for a “complicated” system.

- 8. Benefits of single machine systems Assuming it can handle your big problems… 1. Programmer productivity – Global state, debuggers… 2. Inexpensive to install, administer, less power. 3. Scalability – Use cluster of single-machine systems to solve many tasks in parallel. Idea: Trade latency for throughput < 32K bits/sec 8

- 9. Computing on Big Graphs Large-Scale Graph Computation on Just a PC 9

- 10. Big Graphs != Big Data 10 Data size: 140 billion connections ≈ 1 TB Not a problem! Computation: Hard to scale Twitter network visualization, by Akshay Java, 2009

- 11. Research Goal Compute on graphs with billions of edges, in a reasonable time, on a single PC. – Reasonable = close to numbers previously reported for distributed systems in the literature. Experiment PC: Mac Mini (2012) 11

- 12. Terminology • (Analytical) Graph Computation: – Whole graph is processed, typically for several iterations → vertex-centric computation. – Examples: Belief Propagation, PageRank, Community detection, Triangle Counting, Matrix Factorization, Machine Learning… • Graph Queries (database) – Selective graph queries (compare to SQL queries) – Traversals: shortest-path, friends-of-friends, …

- 13. GraphChi (Parallel Sliding Windows) GraphChi-DB (Partitioned Adjacency Lists) Online graph updates Batch comp. Evolving graph Incremental comp. DrunkardMob: Parallel Random walk simulation GraphChi^2 Triangle Counting Item-Item Similarity PageRank SALSA HITS Graph Contraction Minimum Spanning Forest k-Core Graph Computation Shortest Path Friends-of-Friends Induced Subgraphs Neighborhood query Edge and vertex properties Link prediction Weakly Connected Components Strongly Connected Components Community Detection Label Propagation Multi-BFS Graph sampling Graph Queries Matrix Factorization Loopy Belief Propagation Co-EM Graph traversal Thesis statement The Parallel Sliding Windows algorithm and the Partitioned Adjacency Lists data structure enable computation on very large graphs in external memory, on just a personal computer. 13

- 15. GraphChi (Parallel Sliding Windows) Batch comp. Evolving graph Triangle Counting Item-Item Similarity PageRank SALSA HITS Graph Contraction Minimum Spanning Forest k-Core Graph Computation Weakly Connected Components Strongly Connected Components Community Detection Label Propagation Multi-BFS Matrix Factorization Loopy Belief Propagation Co-EM GraphChi-DB (Partitioned Adjacency Lists) Online graph updates DrunkardMob: Parallel Random walk simulation GraphChi^2 Incremental comp. Shortest Path Friends-of-Friends Induced Subgraphs Neighborhood query Edge and vertex properties Link prediction Graph sampling Graph Queries Graph traversal 15

- 16. Computational Model • Graph G = (V, E) – directed edges: e = (source, destination) – each edge and vertex associated with a value (user-defined type) – vertex and edge values can be modified • (structure modification also supported) Terms: for an edge e from A to B, e is an out-edge of A and an in-edge of B.

- 17. Vertex-centric Programming • “Think like a vertex” • Popularized by the Pregel and GraphLab projects – Historically, systolic computation and the Connection Machine • General form: MyFunc(vertex) { // modify neighborhood } • Example: function Pagerank(vertex): insum = sum(edge.value for edge in vertex.inedges); vertex.value = 0.15 + 0.85 * insum; foreach edge in vertex.outedges: edge.value = vertex.value / vertex.num_outedges
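
The vertex-centric update on this slide can be sketched in a few lines of Python. This is a minimal illustration, not GraphChi's API: the `Vertex` and `Edge` classes and the `pagerank_update` name are hypothetical, and the update assumes the conventional form with reset probability 0.15 and damping factor 0.85.

```python
from dataclasses import dataclass, field


@dataclass
class Edge:
    value: float = 0.0


@dataclass
class Vertex:
    value: float = 1.0
    inedges: list = field(default_factory=list)
    outedges: list = field(default_factory=list)


def pagerank_update(vertex, reset=0.15):
    """One vertex-centric update: read in-edge values, write out-edge values."""
    insum = sum(edge.value for edge in vertex.inedges)
    vertex.value = reset + (1.0 - reset) * insum
    if vertex.outedges:  # sink vertices have nothing to write
        share = vertex.value / len(vertex.outedges)
        for edge in vertex.outedges:
            edge.value = share


# Hypothetical vertex with two in-edges carrying 0.5 each and two out-edges.
v = Vertex(inedges=[Edge(0.5), Edge(0.5)], outedges=[Edge(), Edge()])
pagerank_update(v)
```

The function touches only the vertex and its own edges, which is what lets a system schedule it one sub-graph at a time.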

- 18. Computational Setting Constraints: A. Not enough memory to store the whole graph in memory, nor all the vertex values. B. Enough memory to store one vertex and its edges w/ associated values. 18

- 19. The Main Challenge of Disk-based Graph Computation: Random Access ~ 100K reads / sec (commodity) ~ 1M reads / sec (high-end arrays) << 5-10 M random edges / sec to achieve “reasonable performance” 19

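- 20. Random Access Problem • Disk file of edge values, laid out per vertex: A: in-edges, A: out-edges, B: in-edges, B: out-edges. • Processing sequentially: an edge x between A and B is stored with only one of them, so reaching it from the other vertex causes a random read or a random write. Moral: You can either access in- or out-edges sequentially, but not both!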

- 21. Our Solution Parallel Sliding Windows (PSW) 21

- 22. Parallel Sliding Windows: Phases • PSW processes the graph one sub-graph at a time: 1. Load 2. Compute 3. Write • In one iteration, the whole graph is processed. – And typically, the next iteration is then started.

- 23. PSW: Shards and Intervals • Vertices are numbered from 1 to n – P intervals – sub-graph = interval of vertices • interval(1), interval(2), …, interval(P) correspond to shard(1), shard(2), …, shard(P) (example boundaries: 1, 100, 700, 10000). • Edges are partitioned by destination. In shards, edges sorted by source.

- 24. Example: Layout • Shard: in-edges for interval of vertices; sorted by source-id • Shards small enough to fit in memory; balance size of shards • Shard 1: in-edges for vertices 1..100, sorted by source_id • Shard 2: vertices 101..700 • Shard 3: vertices 701..1000 • Shard 4: vertices 1001..10000
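
As a rough illustration of this layout, the following Python sketch splits an edge list into shards by destination interval and sorts each shard by source. The function name and the sample edges are made up for the example; only the interval boundaries come from the slide.

```python
from bisect import bisect_left


def build_shards(edges, interval_ends):
    """Partition (src, dst) edges by destination interval; sort shards by source.

    interval_ends[i] is the last vertex id of interval i (inclusive).
    Every destination is assumed to be <= interval_ends[-1].
    """
    shards = [[] for _ in interval_ends]
    for src, dst in edges:
        shards[bisect_left(interval_ends, dst)].append((src, dst))
    for shard in shards:
        shard.sort()  # by source id, ties broken by destination
    return shards


interval_ends = [100, 700, 1000, 10000]  # intervals from the example layout
edges = [(5, 90), (800, 42), (3, 650), (650, 3), (9000, 101), (120, 9999)]
shards = build_shards(edges, interval_ends)
```

Because each shard is sorted by source, the out-edges of any vertex interval form one contiguous block inside every shard.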

- 25. PSW: Loading Sub-graph • Load subgraph for vertices 1..100 • Load all in-edges in memory: Shard 1 (in-edges for vertices 1..100, sorted by source_id) • What about out-edges? Arranged in sequence in other shards (Shard 2, Shard 3, Shard 4) • Intervals: vertices 1..100, 101..700, 701..1000, 1001..10000

- 26. PSW: Loading Sub-graph • Load subgraph for vertices 101..700 • Load all in-edges in memory • Out-edge blocks in memory, read from the other shards • Intervals: vertices 1..100, 101..700, 701..1000, 1001..10000

- 27. Parallel Sliding Windows Only P large reads and writes for each interval = P² random accesses on one full pass. Works well on both SSD and magnetic hard disks!
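
The P² figure can be checked with a small simulation. The sketch below is an assumption-laden toy, not GraphChi code: shards are in-memory lists, `psw_pass` is a hypothetical name, and each list slice stands in for one contiguous block read from disk.

```python
from bisect import bisect_left, bisect_right


def psw_pass(shards, interval_bounds):
    """Count the contiguous block reads made by one full PSW pass.

    shards[q] holds (src, dst) edges with dst in interval q, sorted by src.
    interval_bounds[p] = (first, last) vertex id of interval p, inclusive.
    """
    reads = 0
    for p, (first, last) in enumerate(interval_bounds):
        subgraph = list(shards[p])  # memory shard: all in-edges of interval p
        reads += 1
        for q, shard in enumerate(shards):
            if q == p:
                continue
            sources = [src for src, _ in shard]
            lo = bisect_left(sources, first)
            hi = bisect_right(sources, last)
            subgraph.extend(shard[lo:hi])  # sliding window: one contiguous block
            reads += 1
        # The update function would now run on vertices first..last.
    return reads


bounds = [(1, 2), (3, 4), (5, 6)]
toy_shards = [
    [(1, 2), (3, 1), (5, 2)],  # in-edges of vertices 1..2
    [(1, 3), (4, 3)],          # in-edges of vertices 3..4
    [(2, 5), (6, 5)],          # in-edges of vertices 5..6
]
total_reads = psw_pass(toy_shards, bounds)
```

With P = 3 intervals the pass performs 3 reads per interval, 9 in total, independent of the number of edges.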

- 28. “GAUSS-SEIDEL” / ASYNCHRONOUS How PSW computes. Chapter 6 + Appendix. Joint work: Julian Shun

- 29. Synchronous vs. Gauss-Seidel • Bulk-Synchronous Parallel (Jacobi iterations) – Updates see neighbors' values from previous iteration. [Most systems are synchronous] • Asynchronous (Gauss-Seidel iterations) – Updates see most recent values. – GraphLab is asynchronous.

- 30. PSW runs Gauss-Seidel • Load subgraph for vertices 101..700 • Load all in-edges in memory • Out-edge blocks in memory, read from the other shards • Fresh values in this “window” from previous phase • Intervals: vertices 1..100, 101..700, 701..1000, 1001..10000

- 31. Synchronous (Jacobi) Each vertex chooses minimum label of neighbor. Labels per iteration: 1 2 5 3 4 → 1 1 2 3 3 → 1 1 1 2 3 → 1 1 1 1 2 → 1 1 1 1 1. Bulk-Synchronous: requires graph-diameter-many iterations to propagate the minimum label.

- 32. PSW is Asynchronous (Gauss-Seidel) Each vertex chooses minimum label of neighbor. Labels per iteration: 1 2 4 3 5 → 1 1 1 3 3 → 1 1 1 1 1. Gauss-Seidel: expected # iterations on random schedule on a chain graph = (N - 1) / (e – 1) ≈ 60% of synchronous
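
The two chain examples can be replayed with a short Python sketch. It models only minimum-label propagation on a chain; the function names are hypothetical, and the update order `[0, 1, 3, 2, 4]` is one schedule (an assumption, the slide does not state it) that reproduces the intermediate labels 1 1 1 3 3.

```python
def sync_iterations(labels):
    """Jacobi: every vertex reads its neighbors' labels from the previous iteration."""
    labels, n, iters = list(labels), len(labels), 0
    while len(set(labels)) > 1:
        old = labels
        labels = [min(old[max(i - 1, 0):i + 2]) for i in range(n)]
        iters += 1
    return iters


def gauss_seidel_iterations(labels, order):
    """Gauss-Seidel: vertices update in `order` and see the most recent labels."""
    labels, iters = list(labels), 0
    while len(set(labels)) > 1:
        for i in order:
            labels[i] = min(labels[max(i - 1, 0):i + 2])
        iters += 1
    return iters


jacobi = sync_iterations([1, 2, 5, 3, 4])
gauss_seidel = gauss_seidel_iterations([1, 2, 4, 3, 5], [0, 1, 3, 2, 4])
```

The Gauss-Seidel count depends on the schedule: updating the same chain left to right converges in a single sweep, which is why the slide quotes an expectation over random schedules.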

- 33. Label Propagation: # iterations, Synchronous vs. PSW Gauss-Seidel (average, random schedule) • Three example graphs with side length = 100: 199 vs. ~57; 100 vs. ~29; 298 vs. ~52 • Natural graphs (web, social): graph diameter - 1 vs. ~0.6 * diameter • Open theoretical question! Joint work: Julian Shun. Chapter 6

- 34. PSW & External Memory Algorithms Research • PSW is a new technique for implementing many fundamental graph algorithms – Especially simple (compared to previous work) for directed graph problems: PSW handles both in- and out-edges • We propose new graph contraction algorithm based on PSW – Minimum-Spanning Forest & Connected Components • … utilizing the Gauss-Seidel “acceleration” Joint work: Julian Shun SEA 2014, Chapter 6 + Appendix 34

- 35. GRAPHCHI: SYSTEM EVALUATION Sneak peek Consult the paper for a comprehensive evaluation: • HD vs. SSD • Striping data across multiple hard drives • Comparison to an in-memory version • Bottlenecks analysis • Effect of the number of shards • Block size and performance. 35

- 36. GraphChi • C++ implementation: 8,000 lines of code – Java-implementation also available • Several optimizations to PSW (see paper). Source code and examples: http://github.com/graphchi 37

- 37. Experiment Setting • Mac Mini (Apple Inc.) – 8 GB RAM – 256 GB SSD, 1 TB hard drive – Intel Core i5, 2.5 GHz • Experiment graphs:

  | Graph | Vertices | Edges | P (shards) | Preprocessing |
  |---|---|---|---|---|
  | live-journal | 4.8M | 69M | 3 | 0.5 min |
  | netflix | 0.5M | 99M | 20 | 1 min |
  | twitter-2010 | 42M | 1.5B | 20 | 2 min |
  | uk-2007-05 | 106M | 3.7B | 40 | 31 min |
  | uk-union | 133M | 5.4B | 50 | 33 min |
  | yahoo-web | 1.4B | 6.6B | 50 | 37 min |

- 38. Comparison to Existing Systems (bar charts, runtime in minutes) • PageRank, Twitter-2010 (1.5B edges): Spark (50 machines) vs. GraphChi (Mac Mini) • WebGraph Belief Propagation (U Kang et al.), Yahoo-web (6.7B edges): Pegasus / Hadoop (100 machines) vs. GraphChi (Mac Mini) • Matrix Factorization (Alt. Least Sqr.), Netflix (99M edges): GraphLab v1 (8 cores) vs. GraphChi (Mac Mini) • Triangle Counting, twitter-2010 (1.5B edges): Hadoop (1636 machines) vs. GraphChi (Mac Mini) Notes: comparison results do not include the time to transfer the data to the cluster, preprocessing, or the time to load the graph from disk. GraphChi computes asynchronously, while all but GraphLab compute synchronously. See the paper for more comparisons. On a Mac Mini, GraphChi can solve problems as big as existing large-scale systems can, with comparable performance.

- 39. PowerGraph Comparison • PowerGraph / GraphLab 2 outperforms previous systems by a wide margin on natural graphs. • With 64 more machines, 512 more CPUs: – Pagerank: 40x faster than GraphChi – Triangle counting: 30x faster than GraphChi. OSDI’12 GraphChi has good performance / CPU. vs. GraphChi 40

- 40. In-memory Comparison • Comparison to state-of-the-art, 5 iterations of PageRank / Twitter (1.5B edges): GraphChi (Mac Mini, SSD) 790 secs; Ligra (J. Shun, Blelloch; 40-core Intel E7-8870) 15 secs. • However, sometimes a better algorithm is available for in-memory than for external memory / distributed. • Total runtime comparison to 1-shard GraphChi, with initial load + output write taken into account: Ligra (J. Shun, Blelloch; 8-core Xeon 5550) 230 s + preproc 144 s; PSW in-mem version, 700 shards (see Appendix; 8-core Xeon 5550) 100 s + preproc 210 s.

- 41. Scalability / Input Size [SSD] • Throughput: number of edges processed / second. Plot: PageRank throughput (Mac Mini, SSD) vs. graph size in billions of edges, for domain, twitter-2010, uk-2007-05, uk-union, yahoo-web. Conclusion: the throughput remains roughly constant when graph size is increased. GraphChi with hard-drive is ~ 2x slower than SSD (if computational cost low). Paper: scalability of other applications.

- 43. GraphChi (Parallel Sliding Windows) GraphChi-DB (Partitioned Adjacency Lists) Online graph updates Batch comp. Evolving graph Incremental comp. DrunkardMob: Parallel Random walk simulation GraphChi^2 Triangle Counting Item-Item Similarity PageRank SALSA HITS Graph Contraction Minimum Spanning Forest k-Core Graph Computation Shortest Path Friends-of-Friends Induced Subgraphs Neighborhood query Edge and vertex properties Link prediction Weakly Connected Components Strongly Connected Components Community Detection Label Propagation Multi-BFS Graph sampling Graph Queries Matrix Factorization Loopy Belief Propagation Co-EM Graph traversal 44

- 44. Research Questions • What if there is lot of metadata associated with edges and vertices? • How to do graph queries efficiently while retaining computational capabilities? • How to add edges efficiently to the graph? Can we design a graph database based on GraphChi? 45

- 45. Existing Graph Database Solutions 46 1) Specialized single-machine graph databases Problems: • Poor performance with data >> memory • No/weak support for analytical computation 2) Relational / key-value databases as graph storage Problems: • Large indices • In-edge / out-edge dilemma • No/weak support for analytical computation

- 46. PARTITIONED ADJACENCY LISTS (PAL): DATA STRUCTURE Our solution 47

- 47. Review: Edges in Shards • Edge = (src, dst); partition by dst • Shard 1, Shard 2, Shard 3, …, Shard P, each sorted by source_id • Interval boundaries: 0, 100, 101, 700, …, N

- 48. Shard Structure (Basic) (source, destination) pairs: (1, 8), (1, 193), (1, 76420), (3, 12), (3, 872), (7, 193), (7, 212), (7, 89139), …

- 49. Shard Structure (Basic) Compressed Sparse Row (CSR) • Pointer-array (source, file offset): (1, 0), (3, 3), (7, 5), … • Edge-array (destination): 8, 193, 76420, 12, 872, 193, 212, 89139, … Problem 1: How to find in-edges of a vertex quickly? Note: We know the shard, but edges in random order.
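
Using the values on this slide, an out-edge lookup in the pointer-array / edge-array layout could look like the sketch below. The arrays are plain Python lists rather than files, and `out_edges` is a hypothetical helper name.

```python
from bisect import bisect_left

# Pointer-array: source ids and the offset of each source's first edge.
pointer_src = [1, 3, 7]
pointer_off = [0, 3, 5]
# Edge-array: destinations, grouped by source in pointer-array order.
edge_dst = [8, 193, 76420, 12, 872, 193, 212, 89139]


def out_edges(src):
    """Return the destinations of src's out-edges stored in this shard."""
    i = bisect_left(pointer_src, src)
    if i == len(pointer_src) or pointer_src[i] != src:
        return []  # src has no edges in this shard
    end = pointer_off[i + 1] if i + 1 < len(pointer_off) else len(edge_dst)
    return edge_dst[pointer_off[i]:end]
```

Out-edges come back as one contiguous slice, while the in-edges of a destination such as 193 are scattered across positions 1 and 5, which is the problem the slide raises.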

- 50. PAL: In-edge Linkage • Pointer-array (source, file offset): (1, 0), (3, 3), (7, 5), … • Edge-array (destination): 8, 193, 76420, 12, 872, 193, 212, 89139, …

- 51. PAL: In-edge Linkage Augmented linked list for in-edges • Pointer-array (source, file offset): (1, 0), (3, 3), (7, 5), … • Edge-array (destination, link): (8, 3339), (193, 3), (76420, 1092), (12, 289), (872, 40), (193, 2002), (212, 12), (89139, 22), … • + Index to the first in-edge for each vertex in interval. Problem 2: How to find out-edges quickly? Note: Sorted inside a shard, but partitioned across all shards.
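
The in-edge linkage can be illustrated as follows. This is a sketch under assumptions: links are stored as absolute positions with -1 as the end marker, and the link values are computed from the edge-array rather than copied from the slide, whose numbers appear to be illustrative. All function names are hypothetical.

```python
from bisect import bisect_right

pointer_src = [1, 3, 7]
pointer_off = [0, 3, 5]
edge_dst = [8, 193, 76420, 12, 872, 193, 212, 89139]


def build_in_edge_links(edge_dst):
    """first_in[v] = position of v's first in-edge; link[i] = next position
    with the same destination, or -1 at the end of the chain."""
    first_in, last_seen, link = {}, {}, [-1] * len(edge_dst)
    for pos, dst in enumerate(edge_dst):
        if dst in last_seen:
            link[last_seen[dst]] = pos
        else:
            first_in[dst] = pos
        last_seen[dst] = pos
    return first_in, link


def in_edge_sources(v, first_in, link):
    """Follow the chain for v and map each edge position back to its source."""
    sources, pos = [], first_in.get(v, -1)
    while pos != -1:
        sources.append(pointer_src[bisect_right(pointer_off, pos) - 1])
        pos = link[pos]
    return sources


first_in, link = build_in_edge_links(edge_dst)
```

Each hop in the chain is one lookup in the edge-array, so an in-edge query never has to scan the whole shard.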

- 52. PAL: Out-edge Queries • Pointer-array (source, file offset): (1, 0), (3, 3), (7, 5), … • Edge-array (destination, next-in-offset): (8, 3339), (193, 3), (76420, 1092), (12, 289), (872, 40), (193, 2002), (212, 12), (89139, 22), … Problem: the pointer-array can be big -- O(V). Binary search on disk is slow. • Option 1: Sparse index (in memory), e.g. entries at 256, 512, 1024, … • Option 2: Delta-coding with unary code (Elias-Gamma): completely in-memory
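
To make Option 2 concrete, here is a textbook Elias-gamma delta coder in Python. The slide does not give the exact bit layout GraphChi-DB uses, so this is only the standard scheme: gaps between consecutive values of a strictly increasing sequence are written as a unary length prefix followed by the binary digits. Bit strings are used instead of packed bits for readability.

```python
def gamma_encode(n):
    """Elias-gamma code of a positive integer, as a string of '0'/'1'."""
    if n < 1:
        raise ValueError("Elias-gamma encodes positive integers only")
    binary = bin(n)[2:]
    return "0" * (len(binary) - 1) + binary


def encode_increasing(values):
    """Delta-code a strictly increasing sequence; the first value is kept as-is."""
    gaps = (b - a for a, b in zip(values, values[1:]))
    return values[0], "".join(gamma_encode(g) for g in gaps)


def decode_increasing(first, bits):
    """Invert encode_increasing."""
    values, pos = [first], 0
    while pos < len(bits):
        zeros = 0
        while bits[pos + zeros] == "0":
            zeros += 1
        width = zeros + 1  # number of binary digits that follow the prefix
        gap = int(bits[pos + zeros:pos + zeros + width], 2)
        values.append(values[-1] + gap)
        pos += zeros + width
    return values


first, bits = encode_increasing([1, 3, 7])
```

Small gaps take very few bits, which is why a pointer-array over mostly consecutive vertex ids can shrink enough to stay in RAM.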

- 53. Experiment: Indices Bar chart: median latency in ms (Twitter graph, 1.5B edges) for in-edge and out-edge queries with no index, sparse index, and Elias-Gamma.

- 54. Queries: I/O costs • In-edge query: only one shard • Out-edge query: each shard that has edges • Trade-off: more shards → better locality for in-edge queries, worse for out-edge queries.

- 55. Edge Data & Searches Shard X - adjacency ‘weight‘: [float] ‘timestamp’: [long] ‘belief’: (factor) Edge (i) No foreign key required to find edge data! (fixed size) Note: vertex values stored similarly.

- 56. Efficient Ingest? Shards on Disk Buffers in RAM 57

- 57. Merging Buffers to Disk Shards on Disk Buffers in RAM 58

- 58. Merging Buffers to Disk (2) Shards on Disk Buffers in RAM Merge requires loading existing shard from disk Each edge will be rewritten always on a merge. Does not scale: number of rewrites: data size / buffer size = O(E) 59

- 59. Log-Structured Merge-tree (LSM) Ref: O'Neil, Cheng et al. (1996) • New edges enter the top shards, which have in-memory buffers; on-disk shards are merged downstream. • LEVEL 1 (youngest): interval 1, interval 2, interval 3, interval 4, …, interval P-1, interval P • LEVEL 2: intervals 1--4, …, intervals (P-4)--P • LEVEL 3 (oldest): intervals 1--16, … • In-edge query: one shard on each level • Out-edge query: all shards
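
A toy cost model shows why levels reduce rewriting. It is a deliberate simplification and not GraphChi-DB's implementation: each level is collapsed to a single shard, a merge is assumed to rewrite the whole target level, and `ingest` and `level_caps` are hypothetical names.

```python
def ingest(num_edges, buffer_size, level_caps):
    """Return the total number of edge writes to disk for a toy LSM ingest.

    level_caps[i] is the number of edges level i may hold before it is merged
    into level i + 1; the last level is unbounded. An empty level_caps models
    the no-LSM case, where every buffer flush rewrites the single on-disk shard.
    """
    levels = [0] * (len(level_caps) + 1)
    writes = 0
    for _ in range(num_edges // buffer_size):
        levels[0] += buffer_size
        writes += levels[0]  # flushing the buffer rewrites the top level
        for i, cap in enumerate(level_caps):
            if levels[i] > cap:
                levels[i + 1] += levels[i]
                writes += levels[i + 1]  # downstream merge rewrites the target
                levels[i] = 0
    return writes


without_lsm = ingest(16, 1, [])
with_lsm = ingest(16, 1, [4])
```

In this model 16 single-edge flushes cost 136 edge writes without levels and 76 with one extra level, and the gap widens as the data grows.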

- 60. Experiment: Ingest Plot: edges ingested (up to 1.5E+09) vs. time in seconds, for GraphChi-DB with LSM and GraphChi-No-LSM.

- 61. Advantages of PAL • Only sparse and implicit indices – Pointer-array usually fits in RAM with Elias-Gamma. – Small database size. • Columnar data model – Load only data you need. – Graph structure is separate from data. – Property graph model • Great insertion throughput with LSM – Tree can be adjusted to match workload.

- 63. GraphChi-DB: Implementation • Written in Scala • Queries & Computation • Online database All experiments shown in this talk done on Mac Mini (8 GB, SSD) 64 Source code and examples: http://github.com/graphchi

- 64. Comparison: Database Size Bar chart: database file size (twitter-2010 graph, 1.5B edges) for MySQL (data + indices), Neo4j, GraphChi-DB, and BASELINE. Baseline: 4 + 4 bytes / edge.

- 65. Comparison: Ingest

  | System | Time to ingest 1.5B edges |
  |---|---|
  | GraphChi-DB (ONLINE) | 1 hour 45 mins |
  | Neo4j (batch) | 45 hours |
  | MySQL (batch) | 3 hours 30 minutes (including index creation) |

  If running PageRank simultaneously, GraphChi-DB takes 3 hours 45 minutes.

- 66. Comparison: Friends-of-Friends Query Latency percentiles over 100K random queries, in milliseconds. • Small graph (68M edges), 50-percentile: GraphChi-DB 0.379, Neo4j 0.127 • Small graph, 99-percentile: GraphChi-DB 6.653, Neo4j 8.078 • Big graph (1.5B edges), 50-percentile: chart lists GraphChi-DB, Neo4j, and MySQL, with data labels 22.4 and 5.9 • Big graph, 99-percentile: GraphChi-DB 1264, GraphChi-DB + Pagerank 1631, MySQL 4776 See thesis for shortest-path comparison.

- 67. LinkBench: Online Graph DB Benchmark by Facebook • Concurrent read/write workload – But only single-hop queries (“friends”). – 8 different operations, mixed workload. – Best performance with 64 parallel threads • Each edge and vertex has: – Version, timestamp, type, random string payload.

  | | GraphChi-DB (Mac Mini) | MySQL + FB patch (server, SSD-array, 144 GB RAM) |
  |---|---|---|
  | Edge-update (95p) | 22 ms | 25 ms |
  | Edge-get-neighbors (95p) | 18 ms | 9 ms |
  | Avg throughput | 2,487 req/s | 11,029 req/s |
  | Database size | 350 GB | 1.4 TB |

  See full results in the thesis.

- 68. Summary of Experiments • Efficient for mixed read/write workload. – See Facebook LinkBench experiments in thesis. – LSM-tree trades off read performance (but can be adjusted). • State-of-the-art performance for graphs that are much larger than RAM. – Neo4j's linked-list data structure is good for RAM. – DEX performs poorly in practice. More experiments in the thesis!

- 69. Discussion Greater Impact Hindsight Future Research Questions 70

- 71. Impact: “Big Data” Research • GraphChi's OSDI 2012 paper has received over 85 citations in just 18 months (Google Scholar). – Two major direct descendant papers in top conferences: • X-Stream: SOSP 2013 • TurboGraph: KDD 2013 • Challenging the mainstream: – You can do a lot on just a PC → focus on the right data structures and computational models.

- 72. Impact: Users • The GraphChi versions (C++, Java, -DB) have gained a lot of users – Currently ~50 unique visitors / day. • Enables 'everyone' to tackle big graph problems – Especially the recommender toolkit (by Danny Bickson) has been very popular. – Typical users: students, non-systems researchers, small companies…

- 73. Impact: Users (cont.) “I work in a [EU country] public university. I can't use a distributed computing cluster for my research ... it is too expensive. Using GraphChi I was able to perform my experiments on my laptop. I thus have to admit that GraphChi saved my research. (...)”

- 75. What is GraphChi Optimized for? • Original target algorithm: Belief Propagation on Probabilistic Graphical Models. 1. Changing the value of an edge (both in- and out!). 2. Computation processes the whole graph, or most of it, on each iteration. 3. Random access to all of a vertex's edges. – Vertex-centric vs. edge-centric. 4. Async/Gauss-Seidel execution.

- 76. GraphChi Not Good For • Very large vertex state. • Traversals, and two-hop dependencies. – Or dynamic scheduling (such as Splash BP). • High diameter graphs, such as planar graphs. – Unless the computation itself has short-range interactions. • Very large number of iterations. – Neural networks. – LDA with Collapsed Gibbs sampling. • No support for implicit graph structure. + Single PC performance is limited. 77

- 77. GraphChi (Parallel Sliding Windows) GraphChi-DB (Partitioned Adjacency Lists) Online graph updates Batch comp. Evolving graph Incremental comp. DrunkardMob: Parallel Random walk simulation GraphChi^2 Triangle Counting Item-Item Similarity PageRank SALSA HITS Graph Contraction Minimum Spanning Forest k-Core Graph Computation Shortest Path Friends-of-Friends Induced Subgraphs Neighborhood query Edge and vertex properties Link prediction Weakly Connected Components Strongly Connected Components Community Detection Label Propagation Multi-BFS Graph sampling Graph Queries Matrix Factorization Loopy Belief Propagation Co-EM Graph traversal Versatility of PSW and PAL 78

- 78. Future Research Directions • Distributed Setting 1. Distributed PSW (one shard / node) – PSW is inherently sequential – Low bandwidth in the Cloud 2. Co-operating GraphChi(-DB) instances connected with a Parameter Server • New graph programming models and tools – Vertex-centric programming sometimes too local: for example, two-hop interactions and many traversals are cumbersome. – Abstractions for learning graph structure; implicit graphs. – Hard to debug, especially async → better tools needed. • Graph-aware optimizations to GraphChi-DB. – Buffer management. – Smart caching. – Learning configuration.

- 79. WHAT IF WE HAVE PLENTY OF MEMORY? Semi-External Memory Setting 80

- 80. Observations • The I/O performance of PSW is only weakly affected by the amount of RAM. – Good: works with very little memory. – Bad: does not benefit from more memory • Simple trick: cache some data. • Many graph algorithms have O(V) state. – Update function accesses neighbor vertex state. • Standard PSW: 'broadcast' vertex value via edges. • Semi-external: store vertex values in memory.

- 81. Using RAM efficiently • Assume that enough RAM to store many O(V) algorithm states in memory. – But not enough to store the whole graph. Graph working memory (PSW) disk computational state Computation 1 state Computation 2 state RAM 82

- 82. Parallel Computation Examples • DrunkardMob algorithm (Chapter 5): – Store billions of random walk states in RAM. • Multiple Breadth-First-Searches: – Analyze neighborhood sizes by starting hundreds of random BFSes. • Compute in parallel many different recommender algorithms (or with different parameterizations). – See Mayank Mohta and Shu-Hao Yu's Master's project.

- 83. CONCLUSION 84

- 84. Summary of Published Work • GraphLab: Parallel Framework for Machine Learning (with J. Gonzalez, Y. Low, D. Bickson, C. Guestrin), UAI 2010 • Distributed GraphLab: Framework for Machine Learning and Data Mining in the Cloud (same co-authors), VLDB 2012 • GraphChi: Large-scale Graph Computation on Just a PC (with C. Guestrin, G. Blelloch), OSDI 2012 • DrunkardMob: Billions of Random Walks on Just a PC, ACM RecSys 2013 • Beyond Synchronous: New Techniques for External Memory Graph Connectivity and Minimum Spanning Forest (with Julian Shun, G. Blelloch), SEA 2014 • GraphChi-DB: Simple Design for a Scalable Graph Database – on Just a PC (with C. Guestrin), submitted / arXiv • Parallel Coordinate Descent for L1-regularized Loss Minimization (Shotgun) (with J. Bradley, D. Bickson, C. Guestrin), ICML 2011 First author papers in bold. THESIS

- 85. Summary of Main Contributions • Proposed Parallel Sliding Windows, a new algorithm for external memory graph computation GraphChi • Extended PSW to design Partitioned Adjacency Lists to build a scalable graph database GraphChi-DB • Proposed DrunkardMob for simulating billions of random walks in parallel. • Analyzed PSW and its Gauss-Seidel properties for fundamental graph algorithms New approach for EM graph algorithms research. 86 Thank You!

- 87. Economics Equal throughput configurations (based on OSDI’12)

  | | GraphChi (40 Mac Minis) | PowerGraph (64 EC2 cc1.4xlarge) |
  |---|---|---|
  | Investments | 67,320 $ | - |
  | Operating costs per node, hour | 0.03 $ | 1.30 $ |
  | Operating costs, cluster, hour | 1.19 $ | 52.00 $ |
  | Operating costs, daily | 28.56 $ | 1,248.00 $ |

  It takes about 56 days to recoup Mac Mini investments. Assumptions: - Mac Mini: 85 W (typical servers 500-1000 W) - Most expensive US energy: 35c / kWh
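
The Mac Mini figures on this slide follow from the stated assumptions, as the short calculation below shows. The EC2 cluster-hour price is taken directly from the slide rather than recomputed; variable names are illustrative.

```python
import math

WATTS_PER_MAC_MINI = 85
DOLLARS_PER_KWH = 0.35
NUM_MAC_MINIS = 40
INVESTMENT = 67_320.0
EC2_CLUSTER_HOUR = 52.00  # as stated on the slide

mini_node_hour = WATTS_PER_MAC_MINI / 1000 * DOLLARS_PER_KWH  # kW * $/kWh
mini_cluster_hour = NUM_MAC_MINIS * mini_node_hour
mini_daily = 24 * mini_cluster_hour
ec2_daily = 24 * EC2_CLUSTER_HOUR

# Days until the daily savings cover the hardware purchase.
payback_days = INVESTMENT / (ec2_daily - mini_daily)
```

The break-even point is a little over 55 days, which rounds up to the roughly 56 days quoted on the slide.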

- 88. PSW for In-memory Computation • External memory setting: – Slow memory = hard disk / SSD – Fast memory = RAM • In-memory: – Slow = RAM – Fast = CPU caches Small (fast) Memory size: M Large (slow) Memory size: ‘unlimited’ B CPU block transfers Does PSW help in the in-memory setting? 89

- 89. PSW for in-memory Plot: Min-label Connected Components (edge-values; Mac Mini), edges processed / sec vs. working set memory footprint (MBs), with the L3 cache size (3 MB) marked; series: update function execution throughput, total throughput, baseline total throughput, baseline update function execution. Appendix: PSW outperforms (slightly) Ligra*, the fastest in-memory graph computation system, on Pagerank with the Twitter graph, including preprocessing steps of both systems. *: Julian Shun, Guy Blelloch, PPoPP 2013

- 90. Remarks (sync vs. async) • Bulk-Synchronous is embarrassingly parallel – But needs twice the amount of space • Async/G-S helps with high diameter graphs • Some algorithms converge much better asynchronously – Loopy BP, see Gonzalez et al. (2009) – Also Bertsekas & Tsitsiklis, Parallel and Distributed Computation (1989) • Asynchronous is sometimes difficult to reason about and debug • Asynchronous can be used to implement BSP

- 91. I/O Complexity • See the paper for theoretical analysis in the Aggarwal-Vitter I/O model. – Worst-case only 2x best-case. • Intuition (intervals 1..P over vertices 1..|V|, with shard(1)..shard(P)): an edge whose endpoints v1 and v2 lie in the same interval is loaded from disk only once / iteration. An edge spanning intervals is loaded twice / iteration.

- 92. Impact of Graph Structure • Algorithms with long-range information propagation need a relatively small diameter; otherwise they would require too many iterations • Per-iteration cost not much affected • Can we optimize partitioning? – Could help thanks to Gauss-Seidel (faster convergence inside “groups”); topological sort – Likely too expensive to do on a single PC

- 93. Graph Compression: Would it help? • Graph compression methods (e.g. Blelloch et al., WebGraph Framework) can be used to compress edges to 3-4 bits / edge (web), ~ 10 bits / edge (social) – But they require graph partitioning, which requires a lot of memory. – Compression of large graphs can take days (personal communication). • Compression is problematic for evolving graphs and associated data. • Could GraphChi be used to compress graphs? – Layered label propagation (Boldi et al. 2011)

- 94. Previous research on (single computer) Graph Databases • 1990s, 2000s saw interest in object-oriented and graph databases: – GOOD, GraphDB, HyperGraphDB… – Focus was on modeling, graph storage on top of relational DB or key-value store • RDF databases – Most do not use graph storage but store triples as relations + use indexing. • Modern solutions have proposed graph-specific storage: – Neo4j: doubly linked list – TurboGraph: adjacency list chopped into pages – DEX: compressed bitmaps (details not clear) 95

- 95. LinkBench 96

- 96. Comparison to FB (cont.) • GraphChi load time 9 hours, FB's 12 hours • GraphChi database about 250 GB, FB > 1.4 terabytes – However, about 100 GB explained by different variable data (payload) size • Facebook/MySQL via JDBC, GraphChi embedded – But MySQL native code, GraphChi-DB Scala (JVM) • Important CPU bound bottleneck in sorting the results for high-degree vertices

- 97. LinkBench: GraphChi-DB performance / Size of DB Plot: requests / sec vs. number of nodes (1.00E+07 to 1.00E+09). Why not even faster when everything is in RAM? → computationally bound requests

- 98. Possible Solutions 1. Use SSD as a memory-extension? [SSDAlloc, NSDI’11] → Too many small objects, need millions / sec. 2. Compress the graph structure to fit into RAM? [WebGraph framework] → Associated values do not compress well, and are mutated. 3. Cluster the graph and handle each cluster separately in RAM? → Expensive; the number of inter-cluster edges is big. 4. Caching of hot nodes? → Unpredictable performance.

- 99. Number of Shards • If P is in the “dozens”, there is not much effect on performance.

- 100. Multiple hard-drives (RAIDish) • GraphChi supports striping shards to multiple disks → parallel I/O. Bar chart: seconds / iteration for Pagerank and Conn. components with 1, 2, and 3 hard drives. Experiment on a 16-core AMD server (from year 2007).

- 101. Bottlenecks Stacked bar chart: Connected Components on Mac Mini / SSD with 1, 2, and 4 threads, split into disk IO, graph construction, and exec. updates. • Cost of constructing the sub-graph in memory is almost as large as the I/O cost on an SSD – Graph construction requires a lot of random access in RAM → memory bandwidth becomes a bottleneck.

- 102. Bottlenecks / Multicore Experiment on MacBook Pro with 4 cores / SSD. • Computationally intensive applications benefit substantially from parallel execution. • GraphChi saturates SSD I/O with 2 threads. Bar charts: runtime (seconds) vs. number of threads (1, 2, 4), split into loading and computation, for Matrix Factorization (ALS) and Connected Components.

- 104. Experiment: Query latency See thesis for I/O cost analysis of in/out queries. 105

- 105. Example: Induced Subgraph Queries • Induced subgraph for vertex set S contains all edges in the graph that have both endpoints in S. • Very fast in GraphChi-DB: – Sufficient to query for out-edges – Parallelizes well → multi-out-edge-query • Can be used for statistical graph analysis – Sample induced neighborhoods, induced FoF neighborhoods from graph

- 106. Vertices / Nodes • Vertices are partitioned similarly as edges – Similar “data shards” for columns • Lookup/update of vertex data is O(1) • No merge tree here: Vertex files are “dense” – Sparse structure could be supported 1 a a+1 2a Max-id

- 107. ID-mapping • Vertex IDs mapped to internal IDs to balance shards: – Interval length constant a (Diagram: internal IDs 0, 1, 2, …, a, a+1, a+2, …, 2a, 2a+1, …; intervals 0, 1, 2, …, 255; original IDs 0, 1, 2, …, 255, 256, 257, 258, …, 511.)
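
One plausible reading of this diagram is a round-robin mapping: consecutive original IDs are dealt out to consecutive intervals, and each interval occupies a fixed-length range of internal IDs. The sketch below encodes that reading; the formula and the function names are assumptions, not taken from the slide.

```python
def to_internal(original_id, num_intervals, interval_len):
    """Map an original vertex id to an internal id (round-robin over intervals)."""
    interval = original_id % num_intervals
    position = original_id // num_intervals
    if position >= interval_len:
        raise ValueError("original id does not fit in the configured intervals")
    return interval * interval_len + position


def to_original(internal_id, num_intervals, interval_len):
    """Inverse of to_internal."""
    interval, position = divmod(internal_id, interval_len)
    return position * num_intervals + interval


P, a = 256, 1000  # 256 intervals of constant length a = 1000 (example values)
```

Under this mapping original IDs 0, 1, 2 land at internal IDs 0, a, 2a, and original ID 256 lands at internal ID 1, so densely numbered regions of the original ID space are spread evenly over the shards.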

- 108. What if we have a Cluster? Graph working memory (PSW) disk computational state Computation 1 state Computation 2 state RAM Trade latency for throughput! 109

- 109. Graph Computation: Research Challenges 1. Lack of truly challenging (benchmark) applications 2. … which is caused by the lack of good data available to academics: big graphs with metadata – Industry co-operation → but problem with reproducibility – Also: it is hard to ask good questions about graphs (especially with just structure) 3. Too much focus on performance → more important to enable “extracting value”

- 110. Random walk in an in-memory graph • Compute one walk at a time (multiple in parallel, of course): parfor walk in walks: for i=1 to : vertex = walk.atVertex(); walk.takeStep(vertex.randomNeighbor()) DrunkardMob - RecSys '13
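
A sequential Python version of this loop might look as follows. The adjacency-dict representation, the `num_steps` parameter, and the early stop at dead ends are choices made for the sketch; the slide's `parfor` is replaced by a plain loop.

```python
import random


def random_walks(adjacency, sources, num_steps, seed=0):
    """Advance one walk at a time over an in-memory adjacency dict.

    Returns the vertex at which each walk ends.
    """
    rng = random.Random(seed)
    end_points = []
    for vertex in sources:
        for _ in range(num_steps):
            neighbors = adjacency.get(vertex, [])
            if not neighbors:  # dead end: stop this walk early
                break
            vertex = rng.choice(neighbors)
        end_points.append(vertex)
    return end_points


cycle = {0: [1], 1: [2], 2: [0]}
ends = random_walks(cycle, sources=[0, 1], num_steps=4)
```

Every step needs random access to the current vertex's neighbor list, which is cheap in RAM and is exactly what becomes expensive once the graph lives on disk.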

- 111. Problem: What if Graph does not fit in memory? • Distributed graph systems: – Each hop across partition boundary is costly. • Disk-based “single-machine” graph systems: – “Paging” from disk is costly. (This talk) Image: Twitter network visualization, by Akshay Java, 2009

- 112. Random walks in GraphChi • DrunkardMob algorithm – Reverse thinking ForEach interval p: walkSnapshot = getWalksForInterval(p) ForEach vertex in interval(p): mywalks = walkSnapshot.getWalksAtVertex(vertex.id) ForEach walk in mywalks: walkManager.addHop(walk, vertex.randomNeighbor()) Note: Need to store only current position of each walk!
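
The "reverse thinking" in the pseudocode above can be sketched in Python: instead of following each walk through the graph, the graph is visited one vertex interval at a time and every walk currently sitting in that interval is moved forward. This is a simplified in-memory illustration, not the DrunkardMob implementation; `drunkardmob_pass`, the `(first, last)` interval tuples and the adjacency dictionary are hypothetical stand-ins for the slide's `getWalksForInterval` and `walkManager.addHop`.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def drunkardmob_pass(adjacency: Dict[int, List[int]],
                     intervals: List[Tuple[int, int]],
                     walk_positions: List[int],
                     rng: random.Random) -> List[int]:
    """One pass over all intervals; only each walk's current vertex is stored."""
    for first, last in intervals:
        # Snapshot of the walks that sit in this interval right now.
        snapshot = defaultdict(list)
        for walk_id, vertex in enumerate(walk_positions):
            if first <= vertex <= last:
                snapshot[vertex].append(walk_id)
        # Visit the interval's vertices in order and advance their walks.
        for vertex in range(first, last + 1):
            neighbors = adjacency.get(vertex, [])
            for walk_id in snapshot.get(vertex, []):
                if neighbors:
                    walk_positions[walk_id] = rng.choice(neighbors)
    return walk_positions


# Hypothetical directed 4-cycle split into two intervals.
cycle = {0: [1], 1: [2], 2: [3], 3: [0]}
intervals = [(0, 1), (2, 3)]
positions = drunkardmob_pass(cycle, intervals, [0, 2], random.Random(0))
```

Because the snapshot is taken per interval, a walk that hops forward into a later interval can be advanced again within the same pass. Scanning all walks for every interval keeps the sketch short; a real system would bucket the walks by interval instead.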

Editor's notes

- Let me first put this work on the map of computer science. This work really started as part of the GraphLab project by Carlos, which I joined when I started at CMU. Our idea was to create a parallel framework for machine learning, and we realized that many ML problems can be presented as graphs. Since then, the interest has also extended into applications in graph analysis and mining. But if we look at the core contributions of this work, they are really quite traditional computer science: external memory algorithms and systems research. So this work really spans quite a few domains. Let me now motivate this work.

- Let’s open up my thesis title one by one. First: why are graphs interesting objects to study?

- Graphs are interesting by themselves. In recent years we have seen the rise of social networks such as Facebook and Twitter, and of course the web. But graphs are also a useful representation for many machine learning problems, such as recommendations: for example, Amazon’s consumer-product relations can be presented as a graph. The GraphLab project started with the insight that many problems in machine learning can be presented as problems on sparse graphs. My work is a continuation of that, and recently we have started looking more into the analysis of graphs themselves. Big Graphs are of course a special breed of “Big Data”: they encode structure, and we want to somehow extract the information encoded in the structure. It is a hard problem from many points of view. Just stating the problems is often very hard. Today we talk mostly about the systems aspects.

- Let me ask it the other way round. Why would you want to use a cluster?

- Problems with Big Graphs are really quite different from those with the rest of Big Data. Why is that? Well, when we compute on graphs, we are interested in the structure. Facebook just last week announced that their network had about 140 billion connections. I believe it is the biggest graph out there. However, storing this network on a disk would take only roughly 1 terabyte of space. It is tiny! Smaller than tiny! The reason why Big Graphs are so hard from a systems perspective is therefore in the computation. Joey already talked about this, so I will be brief. These so-called natural graphs are challenging because they have a very asymmetric structure. Look at the picture of the Twitter graph. Let me give an example: Lady Gaga has 30 million followers, I have only 300 of them, and my advisor does not have even thirty. This extreme skew makes distributing the computation very hard. This is why we have specialized graph systems. It is hard to split the problem into components of even size that are not very connected to each other. PowerGraph has a neat solution to this. But let me now introduce a completely different approach to the problem.

- Now we can state the goal of this research, or the research problem we set for ourselves when we started this project. The goal has some vagueness in it, so let me briefly explain. By reasonable time, I mean the following: if there have been papers reporting numbers for other systems, I assume that at least the authors were happy with the performance, so if our system can do the same in the same ballpark, it is likely reasonable, given the lower costs here. Now, as a consumer PC we used a Mac Mini. Not the cheapest computer there is, especially with the SSD, but still quite a small package. We have since also run GraphChi on cheaper hardware, on a hard drive instead of an SSD, and can say it provides good performance on the lower end as well. But for this work, we used this computer. One outcome of this research is a single-computer comparison point for computing on very large graphs. Now researchers who develop large-scale graph computation platforms can compare their performance to this, and analyze the relative gain achieved by distributing the computation. Before, there has not really been an understanding of whether many proposed distributed frameworks are efficient or not.

- Let’s now discuss what is the computational setting of this work. Let’s first introduce the basic computational model.

- “Think like a vertex” was used by the Google Pregel paper, and also adopted by GraphLab. Historically, a similar idea was used in systolic computation and Connection Machine architectures, but with regular networks. Now, we have a data model where we associate a value with every vertex and edge, shown in the picture. As the primary computation model, the user defines an update function that operates on a vertex and can access the values of the neighboring edges (shown in red). That is, we modify the data directly in the graph, one vertex at a time. Of course, we can parallelize this, taking into account that neighboring vertices that share an edge should not be updated simultaneously (in general).
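
The vertex-centric model described in this note can be illustrated with a small Python sketch. The classes and the example update function below are hypothetical and are not the GraphChi API; they only show values living on vertices and edges, and an update function that reads and writes the edges adjacent to one vertex.

```python
from typing import Callable, List


class Edge:
    def __init__(self, src: int, dst: int, value: float):
        self.src, self.dst, self.value = src, dst, value


class Vertex:
    def __init__(self, vid: int, value: float = 0.0):
        self.id, self.value = vid, value
        self.in_edges: List[Edge] = []
        self.out_edges: List[Edge] = []


def build_graph(num_vertices: int, edge_list, edge_value: float = 1.0) -> List[Vertex]:
    vertices = [Vertex(i) for i in range(num_vertices)]
    for src, dst in edge_list:
        e = Edge(src, dst, edge_value)
        vertices[src].out_edges.append(e)
        vertices[dst].in_edges.append(e)
    return vertices


def run_iteration(vertices: List[Vertex], update: Callable[[Vertex], None]) -> None:
    """Apply the user-defined update function to one vertex at a time."""
    for v in vertices:
        update(v)


def spread_update(v: Vertex) -> None:
    """Example update: gather from in-edges, scatter evenly to out-edges."""
    v.value = sum(e.value for e in v.in_edges)
    for e in v.out_edges:
        e.value = v.value / len(v.out_edges)


vertices = build_graph(3, [(0, 1), (0, 2), (1, 2), (2, 0)])
run_iteration(vertices, spread_update)
values = [v.value for v in vertices]
```

Since the data is modified directly in the graph, vertex 1 already reads the edge value that vertex 0 wrote earlier in the same iteration.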

- Note that bigger systems can also benefit from our work, since we can move the graph out of memory and use the memory for algorithmic state. Consider the Yahoo-web graph, which has 1.4B vertices: if we associate a 4-byte float with every vertex, that alone takes almost 6 gigabytes of memory. On a laptop with 8 gigabytes, you generally do not have enough memory for the rest. The research contributions of this work are mostly in this setting, but the system, GraphChi, is actually very useful in settings with more memory as well. It is the flexibility that counts.
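
The memory estimate in this note is simple arithmetic, spelled out below. The vertex count and value size come from the note; the variable names are only for illustration.

```python
num_vertices = 1_400_000_000   # Yahoo-web graph, 1.4B vertices
bytes_per_value = 4            # one 4-byte float per vertex

total_bytes = num_vertices * bytes_per_value
total_gb = total_bytes / 1e9   # decimal gigabytes

print(f"{total_gb:.1f} GB for one float per vertex")
```

That is 5.6 GB for a single per-vertex value, before any edges are loaded.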

- I will now briefly demonstrate why disk-based graph computation was not a trivial problem. Perhaps we can assume it wasn’t, because no such system as stated in the goals clearly existed. But it makes sense to analyze why solving the problem required a small innovation, worthy of an OSDI publication. The main problem has been stated on the slide: random access, i.e., when you need to read many times from many different locations on disk, is slow. This is especially true with hard drives: seek times are several milliseconds. On SSD, random access is much faster, but still a far cry from the performance of RAM. Let’s now study this a bit.

- Now we finally move to our main contribution. We call it Parallel Sliding Windows, for a reason that will become apparent soon.

- The basic approach is that PSW loads one sub-graph of the graph at a time, computes the update functions for it, and saves the modifications back to disk. We will show soon how the sub-graphs are defined, and how we do this with almost no random access. Now, we usually use this for ITERATIVE computation. That is, we process the whole graph in sequence to finish a full iteration, and then move to the next one.

- How are the sub-graphs defined?

- Let us show an example

- Writes are symmetric. So when the subgraph is loaded into memory, we execute the update functions on the vertices and then store the modified values back to disk.

- Before we go to see the experimental results, let me take an aside and talk about one special property of the PSW model. That is, it is not synchronous. Let me explain.

- I use the term Gauss-Seidel since asynchronous has so many overloaded meanings.

- Let’s look back at this picture for the second subgraph. Look at the window on top of the first shard. This data was part of the first shard, which was loaded fully in memory on the first iteration. Thus, the new values are immediately visible to the computation.

- Let’s look at the simplest possible example. We have here a chain graph with vertices numbered from 1 to 5, in random order. The idea of this algorithm is simple: each vertex chooses the label of its neighbor that is smallest. Let’s see how this computes synchronously. It is easy to see that it takes as many iterations to converge as the diameter of the graph: in this case four. Diameter is the longest distance between any two vertices.

- But in the Gauss-Seidel model, we update the vertices in their order, and each update sees the latest value of its neighbors. If a neighbor was already updated on the current iteration, the update sees that new value. In this case, it takes only 2 iterations to converge. In the thesis we derive an exact formula for the chain graph on a random schedule, shown here. G-S takes about 60% as many iterations as synchronous. What about more interesting graphs?
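
The difference between the two models can be reproduced with a short simulation of minimum-label propagation. This is a sketch: the slide's exact vertex arrangement is not given in these notes, so the chain used here (1 - 2 - 4 - 3 - 5, written with zero-based indices) is a hypothetical example chosen so that the iteration counts match the numbers quoted above.

```python
from typing import List, Tuple


def min_label_sync(labels: List[int], neighbors: List[List[int]]) -> Tuple[List[int], int]:
    """Synchronous model: every update reads the previous iteration's values."""
    labels = list(labels)
    iterations = 0
    while True:
        new = [min([labels[v]] + [labels[u] for u in neighbors[v]])
               for v in range(len(labels))]
        if new == labels:
            return labels, iterations
        labels = new
        iterations += 1


def min_label_gauss_seidel(labels: List[int], neighbors: List[List[int]]) -> Tuple[List[int], int]:
    """Gauss-Seidel model: updates run in vertex order and see the latest values."""
    labels = list(labels)
    iterations = 0
    while True:
        changed = False
        for v in range(len(labels)):
            best = min([labels[v]] + [labels[u] for u in neighbors[v]])
            if best != labels[v]:
                labels[v] = best   # immediately visible to later updates
                changed = True
        if not changed:
            return labels, iterations
        iterations += 1


# Hypothetical chain 1 - 2 - 4 - 3 - 5 (vertex i has initial label i + 1).
neighbors = [[1], [0, 3], [3, 4], [1, 2], [2]]
initial = [1, 2, 3, 4, 5]
sync_labels, sync_iters = min_label_sync(initial, neighbors)
gs_labels, gs_iters = min_label_gauss_seidel(initial, neighbors)
```

Both functions count only the iterations in which some label changed. On this chain the synchronous version needs four of them and the Gauss-Seidel version two.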

- For multi-dimensional meshes, the benefit of G-S is even bigger. These numbers are from simulations. For natural graphs, the benefit is about the same as with chains. It is because those graphs tend to have whiskers that are chain-like. Unfortunately, I could not derive theoretically the convergence speed for graphs other than the chain. This is a fun open question.

- Now we move to analyze how the system itself works: how it scales and what the bottlenecks are.

- Because our goal was to make large scale graph computation available to a large audience, we also put a lot of effort in making GraphChi easy to use.

- The same graphs are typically used for benchmarking distributed graph processing systems.

- Unfortunately the literature is abundant with PageRank experiments, but not much more. PageRank is really not that interesting, and quite simple solutions work. Nevertheless, we get some idea. Pegasus is a Hadoop-based graph mining system, and it has been used to implement a wide range of different algorithms. The best comparable result we got was for a machine learning algorithm, “belief propagation”. A Mac Mini can roughly match a 100-node cluster running Pegasus. This also highlights the inefficiency of MapReduce. That said, the Hadoop ecosystem is pretty solid, and people choose it for its simplicity. Matrix factorization has been one of the core GraphLab applications, and here we show that our performance is pretty good compared to GraphLab running on a slightly older 8-core server. Last, triangle counting, which is a heavy-duty social network analysis algorithm. A paper in VLDB a couple of years ago introduced a Hadoop algorithm for counting triangles. This comparison is a bit stunning. But I remind you that these results are prior to PowerGraph: at OSDI, the map changed totally! However, we are confident in saying that GraphChi is fast enough for many purposes. And indeed, it can solve problems as big as the other systems have been shown to execute. It is limited by the disk space.

- PowerGraph really resets the speed comparisons. However, the point about ease of use remains, and GraphChi likely provides sufficient performance for most people. But if you need peak performance and have the resources, PowerGraph is the answer. GraphChi still has a role as the development platform for PowerGraph.

- X5550 (bigbro5): SPECint rate 2006 = 242. E7-8870 (Ligra experiments): SPECint rate = 1890?

- Here, in this plot, the x-axis is the size of the graph as the number of edges. All the experiment graphs are presented here. On the y-axis, we have the performance: how many edges are processed per second. The dots represent different experiments (averaged), and the red line is a least-squares fit. On SSD, the throughput remains very nearly constant when the graph size increases. Note that the structure of the graph actually has an effect on performance, but only by a factor of two. The largest graph, yahoo-web, has a challenging structure, and thus its results are comparatively worse.

- Problems: current graph databases perform poorly when the size of data >> memory; RDBMS has high storage overhead because of indices; no support for graph computation; out-edge / in-edge problem.

- An alternative would be to have an index over the in-edges. But this has better locality.

- Similar to LevelDB and RocksDB

- Neo4j’s linked list data structure works well in-memory, but is slow when RAM is not sufficient. GraphChi-DB is not optimized for in-memory graphs but still provides OK performance. Similar results for shortest paths. Note: MySQL is native code, GraphChi-DB is Java/Scala. This probably explains the difference in small queries. Also, the fact that GraphChi-DB partitions the adjacency lists, while MySQL stores them clustered by source-id, gives some benefit to MySQL. But for heavy hitters, GraphChi-DB wins: this is probably because indices fit in memory.

- I will not go through this, but let me talk about an example.

- About linear performance after problem clearly too big for RAM

- Now there are some potential remedies we pretended to consider. Compressing the graph and caching hot nodes work in many cases. For example, for graph traversals, where you walk in the graph in a random manner, they are a good solution, and there is other work on that. But for our computational model, where we actually need to modify the values of the edges, these won’t be sufficient given the constraints. Of course, if you have one terabyte of memory, you do not need any of that.

- Amdahl’s law
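
For reference, the standard statement of the law is given below. The symbols are the usual textbook ones and do not come from the slides: p is the fraction of the runtime that can be parallelized and n is the number of threads.

```latex
S(n) = \frac{1}{(1 - p) + \dfrac{p}{n}},
\qquad
\lim_{n \to \infty} S(n) = \frac{1}{1 - p}
```

Read against the multicore experiment, the loading phase plays the role of the part that does not speed up with more threads once the SSD is saturated, so the attainable speedup is bounded by the share of time spent in computation.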

- So how would we do this if we could fit the graph in memory?