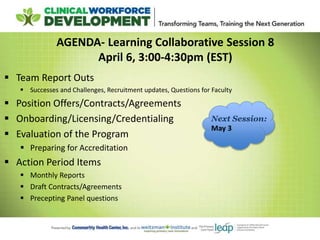

Learning Collaborative Session 8 Agenda

- 1. AGENDA: Learning Collaborative Session 8, April 6, 3:00-4:30pm (EST)
    - Team Report Outs: Successes and Challenges, Recruitment updates, Questions for Faculty
    - Position Offers/Contracts/Agreements
    - Onboarding/Licensing/Credentialing
    - Evaluation of the Program: Preparing for Accreditation
    - Action Period Items: Monthly Reports; Draft Contracts/Agreements; Precepting Panel questions
    - Next Session: May 3

- 2. Team Report Outs Successes and Challenges, Recruitment updates, Questions for Faculty

- 3. Offers, Contracts and Agreements: OFFERS
    - Determine how and when to communicate offers. Offers and declinations are made using the ranking log.
    - Determine the length of time allowed for a decision; at CHC this is 48 hours.
    - In the case of a “tie”, interviewers must discuss the candidates and choose.
    - Prepare for “back up offers” and a waiting list.

- 4. Contracts and Agreements
    - Immediately following the offer should be a formal employment contract.
    - Determine the method of delivery (electronic or direct mail) and the length of time to return the signed contract.
    - The contract can be a modified version of your organization’s existing employment contract. Items that may differ in the contract include:
        - Term of the contract: 12-month residency program
        - Practice location
        - Salary
        - PTO
        - CME
        - Employment requirement post residency year: determine length of commitment and subsequent year salaries.

- 5. NEXT STEPS: Onboarding, tracking incoming resident credentialing, licensure certification material Sample Contract

- 6. Licensing and Credentialing
    - Offers have been made and accepted: start immediately!
    - The process is a domino effect and timelines are short.
    - Follow your organization’s general policy; adjust as needed.
    - Be prepared for delays depending on the states candidates come from.
    - Guide your candidates through the process and keep track of their status.

- 7. Licensing and Credentialing
    - NP Residents
        1. Sit for and pass boards
        2. Apply for state RN license
        3. Apply for state APRN license
        4. Apply for state controlled substance license
        5. Apply for federal DEA license
    - Post Doc Residents
        1. Post docs are unlicensed and work under the supervisor’s license.
        2. Verify that work under another’s license is a billable service in your state. There is wide variability. In CT, Husky (Medicaid) is billable but most private insurances are not.
        3. Be aware of licensing requirements in your state, or in the state where the post doc wishes to seek licensure, and provide appropriate supervision and documentation.

- 8. Onboarding
    - In addition to the licensing and credentialing process, Residents must be onboarded.
    - Leverage your HR department to help apply the organization’s process for onboarding all new staff.
    - HR connects with Residents prior to the start date and is also invited to orientation.
    - Residents are employees and their onboarding should look very similar.
    - We will cover orientation in more detail later!

- 9. Program Evaluation: Improving Performance Presented by Candice Rettie, PhD NCA Webinar April 2017

- 10. Overview of the Session
    - Definitions and Process of Good Program Evaluation
    - How to Design Meaningful Evaluation
        - Integrated Throughout the Program
        - Recruitment to Graduation
        - Creates explicit expectations for trainee
        - Documents programmatic success
        - Fosters improvement, positive growth, creativity and innovation
    - Characteristics of Useful Evidence

- 11. Learning Objectives
    - Knowledge:
        - Understand the purpose of evaluation
        - Know the characteristics of good evaluation
        - Understand the process of evaluation
        - Understand the connection with curriculum
    - Attitude:
        - Embrace the challenge
        - Value the outcomes
    - Skills:
        - To be gained by independent / group work focused on local training program

- 12. Definitions:
    - Evaluation: systematic investigation of the merit, worth, or significance of an effort.
    - Program evaluation: evaluation of specific projects and activities that target audiences may take part in.
    - Stakeholders: those who care about the program or effort.
    - Approach: practical, ongoing evaluation involving program participants, community members, and other stakeholders.
    - Importance:
        1. Helping to clarify program plans
        2. Improving communication among participants and partners
        3. Gathering the feedback needed to improve and be accountable for program outcomes/effectiveness
        4. Gaining insight about best practices and innovation
        5. Determining the impact of the program
        6. Empowering program participants and contributing to organizational growth

- 13. Four Basic Steps to Program Evaluation
    1. Develop a Written Plan Linked to Curriculum
    2. Collect Data
    3. Analyze Data
    4. Communicate and Improve

- 14. Fitting the Pieces Together: Program Evaluation (diagram labels: Program Curriculum; Preceptor / Faculty / Staff; Trainee; Institution; Overall Program)

- 15. Program Evaluation Feedback Loops
    - Trainee performance
    - Instructor and staff performance
    - Program curriculum performance
    - Programmatic and Institutional performance

- 16. Evaluation Process: How Do You Do It? Steps in Evaluation:
    - Engage stakeholders
    - Describe the program
    - Focus the evaluation design
    - Gather credible evidence
    - Justify conclusions: analyze, synthesize and interpret findings, provide alternate explanations
    - Ensure use and share lessons learned: feedback, follow up and disseminate

- 17. Kirkpatrick Model of Evaluation
    - Level 1: Reaction (Satisfaction Surveys). Was it worth the time; was it successful? What were the biggest strengths/weaknesses? Did they like the physical plant?
    - Level 2: Learning (Observations/interviews). Observable/measurable behavior change before, during, and after the program.
    - Level 3: Behavior (Observations/interviews). New or changed behavior on the job? Can they teach others? Are trainees aware of the change?
    - Level 4: Results (Program Goals/Institutional goals). Improved employee retention? Increased productivity for new employees? Higher morale?

- 18. Questions Guiding the Evaluation Process
    - What will be evaluated?
    - What criteria will be used to judge program performance?
    - What standards of performance on the criteria must be reached for the program to be considered successful?
    - What evidence will indicate performance on the criteria relative to the standards?
    - What conclusions about program performance are justified based on the available evidence?

- 19. Basic Questions: Administrative Example
    - What? Postgraduate Training Program
    - Criteria? # of qualified applicants; # of trainees who remain with the program; ROI
    - Standards of Performance? # applicants; half of trainees hired at conclusion of year; onboarding costs reduced; billable hours increase w/ramp-up
    - Evidence? HR data / reports; Financials
    - Conclusions? Is the investment worthwhile?

- 20. Goals of Good Evaluation
    - Accuracy, Utility, Feasibility, Propriety
    - Anchored in the goals and objectives of the curriculum
    - Formative and summative
    - Use measurable and observable criteria of acceptable performance
    - Multiple, expert ratings/raters: multiple observations give confidence in findings and provide an estimate of reliability (reproducibility or consistency in ratings).
    - Conclusions need to be relevant and meaningful.
    - Validity is based on a synthesis of measurements that are commonly accepted, meaningful, and accurate (to the extent that expert judgments are accurate).

- 21. Credible evidence: the raw material of a good evaluation. Believable, trustworthy, and relevant answers to evaluation questions.
    - Indicators (evidence): Translate general concepts about the program and its expected effects into specific, measurable parts (e.g., increase in patient panel / billable hours over 1 year).
    - Sources: People, documents, or observations (e.g., trainees, faculty, patients, billable hours, reflective journals). Using multiple sources enhances the evaluation's credibility. Integrating qualitative and quantitative information is more complete and more useful for the needs and expectations of a wider range of stakeholders.
    - Quantity: Determine how much evidence will be gathered in an evaluation. All evidence collected should have a clear, anticipated use.
    - Logistics: Written Plan covering methods, timing (formative and summative), and physical infrastructure to gather/handle evidence. Must be consistent with the cultural norms of the community and must ensure confidentiality is protected.

- 22. Learning Objectives
    - Knowledge:
        - Understand the goals and purpose of evaluation
        - Know the characteristics of good evaluation
        - Understand the process of evaluation
        - Understand the connection with curriculum
    - Attitude:
        - Embrace the challenge
        - Value the outcomes
    - Skills:
        - To be gained by independent / group work focused on local training program

- 23. Resources:
    - The Community Tool Box (Work Group for Community Health at the U of Kansas): incredibly complete and understandable resource; provides theoretical overviews, practical suggestions, a tool box, checklists, and an extensive bibliography.
    - Pell Institute: user-friendly toolbox that steps through every point in the evaluation process: designing a plan, data collection and analysis, dissemination and communication, program improvement.
    - CDC has an evaluation workbook for obesity programs; concepts and detailed work products can be readily adapted to NP postgraduate programs.
    - Designing Your Program Evaluation Plans: another wonderful resource; provides a self-study approach to evaluation for nonprofit organizations and is easily adapted to training programs. There are checklists and suggested activities, as well as recommended readings.
    - NNPRFTC website, blogs: http://www.nppostgradtraining.com/Education-Knowledge/Blog/ArtMID/593/ArticleID/2026/Accreditation-Standard-3-Evaluation

- 24. Action Items
    - Action Period Items: Monthly Reports; Draft Contracts/Agreements; Precepting Panel questions
    - Next Session: May 3

Editor's notes

- Hello, I am so pleased to join you today. I want to thank CHC, the Weitzman Institute, and the NCA project team for inviting me here to discuss program evaluation and its relevance for postgraduate training programs. I am Candice Rettie, the Executive Director of the National Nurse Practitioner Residency and Fellowship Training Consortium, called the Consortium, or NNPRFTC for short. Next slide please.

- To gain insight. This happens, for example, when deciding whether to use a new approach (e.g., would a neighborhood watch program work for our community?). Knowledge from such an evaluation will provide information about its practicality. For a developing program, information from evaluations of similar programs can provide the insight needed to clarify how its activities should be designed.

    To improve how things get done. This is appropriate in the implementation stage when an established program tries to describe what it has done. This information can be used to describe program processes, to improve how the program operates, and to fine-tune the overall strategy. Evaluations done for this purpose include efforts to improve the quality, effectiveness, or efficiency of program activities.

    To determine what the effects of the program are. Evaluations done for this purpose examine the relationship between program activities and observed consequences. For example, are more students finishing high school as a result of the program? Programs most appropriate for this type of evaluation are mature programs that are able to state clearly what happened and who it happened to. Such evaluations should provide evidence about what the program's contribution was to reaching longer-term goals such as a decrease in child abuse or crime in the area. This type of evaluation helps establish the accountability, and thus the credibility, of a program to funders and to the community.

    To affect those who participate in it. The logic and reflection required of evaluation participants can itself be a catalyst for self-directed change. And so, one of the purposes of evaluating a program is for the process and results to have a positive influence. Such influences may:
    - Empower program participants (for example, being part of an evaluation can increase community members' sense of control over the program);
    - Supplement the program (for example, using a follow-up questionnaire can reinforce the main messages of the program);
    - Promote staff development (for example, by teaching staff how to collect, analyze, and interpret evidence); or
    - Contribute to organizational growth (for example, the evaluation may clarify how the program relates to the organization's mission).

- Develop a Plan; Collect Data; Analyze Data; Communicate & Improve

- 5 Components: Program curriculum; Trainee -- performance, feedback, and remediation as necessary; Clinical faculty/instructor and support staff -- performance, feedback, and remediation as necessary; Organizational -- Adequacy of support including operations and finances; Overall programmatic self-evaluation -- including outcome measures and corresponding action plans.

- Throughout the steps in evaluation, always keep in mind the Standards for good evaluation. The steps are: Engage stakeholders; Describe the program; Focus the evaluation design; Gather credible evidence; Justify conclusions (analyze, synthesize and interpret findings; provide alternate explanations); Ensure use and share lessons learned (feedback, follow up and disseminate). The 4 Standards are used to assess the quality of the evaluation and are described in more detail in the Community Tool Box resource prepared by the University of Kansas. They include the accuracy of the data, the meaningfulness of the data,

- As you design your evaluation process, these are important questions to ask the evaluation group. The answers will determine how effective and how meaningful your evaluation is. You’ll want to revisit these questions periodically. You may find that a specific criterion (for example, the number of children who receive vaccinations) is difficult to gather because they get vaccinated at other sites. For the last question, what conclusions can be drawn, put on the hat of someone who doesn’t know your catchment area or what you do. Are there other explanations for the outcomes? What is the context of the outcomes?

- What – entity Criteria --

- This requires thinking broadly about what counts as "evidence." Such decisions are always situational; they depend on the question being posed and the motives for asking it.

    Indicators: The goal is to collect information that will convey a credible, well-rounded picture of the program and its efforts. Having credible evidence strengthens the evaluation results as well as the recommendations that follow from them.

    CREDIBILITY: One way to improve the evaluation's overall credibility is by using multiple procedures for gathering, analyzing, and interpreting data. Encouraging participation by stakeholders can also enhance perceived credibility. When stakeholders help define questions and gather data, they will be more likely to accept the evaluation's conclusions and to act on its recommendations.

    Sources: The criteria used to select sources should be clearly stated so that users and other stakeholders can interpret the evidence accurately and assess whether it may be biased. Using multiple sources provides an opportunity to include different perspectives about the program and enhances the evaluation's credibility. In addition, some sources provide information in narrative form (for example, a person's experience when taking part in the program) and others are numerical (for example, how many people were involved in the program).

    Logistics: By logistics, we mean the methods, timing, and physical infrastructure for gathering and handling evidence.

    WRITTEN PLAN: Techniques for gathering evidence in an evaluation must be in keeping with the cultural norms of the community. Data collection procedures should also ensure that confidentiality is protected.