The Quest for an Open Source Data Science Platform

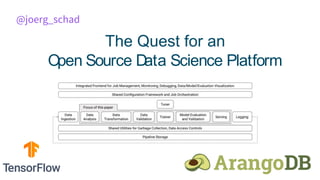

Cloud Native Night July 2019, Munich: Talk by Jörg Schad (@joerg_schad, Head of Engineering & ML at ArangoDB) === Please download slides if blurred! === Abstract: With the rapid and recent rise of data science, the Machine Learning Platforms being built are becoming more complex. For example, consider the various Kubeflow components: Distributed Training, Jupyter Notebooks, CI/CD, Hyperparameter Optimization, Feature store, and more. Each of these components is producing metadata: Different (versions) Datasets, different versions a of a jupyter notebooks, different training parameters, test/training accuracy, different features, model serving statistics, and many more. For production use it is critical to have a common view across all these metadata as we have to ask questions such as: Which jupyter notebook has been used to build Model xyz currently running in production? If there is new data for a given dataset, which models (currently serving in production) have to be updated? In this talk, we look at existing implementations, in particular MLMD as part of the TensorFlow ecosystem. Further, propose a first draft of a (MLMD compatible) universal Metadata API. We demo the first implementation of this API using ArangoDB.

Recommended

Recommended

More Related Content

What's hot

What's hot (20)

Similar to The Quest for an Open Source Data Science Platform

Similar to The Quest for an Open Source Data Science Platform (20)

More from QAware GmbH

More from QAware GmbH (20)

Recently uploaded

Recently uploaded (20)

The Quest for an Open Source Data Science Platform

- 1. The Quest for an Open Source Data Science Platform @joerg_schad

- 2. Jörg Schad, PhD ● Previous ○ Suki.ai ○ Mesosphere ○ PhD Distributed DB Systems ● @joerg_schad @joerg_schad

- 3. 3

- 4. What you want to be doing 4 Get Data Write intelligent machine learning code Train Model Run Model Repeat

- 5. 5 Sculley, D., Holt, G., Golovin, D. et al. Hidden Technical Debt in Machine Learning Systems What you’re actually doing

- 7. 8 Division of Labor Configuration Machine Resource Management and Monitoring Serving Infrastructure Data Collection Data Verification Process Management Tools Feature Extraction ML Analysis Tools Model Monitoring Inspired by “Sculley, D., Holt, G., Golovin, D. et al. Hidden Technical Debt in Machine Learning Systems” article System Admin/ DevOps Data Engineer/DataOps Data Scientist

- 9. Das Bild kann nicht angezeigt werden. Challenges 1. End-to-End pipelines as more than just infrastructure 2. Silos between Data Scientists and Ops 3. Reproducible model builds 4. Data management 5. Versioning: datasets, features, models, environments, pipelines, etc. 6. End-to-end metadata management 7. Resource management: CPU, GPU, TPU, etc. 8. …..

- 10. Do we need Data Science Engineering Principles? 11 Software Engineering The application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software IEEE Standard Glossary of Software Engineering Terminology

- 11. Do we need Data Science Engineering Principles? 12 Software Engineering The application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software IEEE Standard Glossary of Software Engineering Terminology

- 12. Das Bild kann nicht angezeigt werden. Challenges 1. End-to-End pipelines as more than just infrastructure 2. Silos between Data Scientists and Ops 3. Reproducible model builds 4. Data management 5. Versioning: datasets, features, models, environments, pipelines, etc. 6. End-to-end metadata management 7. Resource management: CPU, GPU, TPU, etc. 8. …..

- 15. 16https://www.youtube.com/playlist?list=PLQY2H8rRoyvzoUYI26kHmKSJBedn3SQuB TensorFlow Dev Summit

- 24. Logical Clocks Hops https://www.logicalclocks.com/ Raw Data Event Data Monitor HopsFS Feature Store Serving Feature StoreData Prep Ingest DeployExperiment/Train logs logs Metadata Store

- 25. Continuous Integration Monitoring & Operations Distributed Data Storage and Streaming Data Preparation and Analysis Storage of trained Models and Metadata Use trained Model for Inference Distributed Training using Machine Learning Frameworks Data & Streaming Model Engineering Model Management Model Serving Model Training Resource and Service Management TensorBoard Model Library Feature Catalogue Notebook Library

- 26. Metadata 27

- 27. 28 Metadata… Which model to pick? Common Metadata • Accuracy – Which... • Latency • Environments • Data Privacy • ….

- 30. 31 Metadata… How to store...

- 31. 32 ● Native Multi Model Database ○ Stores, K/V, Documents & Graphs ● Distributed ○ Graphs can span multiple nodes ● AQL - SQL-like multi -model query language ● ACID Transactions including Multi Collection Transactions

- 32. 33 ArangoSearch GraphsDocum ents - JSON { "type": "pants", "waist": 32, "length": 34, "color": "blue", "material": "cotton" } { "type": "television", "diagonal size": 46, "hdmi inputs": 3, "wall mountable": true, "built-in tuner": true, "dynamic contrast": "50,000:1", "Resolution": "1920x1080" } Key Values K => V K => V K => V K => V K => V K => V K => V K => V K => V K => V K => V K => V

- 33. 34 Metadata… How to store...

- 35. 36

- 37. Do we need Data Science Engineering Principles? 38 Software Engineering The application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software IEEE Standard Glossary of Software Engineering Terminology

- 38. Do we need Data Science Engineering Principles? 39 Software Engineering The application of a systematic, disciplined, quantifiable approach to the development, operation, and maintenance of software IEEE Standard Glossary of Software Engineering Terminology

- 39. 40 • Do I need Machine Learning? * • Do I need {Neural Networks, Regression,...}* • What dataset(s)? – Quality? • What target/serving environment? • What model architecture? • Pre-trained model available? • How many training resources? * Can I actually use ... Challenge: Requirements Engineering

- 40. 41 • Many adhocs model/training runs • Regulatory Requirements • Dependencies • CI/CD • Git • Time-dependent features Challenge: Reproducible Builds Step 1: Training (In Data Center - Over Hours/Days/Weeks) Dog Input: Lots of Labeled Data Output: Trained Model Deep neural network model

- 41. 42 MFlow

- 42. 43 MFlow Tracking import mlflow # Log parameters (key-value pairs) mlflow.log_param("num_dimensions", 8) mlflow.log_param("regularization", 0.1) # Log a metric; mlflow.log_metric("accuracy", 0.1) ... mlflow.log_metric("accuracy", 0.45) # Log artifacts (output files) mlflow.log_artifact("roc.png") mlflow.log_artifact("model.pkl")

- 43. 44 MFlow Project name: My Project conda_env: conda.yaml entry_points: main: parameters: data_file: path regularization: {type: float, default: 0.1} command: "python train.py -r {regularization} {data_file}" validate: parameters: data_file: path command: "python validate.py {data_file}" $mlflow run example/project -P alpha=0.5 $mlflow run git@github.com:databricks/mlflow-example.git

- 44. 45 MFlow Model time_created: 2018-02-21T13:21:34.12 flavors: sklearn: sklearn_version: 0.19.1 pickled_model: model.pkl python_function: loader_module: mlflow.sklearn pickled_model: model.pkl $mlflow run example/project -P alpha=0.5 $mlflow run git@github.com:databricks/mlflow-example.git

- 45. 46 Sculley, D., Holt, G., Golovin, D. et al. Hidden Technical Debt in Machine Learning Systems Challenge: Persona(s)

- 46. Continuous Integration Monitoring & Operations Distributed Data Storage and Streaming Data Preparation and Analysis Storage of trained Models and Metadata Use trained Model for Inference Distributed Training using Machine Learning Frameworks Data & Streaming Model Engineering Model Management Model Serving Model Training Resource and Service Management TensorBoard Model Library Feature Store Notebook Library Challenge: Metadata Metadata Layer

- 47. 1. https://medium.com/tensorflow/tensorflow-model-optimization-toolkit-post-training-integer-quantization- b4964a1ea9ba?postPublishedType=repub&linkId=68863403 2. https://blog.acolyer.org/2019/06/03/ease-ml-ci/ 3. https://blog.acolyer.org/2019/06/05/data-validation-for-machine-learning/ 4. https://databricks.com/blog/2019/06/06/announcing-the-mlflow-1-0-release.html 5. https://docs.google.com/document/d/104jv0BvQJ3unVEufmHVhJWgUAqJOk2CwsCpk5H5vYug/edit# 6. https://medium.com/tensorflow/from-research-to-production-with-tfx-pipelines-and-ml-metadata- 443a51dac188?linkId=68054243 7. https://github.com/tensorflow/tfx/tree/master/tfx/examples/chicago_taxi_pipeline 48

- 48. 49 Challenge: Data Science IDE

- 51. 52 Challenge: Testing • Training/Test/Validation Datasets • Unit Tests? • Different factors – Accuracy – Serving performance – …. • A/B Testing with live Data • Shadow Serving

- 52. 53 Challenge: Data Quality • Data is typically not ready to be consumed by ML job* – Data Cleaning • Missing/incorrect labels – Data Preparation • Same Format • Same Distribution * Demo datasets are a fortunate exception :)

- 55. 56 Challenge: Data (Preprocessing) Sharing Feature Catalogue Data & Streaming Model Engineering Model Training • Preprocessed Data Sets valuable – Sharing – Automatic Updating • Feature Catalogue ⩬ Preprocessing Cache + Discovery https://eng.uber.com/michelangelo/

- 56. Challenge: Features Feature Stores • Discoverability • Consistency • Versioning • Monitoring • Caching • Backfill (for time-dependent features) 57

- 57. 58 Challenge: Model Libraries • Existing architectures • Pretrained models

- 58. 59 Machine Learning Model Serving • Deploying models – Choice… – Metrics • Updating models – Zero downtime – Target environment • Testing models – Test model with live data • Ensemble Decision – Multiple models working together https://www.tensorflow.org/tfx/guide/serving

- 61. 62 Horovod https://eng.uber.com/horovod/ • All-Reduce to update Parameter – Bandwidth Optimal • Uber Horovod is MPI based – Difficult to set up – Other Spark based implementations • Wait for TensorFlow 2.0 ;)

- 62. 63 TF Distribution Strategy https://github.com/tensorflow/tensorflow/tree/master/tensorflow/contrib/distribute ● MirroredStrategy: This does in-graph replication with synchronous training on many GPUs on one machine. Essentially, we create copies of all variables in the model's layers on each device. We then use all-reduce to combine gradients across the devices before applying them to the variables to keep them in sync. ● CollectiveAllReduceStrategy: This is a version of MirroredStrategy for multi-working training. It uses a collective op to do all- reduce. This supports between-graph communication and synchronization, and delegates the specifics of the all-reduce implementation to the runtime (as opposed to encoding it in the graph). This allows it to perform optimizations like batching and switch between plugins that support different hardware or algorithms. In the future, this strategy will implement fault- tolerance to allow training to continue when there is worker failure. ● ParameterServerStrategy: This strategy supports using parameter servers either for multi-GPU local training or asynchronous multi-machine training. When used to train locally, variables are not mirrored, instead they placed on the CPU and operations are replicated across all local GPUs. In a multi-machine setting, some are designated as workers and some as parameter servers. Each variable is placed on one parameter server. Computation operations are replicated across all GPUs of the workers.

- 63. 64 Challenge: Writing Distributed Model Functions

- 65. 66 Profiling https://www.tensorflow.org/performance/performance_guide • Crucial when using “expensive” devices • Memory Access Pattern • “Secret knowledge” • More is not necessarily better....

- 66. 67 Hyperparameter Optimization Step 1: Training (In Data Center - Over Hours/Days/Weeks) Dog Input: Lots of Labeled Data Output: Trained Model Deep neural network model https://towardsdatascience.com/understanding-hyperparameters-and-its- optimisation-techniques-f0debba07568 ● Networks Shape ● Learning Rate ● ...

- 69. 70 Challenge: Monitoring • Understand {...} • Debug • Model Quality – Accuracy – Training Time – … • Overall Architecture – Availability – Latencies – ... • TensorBoard • Traditional Cluster Monitoring Tool