Presentation of the SEALS project

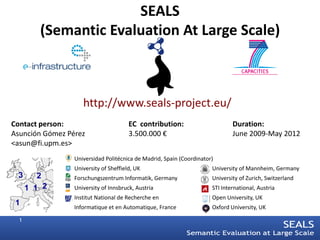

- 1. SEALS (Semantic Evaluation At Large Scale), http://www.seals-project.eu/ • Contact person: Asunción Gómez Pérez <asun@fi.upm.es> • EC contribution: 3.500.000 € • Duration: June 2009-May 2012 • Partners: Universidad Politécnica de Madrid, Spain (Coordinator); University of Sheffield, UK; University of Mannheim, Germany; Forschungszentrum Informatik, Germany; University of Zurich, Switzerland; University of Innsbruck, Austria; STI International, Austria; Institut National de Recherche en Informatique et en Automatique, France; Open University, UK; Oxford University, UK

- 2. Motivation [Figure: items labelled T1 to T7, annotated with problems and open questions: not scalable, execution problems, unstable, maximum ontology size, not interoperable, triples per millisecond]

- 3. SEALS Objectives • The SEALS Platform – A lasting reference infrastructure for semantic technology evaluation – The evaluations to be executed on-demand at the SEALS Platform • The SEALS Evaluation Campaigns – Two public evaluation campaigns including the best-in-class semantic technologies: ontology engineering tools, ontology storage and reasoning systems, ontology matching tools, semantic search tools, Semantic Web Service tools – Semantic technology roadmaps • The SEALS Community – Around the evaluation of semantic technologies

- 4. The SEALS Platform Provides the infrastructure for evaluating semantic technologies • Open (everybody can use it) • Scalable (to users, data size) • Extensible (to more tests, different technology, more measures) • Sustainable (beyond SEALS) • Independent (unbiased) • Repeatable (evaluations can be reproduced) A platform for remote evaluation of semantic technology: • Ontology engineering tools • Storage systems and reasoners • Ontology matching • Semantic search • Semantic web services According to criteria: • Interoperability • Scalability • Specific measures (e.g., completeness of query answers, matching precision)

- 5. Overall SEALS Platform Architecture [Diagram components: Evaluation Organisers, Technology Providers, Technology Adopters; SEALS Portal; evaluation requests and entity management requests; SEALS Service Manager; Runtime Evaluation Service; software agents, i.e., technology evaluators; SEALS Repositories: Test Data Repository Service, Tools Repository Service, Results Repository Service, Evaluation Descriptions Repository Service]

- 6. Project overview • Networking Activities: – WP1: Project Management (UPM) – WP2: Dissemination, Community Building and Sustainability (STI2) – WP3: Evaluation Campaigns and Semantic Technology Roadmaps (USFD) • Service Activities (SEALS Platform): – WP4: SEALS Service Manager (UPM) – WP5: Test Data Repository Service (UIBK) – WP6: Tools Repository Service (UIBK) – WP7: Results Repository Service (UIBK) – WP8: Evaluations Repository Service (FZI) – WP9: Runtime Evaluation Service (UPM) • Joint Research Activities: – WP10: Ontology Engineering Tools (FZI) – WP11: Storage and Reasoning Systems (OXF) – WP12: Matching Tools (INRIA) – WP13: Semantic Search Tools (USFD) – WP14: Semantic Web Service Tools (OU)

- 7. Two-phase action plan [Diagram: two phases of 18 months each. Labels in each phase: Service Activities, SEALS Platform, Services, evaluation results, technology roadmaps, new requirements]

- 9. The SEALS Platform in the evaluation campaigns [Diagram components: Evaluation Organisers, Technology Developers, Technology Users; SEALS Service Manager; Runtime Evaluation Service; Evaluation Repository Service, Test Data Repository Service, Tool Repository Service, Result Repository Service]

- 10. The SEALS entities • Entity types: tools, test data, evaluation descriptions, evaluation results • Entities are described using: – Data (the entity itself) – Metadata (that describes the entity) • Machine-interpretable descriptions of evaluations – Using BPEL
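
The data/metadata split described on this slide can be sketched as a small data structure. This is only an illustration: the class names, field names, and metadata keys below are hypothetical and are not taken from the SEALS Platform or its ontologies.

```python
from dataclasses import dataclass, field
from enum import Enum


class EntityKind(Enum):
    """The four entity types named on the slide (enum itself is hypothetical)."""
    TOOL = "tool"
    TEST_DATA = "test data"
    EVALUATION_DESCRIPTION = "evaluation description"
    EVALUATION_RESULT = "evaluation result"


@dataclass
class Entity:
    kind: EntityKind
    data: bytes                                    # the entity itself
    metadata: dict = field(default_factory=dict)   # describes the entity


# Hypothetical example: a tiny test-data entity with made-up metadata keys.
suite = Entity(
    kind=EntityKind.TEST_DATA,
    data=b"<rdf:RDF/>",
    metadata={"title": "Example import test", "creator": "example"},
)
print(suite.kind.value, sorted(suite.metadata))
```

Keeping the metadata separate from the payload means a repository can index and search entities without having to parse the data itself.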

- 11. The SEALS ontologies • Describe: – Evaluations (+ all relevant information) – Evaluation campaigns • Reused existing ontologies (e.g., Dublin Core, FOAF, VCard) • http://www.seals-project.eu/ontologies/ • 14.09.2010

- 13. Ontology Engineering Tools • Goal: To evaluate the ontology management capabilities of ontology engineering tools – Ontology editors • Protégé • NeOn Toolkit • (your tool here) – Ontology management frameworks and APIs • Jena • Sesame • OWL API • (your tool here)

- 14. Ontology Engineering Tools • Evaluation services for: – Conformance – Interoperability – Scalability • Test data: – RDF(S) Import Test Suite – OWL Lite Import Test Suite – OWL DL Import Test Suite – OWL Full Import Test Suite – Scalability test data

- 15. Storage and Reasoning Systems • Goal: to evaluate the interoperability and performance of description logic based systems (DLBSs) • Standard reasoning services: – Classification – Class satisfiability – Ontology satisfiability – Logical entailment

- 16. Storage and Reasoning Systems • Test Data – Gardiner evaluation suite (300 ontologies) – [Wang06] ontologies suite (600 ontologies) – Various versions of the GALEN ontology – Ontologies created in EU-funded projects: SEMINTEC, VICODI, AEO, ... – Abox generator [Stoilos10]

- 17. Storage and Reasoning Systems • Evaluation Criteria – Interoperability – Performance • Metrics – The number of tests passed without I/O errors – Time • Tools – HermiT, Pellet, FaCT++, Racer Pro, CEL, CB, …
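
The two metrics on this slide (tests passed without I/O errors, and time) could be collected along the following lines. This is a minimal sketch under stated assumptions: `run_tests`, `fake_reasoner`, and the file names are hypothetical, a "reasoner" is modelled as any callable that takes an ontology path, and an I/O error is modelled as Python's `OSError`. It is not the SEALS Platform's actual harness.

```python
import time
from typing import Callable, Iterable, Tuple


def run_tests(reasoner: Callable[[str], object],
              ontologies: Iterable[str]) -> Tuple[int, float]:
    """Return (tests passed without I/O errors, seconds spent on passed tests)."""
    passed = 0
    total_seconds = 0.0
    for path in ontologies:
        start = time.perf_counter()
        try:
            reasoner(path)
        except OSError:
            # An I/O error means the test does not count as passed.
            continue
        total_seconds += time.perf_counter() - start
        passed += 1
    return passed, total_seconds


def fake_reasoner(path: str) -> str:
    """Stand-in for a real reasoner; fails to load one hypothetical file."""
    if path.endswith("missing.owl"):
        raise OSError("cannot read " + path)
    return "classified"


passed, seconds = run_tests(fake_reasoner, ["a.owl", "missing.owl", "b.owl"])
print(passed)
```

In this sketch, time is only accumulated for tests that complete, so a tool is not credited with a fast run just because it failed early.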

- 18. Matching Tools • Goals: – To evaluate the competence of matching systems with respect to different evaluation criteria. – To demonstrate the feasibility and benefits of automating matching evaluation. • Criteria – Conformance • standard precision and recall • restricted semantic precision and recall • alignment coherence – Efficiency • runtime • memory consumption
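
Standard precision and recall, the first conformance measure above, compare the correspondences a matcher returns with a reference alignment. The sketch below assumes an alignment can be simplified to a set of (source entity, target entity) pairs. The function name and the example identifiers are hypothetical, and the restricted semantic variants and alignment coherence are not covered.

```python
from typing import Set, Tuple

Correspondence = Tuple[str, str]  # (entity in ontology 1, entity in ontology 2)


def precision_recall(found: Set[Correspondence],
                     reference: Set[Correspondence]) -> Tuple[float, float]:
    """Standard precision and recall of a found alignment against a reference."""
    correct = len(found & reference)
    precision = correct / len(found) if found else 0.0
    recall = correct / len(reference) if reference else 0.0
    return precision, recall


# Made-up correspondences, for illustration only.
reference = {
    ("mouse:Heart", "nci:Heart"),
    ("mouse:Lung", "nci:Lung"),
    ("mouse:Eye", "nci:Eye"),
    ("mouse:Ear", "nci:Ear"),
}
found = {
    ("mouse:Heart", "nci:Heart"),
    ("mouse:Lung", "nci:Lung"),
    ("mouse:Eye", "nci:Nose"),   # wrong correspondence
}
precision, recall = precision_recall(found, reference)
print(round(precision, 3), recall)
```

Here two of the three returned correspondences are correct (precision 2/3) and two of the four reference correspondences are found (recall 1/2).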

- 19. Matching Tools • Data sets: three subsets from OAEI – Anatomy: matching the Adult Mouse Anatomy (2744 classes) and the NCI Thesaurus (3304 classes) describing the human anatomy. – Benchmark: the goal is to identify the areas in which each matching algorithm is strong or weak. One particular ontology of the bibliography domain is compared with a number of alternative ontologies on the same domain. – Conference: collection of conference organization ontologies. The goal is to materialize in alignments aggregated statistical observations and/or implicit design patterns.

- 20. Matching Tools • Evaluation criteria and metrics – Conformance • standard precision and recall • restricted semantic precision and recall • alignment coherence – Efficiency • runtime • memory consumption • Tools – ASMOV – Aroma – Falcon-AO – Lily – SAMBO

- 21. Matching Tools • Scenario 1 – Test data: Benchmark – Criteria: conformance with expected results • Scenario 2 – Test data: Anatomy – Criteria: conformance with expected results, efficiency in terms of memory consumption and execution time • Scenario 3 – Test data: Conference – Criteria: conformance with expected results and coherence
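
The three scenarios can be written down as a small configuration table, which makes it easy to ask which scenarios exercise a given criterion. The dictionary layout and the `scenarios_for` helper are hypothetical; only the scenario contents come from the slide.

```python
# Scenario table transcribed from the slide; the layout is an assumption.
SCENARIOS = {
    "Scenario 1": {"test_data": "Benchmark",
                   "criteria": {"conformance"}},
    "Scenario 2": {"test_data": "Anatomy",
                   "criteria": {"conformance", "efficiency"}},
    "Scenario 3": {"test_data": "Conference",
                   "criteria": {"conformance", "coherence"}},
}


def scenarios_for(criterion: str) -> list:
    """Names of the scenarios that evaluate the given criterion, sorted."""
    return sorted(name for name, spec in SCENARIOS.items()
                  if criterion in spec["criteria"])


print(scenarios_for("efficiency"))
```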

- 22. Semantic Search • Goals – Benchmark effectiveness of search tools – Emphasis on tool usability since search is an inherently user-centered activity – Still interested in automated evaluation for other aspects – Two-phase approach: • Automated evaluation: runs on SEALS Platform • User-in-the-loop: human experiment

- 23. Semantic Search: Data • User-in-the-loop: Mooney – Pre-existing dataset – Extended question set to create unseen questions and a number of more 'complex' questions – Well suited to human-based experiments: easy to understand domain • Automated: EvoOnt – Bespoke dataset – 5 different sizes (1k, 10k, 100k, 1M, 10M triples) – Well suited to automated experiments: range of sizes, and questions can be of arbitrary complexity • Each of the 6 data sets (1 Mooney, 5 EvoOnt) has a set of natural language questions and associated ground truths

- 24. Semantic Search • Criteria for user-centred search: – Query expressiveness – Usability (effectiveness, efficiency, satisfaction) – Scalability – Interoperability

- 25. Semantic Search • Metrics – Core metrics: precision, recall and f-measure of the triples returned for each query – Other metrics: • tool performance metrics (e.g., memory usage, CPU load) • user-centric metrics (e.g., time to obtain the final answer) • System Usability Scale (SUS) questionnaire – Also collect demographic information to correlate with metrics • Tools – K-Search (produced by K-Now, a Sheffield spin-out company) – Ginseng (Zurich)
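
Two of these metrics have simple closed forms and are sketched below: the f-measure of the triples returned for one query against its ground truth, and the usual SUS scoring rule (ten items answered on a 1 to 5 scale, odd items contribute the response minus 1, even items contribute 5 minus the response, and the sum is multiplied by 2.5). The function names and sample triples are hypothetical, and the slides do not say how SEALS itself computes these values.

```python
from typing import Sequence, Set, Tuple

Triple = Tuple[str, str, str]


def f_measure(returned: Set[Triple], ground_truth: Set[Triple]) -> float:
    """Harmonic mean of precision and recall for one query's result triples."""
    correct = len(returned & ground_truth)
    if correct == 0:
        return 0.0
    precision = correct / len(returned)
    recall = correct / len(ground_truth)
    return 2 * precision * recall / (precision + recall)


def sus_score(responses: Sequence[int]) -> float:
    """System Usability Scale score (0 to 100) from ten answers in 1..5."""
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs exactly ten responses, each from 1 to 5")
    total = 0
    for item, response in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (response - 1) if item % 2 == 1 else (5 - response)
    return total * 2.5


# Made-up triples, for illustration only.
truth = {("s1", "p", "o1"), ("s2", "p", "o2"), ("s3", "p", "o3"), ("s4", "p", "o4")}
answer = {("s1", "p", "o1"), ("s2", "p", "o2"), ("s9", "p", "o9")}
print(round(f_measure(answer, truth), 3), sus_score([3] * 10))
```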

- 26. Semantic Web Services • Goal: To evaluate Semantic Web Service discovery • Test data: – OWLS Test Collection (OWLS-TC) – SAWSDL Test Collection (SAWSDL-TC) – Seekda Services – OPOSSum Services

- 30. Dissemination activities • Portal – Evaluation campaigns each have their own section – News pages with RSS for announcements and updates – Events list • Next Events – IWEST Workshop at ISWC, November 2010 – Campaign events at ISWC: Ontology Matching workshop and S3 Semantic Service Selection – EKAW2010 sponsorship of best paper • Previous Events – ESWC2010 sponsorship, tutorial, News from the Front, material distribution – AAAI2010 Outstanding Paper

- 31. Community Building • Registration form on the portal – Community area provides a Web interface to SEALS portal functionalities, such as registering and uploading a tool or accessing evaluation results • SEALS Community launched in summer 2010 – >100 persons from research and industry • Provider Involvement Program – Invitations to be sent out directly by the campaigns to tool vendors (116 tools from 100 vendors have been identified by the campaigns to date)

- 32. The SEALS Evaluation Campaigns 2010 • Ontology Engineering Tools: OET Conformance 2010, OET Interoperability 2010, OET Scalability 2010 • Reasoning Tools: DLBS Classification 2010, DLBS Class satisfiability 2010, DLBS Ontology satisfiability 2010, DLBS Logical entailment 2010 • Ontology Matching Tools: MT Benchmark 2010, MT Anatomy 2010, MT Conference 2010 • Semantic Search Tools: SST Automated Search Performance 2010, SST Automated Performance and Scalability 2010, SST Automated Query Expressiveness 2010, SST Automated Quality of Documentation 2010, SST User Usability 2010, SST User Query Expressiveness 2010 • Semantic Web Service Tools: SWS Tool Discovery Evaluation 2010, SWS S3 (Semantic Service Selection) Contest 2010 • Criteria named on the slide: conformance, interoperability, efficiency, scalability, alignment coherence, search quality, resource consumption, query expressiveness, usability, performance • Next evaluation campaigns during 2011-2012

- 33. Community participation in the 1st phase • Timeline: November 2009, May 2010, July 2010, November 2010 • Project milestones and the matching community actions: – Provide requirements – Definition of evaluations and test data: comment on the evaluations and test data – Launch of the 1st Evaluation Campaign: join the Evaluation Campaign! – First release of the SEALS Platform: run your own evaluations – Results of the 1st Evaluation Campaign: see and discuss Evaluation Campaign results

- 34. Conclusions • We will provide (1st prototype in 2010): – Evaluation services and datasets for the evaluation of semantic technologies: ontology engineering tools, storage and reasoning systems, matching tools, semantic search tools, Semantic Web Service tools • Benefits for: – Researchers. Validate their research and compare with others – Developers. Evaluate their tools, compare with others and monitor – Providers. Verify and show that their tools work, increase visibility – Users. Select tools or sets of tools between alternatives • We ask for: – Semantic technologies using the SEALS Platform for their evaluations – Semantic technologies participating in the evaluation campaigns

- 35. Semantic technology roadmaps [Figure: evaluations of items labelled T1 to T7, annotated with: not scalable, maximum ontology size, unstable, not interoperable, execution problems, triples per millisecond]

- 36. SEALS provides evaluation services to the community! • Contact: Coordinator: Asunción Gómez Pérez <asun@fi.upm.es> • SEALS Community Portal: http://www.seals-project.eu/ • SEALS Evaluation Campaigns: http://www.seals-project.eu/seals-evaluation-campaigns