Vanishing & Exploding Gradients


Vanishing & Exploding Gradients issue in deep learning

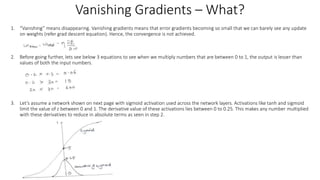

- 1. Vanishing Gradients – What?
  1. “Vanishing” means disappearing. With vanishing gradients, the error gradients become so small that the weights barely change on each update (refer to the gradient descent equation). As a result, training does not converge.
  2. Before going further, note what the three equations on the slide show: when we multiply numbers that are between 0 and 1, the product is smaller than either input.
  3. Assume the network shown on the next slide, with sigmoid activations in every layer. Sigmoid limits its output to between 0 and 1, and tanh limits its output to between -1 and 1. The derivative of sigmoid lies between 0 and 0.25; the derivative of tanh lies between 0 and 1 and reaches 1 only at z = 0. Any number multiplied by a sigmoid derivative therefore shrinks in absolute terms, as seen in step 2.
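The shrinking effect described on this slide can be checked numerically. The following is a minimal sketch in plain Python, not taken from the slides; the function names and the layer counts are chosen here for illustration only.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_derivative(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def tanh_derivative(z):
    return 1.0 - math.tanh(z) ** 2

# Multiplying two numbers between 0 and 1 gives a result smaller than either.
product = 0.5 * 0.4

# The sigmoid derivative is largest at z = 0, where it equals 0.25.
peak = sigmoid_derivative(0.0)

def best_case_chain(n_layers):
    """Largest possible product of n sigmoid derivatives (each at its peak)."""
    return peak ** n_layers

for n in (1, 5, 10):
    print(n, best_case_chain(n))
```

Even in the best case, where every sigmoid derivative sits at its peak, the product falls by a factor of four per layer.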

- 3. Vanishing Gradients – How to Avoid?
  1. Reason: Compare the equation for the gradient of the error w.r.t. w17 with the one for the gradient of the error w.r.t. w23. Computing the gradient w.r.t. w17 (a weight in an initial layer) requires multiplying far more terms than computing the gradient w.r.t. w23 (a weight in a later layer). The terms in these gradients that are partial derivatives of the activation lie between 0 and 0.25 (refer to point 3 on slide 1). Because the error gradients of the initial layers contain more factors smaller than 1, the vanishing gradient effect is most prominent in the initial layers of the network. The number of terms required to compute the gradient w.r.t. w1, w2, etc. is quite high.

     Resolution: To reduce the chance of vanishing gradients, use activations whose derivative is not limited to values less than 1. ReLU is one option: its derivative for positive inputs is 1. The issue with ReLU is that its derivative for negative inputs is 0, which makes the contribution of some nodes 0. This can be managed by using Leaky ReLU instead, which keeps a small nonzero slope for negative inputs.
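To make the comparison between early and late weights concrete, here is a rough sketch that multiplies activation derivatives along a backpropagation path. The pre-activation values and the Leaky ReLU slope of 0.01 are hypothetical, and the network is not the one in the slide's figure.

```python
import math

def d_sigmoid(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)

def d_relu(z):
    return 1.0 if z > 0 else 0.0

def d_leaky_relu(z, alpha=0.01):
    # alpha is an assumed slope for negative inputs
    return 1.0 if z > 0 else alpha

def backprop_factor(pre_activations, derivative):
    """Product of activation derivatives along the path back from the loss."""
    factor = 1.0
    for z in pre_activations:
        factor *= derivative(z)
    return factor

# Hypothetical pre-activation values for an 8-layer path (all positive).
z_values = [0.5, 1.0, 0.2, 1.5, 0.8, 0.3, 1.2, 0.6]

early_sigmoid = backprop_factor(z_values, d_sigmoid)      # early weight: 8 factors
late_sigmoid = backprop_factor(z_values[-2:], d_sigmoid)  # late weight: 2 factors
early_relu = backprop_factor(z_values, d_relu)            # every factor is 1

# One negative pre-activation zeroes the ReLU path but not the Leaky ReLU path.
z_with_negative = [0.5, -1.0, 0.2]
dead_relu = backprop_factor(z_with_negative, d_relu)
leaky = backprop_factor(z_with_negative, d_leaky_relu)

print(early_sigmoid, late_sigmoid, early_relu, dead_relu, leaky)
```

The sketch ignores the weight terms in the gradient on purpose, so that only the effect of the activation derivative is visible.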

- 4. Vanishing Gradients – How to Avoid?

- 5. Vanishing Gradients – How to Avoid?
  2. Reason: The first problem discussed was the use of activations whose derivatives are small. The second problem is weights that are initialized to small values. Take the network on the previous slide as a simple example: the equation for the error gradient w.r.t. w1 also includes the value of w5. If w5 is initialized to a very small value, it also helps shrink the gradient w.r.t. w1, i.e. it contributes to the vanishing gradient. Vanishing gradient problems are also more prominent in deep networks, because the number of multiplicative terms needed to compute the gradients of the initial layers is very high.

     Resolution: As the equations on the slide show, both the derivative of the activation function and the weights play a role in causing vanishing gradients, because both appear in the equation for the error gradient. We need to initialize the weights properly to avoid the vanishing gradient problem. This is discussed further in the weight initialization strategy section.
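The role of the later weights in an early weight's gradient can be illustrated with a scalar chain of sigmoid units. This is a simplified sketch under assumed values (six layers, input 1.0, weights of 0.1 versus 1.0), not the network from the slides.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, weights):
    """Scalar chain a_k = sigmoid(w_k * a_{k-1}); returns every activation."""
    activations = [x]
    for w in weights:
        activations.append(sigmoid(w * activations[-1]))
    return activations

def grad_first_weight(x, weights):
    """Derivative of the final output w.r.t. the first weight (chain rule)."""
    a = forward(x, weights)
    grad = x  # d(z_1)/d(w_1) is the input
    for k in range(len(weights)):
        s = a[k + 1]
        grad *= s * (1.0 - s)          # sigmoid derivative at layer k + 1
        if k + 1 < len(weights):
            grad *= weights[k + 1]     # later weight on the backward path
    return grad

small_weights = [0.1] * 6      # assumed "very low" initialization
moderate_weights = [1.0] * 6   # assumed comparison point

grad_small = grad_first_weight(1.0, small_weights)
grad_moderate = grad_first_weight(1.0, moderate_weights)
print(grad_small, grad_moderate)
```

With small weights, each later weight multiplies the gradient of the first weight by another 0.1 on top of the sigmoid derivative, so the gradient is several orders of magnitude smaller than with weights of 1.0.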

- 6. Exploding Gradients – What?
  1. “Exploding” means increasing to a large extent. With exploding gradients, the error gradients become so big that the weight update is too large in every iteration. The weights swing widely and the error keeps overshooting the minimum, so convergence becomes hard to achieve.
  2. Exploding gradients are caused by large weights in the network.
  3. Probable resolutions
     1. Keep the learning rate low to compensate for the larger weights
     2. Gradient clipping
     3. Gradient scaling
  4. Gradient scaling
     1. For every batch, get the gradient vectors for all samples.
     2. Find the L2 norm of the concatenated error gradient vector.
        1. If the L2 norm > 1 (1 is used as an example here),
        2. scale/normalize the gradient terms so that the L2 norm becomes 1.
     3. Code example (Keras): `opt = SGD(learning_rate=0.01, momentum=0.9, clipnorm=1.0)`. Older Keras releases spell the first argument `lr`. Note that Keras applies `clipnorm` to the gradient of each weight separately; `global_clipnorm` applies the limit to the norm taken over all weights together.
  5. Gradient clipping
     1. If the gradient value w.r.t. any weight is outside a range (let’s say -0.5 <= gradient_value <= 0.5), we clip the gradient value to the nearest border value. If the gradient value is 0.6, we clip it to 0.5.
     2. Code example (Keras): `opt = SGD(learning_rate=0.01, momentum=0.9, clipvalue=0.5)`
  6. Generic practice is to use the same clipping / scaling values throughout the network.
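The two procedures on this slide can be sketched in plain Python without any framework. This illustrates the idea only and is not how Keras implements `clipnorm` or `clipvalue` internally; the function names and the sample gradient values are made up for the example.

```python
import math

def scale_by_norm(gradients, max_norm=1.0):
    """Rescale the whole gradient vector if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in gradients))
    if norm <= max_norm:
        return list(gradients)
    return [g * max_norm / norm for g in gradients]

def clip_by_value(gradients, limit=0.5):
    """Clamp each gradient component to the range [-limit, limit]."""
    return [max(-limit, min(limit, g)) for g in gradients]

grads = [3.0, -4.0, 0.6]          # hypothetical gradient vector
scaled = scale_by_norm(grads)     # L2 norm becomes 1
clipped = clip_by_value(grads)    # each value limited to +/- 0.5

print(scaled)
print(clipped)
```

Scaling keeps the direction of the gradient vector and only shortens it, whereas clipping by value treats each component independently and can therefore change the direction of the update.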

Editor's Notes

- Why is BN not applied in batch or stochastic mode? When using ReLU, you can encounter the dying ReLU problem; in that case use Leaky ReLU with the He initialization strategy (to be covered in the activation function video).
