Anomaly Detection in Sequences of Short Text Using Iterative Language Models

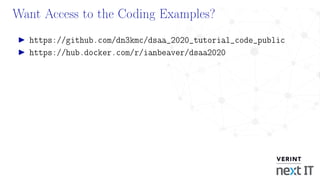

- 1. Want access to the coding examples? Code repository: https://github.com/dn3kmc/dsaa_2020_tutorial_code_public and Docker image: https://hub.docker.com/r/ianbeaver/dsaa2020

- 2. How to Determine the Optimal Anomaly Detection Method For Your Application. Cynthia Freeman, Research Scientist; Ian Beaver, Chief Scientist

- 3. Overview: (1) Background: define time series and anomalies; why is anomaly detection hard? (2) Time series characteristics and how to detect them: seasonality, trend, concept drift, missing time steps. (3) Dataset resources. (4) Anomaly detection methods: STL, SARIMA, Prophet, GPs, RNNs, etc. (5) Evaluation methods: Numenta Anomaly Benchmark scores, windowed F-scores. (6) Which anomaly detection method given a characteristic? (7) Human-in-the-loop methods.

- 5. Background

- 6. Time Series: A time series is a sequence of data points indexed in time order. How are time series used? Stock market tracking, KPIs, medical sensors, weather patterns.

- 7. Anomalies: An anomaly in a time series is a pattern that does not conform to past patterns of behavior. Applications: efficient troubleshooting, fraud detection, ensuring undisrupted business, saving lives in system health monitoring. Anomaly detection is hard!

- 8. What exactly is anomalous?

- 9. The need for ONLINE anomaly detection

- 10. The lack of labeled data

- 11. Data imbalance

- 12. The need to minimize false positives

- 13. What anomaly detection method should I use?

- 14. Which anomaly detection method should I use? Base this decision on the characteristics the time series possesses. As an example, evaluate anomaly detection methods on time series characteristics, experimenting with 2 evaluation criteria: the window-based F-score and the Numenta Anomaly Benchmark (NAB) score. Also consider human-in-the-loop methodologies.

- 15. Signal Processing Flow for Anomaly Detection: signal → residual → detect → filter → score

- 16. Simple Example: Sliding Gaussian Window Detector. Estimate the mean and variance over a sliding window. Compute a score based on the tail probability $S(y_t) = P(y_t \le \tau \mid \mu, \sigma^2)$. Use the max relative to the upper and lower extremes. [Figure: example signal, 02-24 to 02-28]
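A minimal sketch of the sliding Gaussian window detector, assuming the mean and standard deviation are estimated from the `window` observations immediately preceding each point, and reading "max relative to upper and lower extremes" as taking the larger of the lower-tail probability $\Phi(z)$ and the upper-tail probability $1 - \Phi(z)$. The function name and parameters are illustrative, not taken from the tutorial code.

```python
import math

import numpy as np


def sliding_gaussian_scores(y, window):
    """Score each point against a Gaussian fitted to the preceding `window` points.

    Returns NaN for the first `window` points, which have no full history.
    Scores lie in [0.5, 1]: 0.5 means "at the window mean", values near 1 are extreme.
    """
    y = np.asarray(y, dtype=float)
    scores = np.full(len(y), np.nan)
    for t in range(window, len(y)):
        past = y[t - window:t]
        mu = past.mean()
        sigma = max(past.std(), 1e-9)                      # guard against a flat window
        z = (y[t] - mu) / sigma
        lower_tail = 0.5 * math.erfc(-z / math.sqrt(2.0))  # P(Y <= y_t) = Phi(z)
        scores[t] = max(lower_tail, 1.0 - lower_tail)      # keep the more extreme tail
    return scores


# Usage: a sine wave with one injected spike at index 150.
series = np.sin(np.arange(200) / 5.0)
series[150] += 5.0
scores = sliding_gaussian_scores(series, window=30)
print(int(np.nanargmax(scores)))  # 150
```

Because the window only looks backward, the detector can run online; the price is that the first `window` points get no score.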

- 17. Simple Example: Sliding Gaussian Window Detector [Figure: anomaly score (0.5 to 1.0) from 2014-02-24 to 2014-02-28, and the underlying signal (y-axis "log") from 2014-02-20 to 2014-03-01]

- 19. Stationarity: A time series is stationary if its mean, variance, and autocorrelation structure are constant over time. Autocorrelation is the correlation of a signal with a delayed copy of itself. A white noise process is stationary.

- 20. How can a time series be non-stationary? Several possibilities: seasonality, trend, concept drift.

- 21. Seasonality: The presence of variations that occur at specific regular intervals. Real data often exhibits seasonal effects at multiple time scales (day-of-week, hour-of-day). Seasonality can be irregular (day-of-month, holidays). It can be additive or multiplicative; if multiplicative, the amplitude of the seasonal behavior depends on the mean. [Figure: example series, timestamps around July 2014]

- 22. Seasonality is not always obvious at a glance! [Figure: a time series and its autocorrelation plot] The autocorrelation plot can help identify seasonality; it displays the autocorrelation coefficients.

- 23. Autocorrelation Plots: The autocorrelation coefficient is $r_k = \frac{\sum_{t=k+1}^{T} (y_t - \bar{y})(y_{t-k} - \bar{y})}{\sum_{t=1}^{T} (y_t - \bar{y})^2}$, where $r_k$ is the correlation between $y_t$ and $y_{t-k}$ and $T$ is the length of the time series. [Figure: a time series and its autocorrelation plot] The x-axis is $k$ (lag) and the y-axis is $r_k$. Seasonality present → definitive repeated spikes.
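A direct NumPy translation of the $r_k$ formula, as a sketch (`autocorrelation` is an illustrative name; statsmodels' `acf` is a maintained alternative). The usage line shows the "repeated spikes" signature: a period-12 signal correlates strongly with itself at lag 12 and anti-correlates at lag 6.

```python
import numpy as np


def autocorrelation(y, max_lag):
    """Sample autocorrelation r_k for k = 0..max_lag, following the r_k formula."""
    y = np.asarray(y, dtype=float)
    dev = y - y.mean()            # y_t - y_bar
    denom = np.sum(dev ** 2)      # sum over t = 1..T
    # Numerator for lag k pairs y_t with y_{t-k} for t = k+1..T.
    r = [np.sum(dev[k:] * dev[:len(y) - k]) / denom for k in range(max_lag + 1)]
    return np.array(r)


t = np.arange(240)
r = autocorrelation(np.sin(2 * np.pi * t / 12), max_lag=36)
print(np.round(r[[6, 12, 24]], 2))
```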

- 24. Automatic Detection of Seasonality: What about a function to automatically detect seasonality? R's findfrequency returns the period with the maximum spectral amplitude of the signal. What does this mean? Quick review: period = number of time steps required to complete a single cycle; frequency = fraction of a cycle completed in a single time step, so frequency = 1/period; amplitude = measure of change in a single period. The spectral density is a frequency-domain representation of a time series: we want to represent the time series as a sum of sine and cosine waves!

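- 25. Automatic Detection of Seasonality: Given a time series with $n$ values, we can represent it as a sum of sine and cosine waves: $x_t = \sum_{j=1}^{n/2} \left[ \beta_1\!\left(\tfrac{j}{n}\right) \cos(2\pi \omega_j t) + \beta_2\!\left(\tfrac{j}{n}\right) \sin(2\pi \omega_j t) \right]$, where $\omega_j = \frac{1}{n}, \frac{2}{n}, \ldots, \frac{n/2}{n}$ are the harmonic frequencies ($j$ a positive integer) and $\beta_1(j/n)$, $\beta_2(j/n)$ are parameters that can be estimated using the FFT.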

- 26. Automatic Detection of Seasonality: A periodogram graphs the importance of each possible frequency value in explaining the oscillation pattern of the data. After the FFT, we can plot the periodogram: the x-axis is frequency $j/n$ and the y-axis is $P(j/n) = \beta_1^2(j/n) + \beta_2^2(j/n)$. A large $P(j/n)$ means frequency $j/n$ is important in explaining the oscillation in the observed series.
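A raw-periodogram sketch of the steps above, assuming an FFT of the mean-removed series. It is not a reimplementation of R's findfrequency, which may estimate the spectrum differently and can give different answers on noisy data. The scaling follows the slide's definition: with $\beta_1(j/n) = \frac{2}{n}\sum_t x_t \cos(2\pi t j/n)$ and $\beta_2$ its sine analogue, $P(j/n) = \frac{4}{n^2}|X_j|^2$, where $X_j$ is the $j$-th FFT coefficient.

```python
import numpy as np


def periodogram(y):
    """Harmonic frequencies j/n (j >= 1) and P(j/n) = beta_1^2 + beta_2^2.

    P(j/n) = (4 / n^2) * |FFT_j|^2 for 0 < j < n/2 (at j = n/2 this overstates P by 4x).
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    coeffs = np.fft.rfft(y - y.mean())      # FFT of the mean-removed series
    freqs = np.fft.rfftfreq(n)              # 0, 1/n, 2/n, ..., (n//2)/n
    power = 4.0 * np.abs(coeffs) ** 2 / n ** 2
    return freqs[1:], power[1:]             # drop the zero frequency


def dominant_period(y):
    """Period, in time steps, of the frequency with the largest periodogram value."""
    freqs, power = periodogram(y)
    return 1.0 / freqs[np.argmax(power)]


t = np.arange(240)
print(dominant_period(np.sin(2 * np.pi * t / 12)))
```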

- 27. Example: daily minimum temperatures in Melbourne, Australia, 1981-1990. [Figure: the series (Temperature vs. Date), its autocorrelation plot (lags 0 to 3,500), and its periodogram (P vs. f, f from 0 to 0.02)]

- 28. Seasonality with Python and R

- 29. Trend: The process mean can change over time. There are two types of trends: deterministic and stochastic.

- 30. Deterministic vs. Stochastic Trends: The two types are distinguished by how they are eliminated. Stochastic trends (difference-stationary): the mean trend is stochastic; it is eliminated by differencing and detected via the Augmented Dickey-Fuller test. Deterministic trends (trend-stationary): the mean trend is deterministic; it is eliminated by detrending and detected via the Cox-Stuart test.
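For the stochastic-trend side, Python users can reach for statsmodels' `adfuller`. The Cox-Stuart test is simple enough to sketch with the standard library alone: pair each value in the first half with its counterpart in the second half, drop ties, and run a two-sided sign test against Binomial(m, 0.5). The function below is an illustrative implementation written for this example, not the tutorial's.

```python
import math


def cox_stuart(x):
    """Two-sided Cox-Stuart sign test for a monotonic trend.

    Returns (number_of_increases, number_of_nonzero_pairs, p_value).
    For odd lengths the middle observation is skipped.
    """
    n = len(x)
    offset = (n + 1) // 2
    diffs = [x[i + offset] - x[i] for i in range(n - offset)]
    diffs = [d for d in diffs if d != 0]          # ties carry no sign information
    m = len(diffs)
    if m == 0:
        return 0, 0, 1.0
    increases = sum(1 for d in diffs if d > 0)

    def binom_cdf(k):
        # P(X <= k) for X ~ Binomial(m, 0.5); k = -1 gives 0.
        return sum(math.comb(m, i) for i in range(k + 1)) / 2 ** m

    p_low = binom_cdf(increases)                  # P(X <= observed)
    p_high = 1.0 - binom_cdf(increases - 1)       # P(X >= observed)
    return increases, m, min(1.0, 2.0 * min(p_low, p_high))


print(cox_stuart([0.5 * t + (t % 3) for t in range(30)]))
```

A small p-value suggests a deterministic trend; a series with a stochastic trend can still trigger it, which is why the slide pairs it with the ADF test.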

- 31. Trend Detection with Python and R

- 32. Concept Drift: The underlying process can change over time. [Figure: example time series]

- 33. Bayesian Online Changepoint Detection with Python
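A compact sketch of Bayesian online changepoint detection, following the run-length recursion of Adams and MacKay (2007) in the simplest case we could write without extra dependencies. It assumes Gaussian observations with a known variance `varx`, a conjugate Normal prior (`mu0`, `var0`) on each segment's mean, and a constant `hazard`. All names and defaults are illustrative, not those of the tutorial's Python demo. The output is the most probable run length (steps since the last changepoint); a sudden drop signals concept drift.

```python
import numpy as np


def bocpd_map_run_lengths(data, hazard=0.01, mu0=0.0, var0=100.0, varx=1.0):
    """Most probable run length after each observation (Gaussian, known variance)."""
    log_r = np.array([0.0])        # log P(run length = 0) before any data
    mu = np.array([mu0])           # posterior mean of the segment mean, per run length
    var = np.array([var0])         # posterior variance of the segment mean, per run length
    map_run_lengths = []
    for x in data:
        # Log predictive density of x under each run length's posterior.
        pred_var = var + varx
        log_pred = -0.5 * (np.log(2.0 * np.pi * pred_var) + (x - mu) ** 2 / pred_var)
        # Growth: the current segment continues, so run length r becomes r + 1.
        log_growth = log_r + log_pred + np.log(1.0 - hazard)
        # Changepoint: mass from every run length collapses to run length 0.
        log_cp = np.logaddexp.reduce(log_r + log_pred + np.log(hazard))
        log_r = np.append(log_cp, log_growth)
        log_r -= np.logaddexp.reduce(log_r)          # normalize
        # Conjugate Normal update for continuing runs; run length 0 restarts at the prior.
        new_var = 1.0 / (1.0 / var + 1.0 / varx)
        new_mu = new_var * (mu / var + x / varx)
        mu = np.append(mu0, new_mu)
        var = np.append(var0, new_var)
        map_run_lengths.append(int(np.argmax(log_r)))
    return map_run_lengths


data = [0.0] * 50 + [10.0] * 50    # the mean jumps at index 50
run_lengths = bocpd_map_run_lengths(data)
print(run_lengths[48:53])
```

The run-length vector grows by one entry per step, so memory and time are quadratic in the series length; practical implementations prune low-probability run lengths.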

- 34. Missing Time Steps [Figure: example time series, x-axis 0 to 8,000, values 60 to 85]

- 35. Data Resources: Numenta Anomaly Benchmark repository: https://github.com/numenta/NAB/tree/master/data (annotation instructions: https://drive.google.com/file/d/0B1_XUjaAXeV3YlgwRXdsb3Voa1k/view), UCR Time Series Classification Archive: https://www.cs.ucr.edu/~eamonn/time_series_data/, Time Series Data Library: https://pkg.yangzhuoranyang.com/tsdl/, Kaggle: https://www.kaggle.com/datasets?tagids=6618

- 36. Time Series Modeling for Anomaly Detection

- 37. Nonstationarity: Differencing. First-order difference to remove trend: $[\Delta y](t) = y(t) - y(t-1)$. Seasonal differencing with period $s$: $[\Delta_s y](t) = y(t) - y(t-s)$. [Figure: the example signal from 02-24 to 02-28 before and after differencing]
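Both difference operators are one slice in NumPy. The sketch below (`difference` is an illustrative helper) removes a period-4 pattern with a seasonal difference and then the remaining constant with a first-order difference.

```python
import numpy as np


def difference(y, lag=1):
    """[Delta_lag y](t) = y(t) - y(t - lag); the output is `lag` points shorter.

    lag=1 gives the first-order difference; lag=s gives the seasonal difference.
    """
    if lag < 1:
        raise ValueError("lag must be a positive integer")
    y = np.asarray(y, dtype=float)
    return y[lag:] - y[:-lag]


# Linear trend (slope 2) plus a repeating pattern with period 4.
t = np.arange(40)
y = 2.0 * t + np.array([1.0, -1.0, 2.0, -2.0])[t % 4]
print(difference(y, lag=4)[:5])               # [8. 8. 8. 8. 8.]: pattern gone, 2 * 4 left
print(difference(difference(y, lag=4))[:5])   # [0. 0. 0. 0. 0.]: trend gone too
```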

- 38. STL: Local regression with LOESS. $y(t) = S(t) + T(t) + \epsilon(t)$: decompose into season and trend. LOESS smoothing can interpolate missing data. The residual should look more stationary. STL: A seasonal-trend decomposition by Cleveland, Robert B., et al.
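STL itself iterates LOESS smoothers and is available as `stl()` in R and as the `STL` class in statsmodels. To show the additive split $y = S + T + \epsilon$ without those dependencies, the sketch below deliberately swaps in the simpler classical moving-average decomposition. It is not STL: it has no robustness iterations and cannot interpolate missing data.

```python
import numpy as np


def classical_decompose(y, period):
    """Additive decomposition y = trend + seasonal + resid (classical, NOT STL).

    Trend: centered moving average over one period (a 2 x period average when
    the period is even). Seasonal: mean detrended value at each phase, shifted
    to sum to zero. Trend and resid are NaN for the first and last half-window.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    if period % 2 == 0:
        weights = np.r_[0.5, np.ones(period - 1), 0.5] / period
    else:
        weights = np.ones(period) / period
    half = len(weights) // 2
    trend = np.convolve(y, weights, mode="same")
    trend[:half] = np.nan                       # incomplete windows at the edges
    trend[n - half:] = np.nan
    detrended = y - trend
    phase_means = np.array([np.nanmean(detrended[i::period]) for i in range(period)])
    phase_means -= phase_means.mean()           # seasonal effects sum to zero
    seasonal = np.tile(phase_means, n // period + 1)[:n]
    return trend, seasonal, y - trend - seasonal


t = np.arange(40)
y = 0.1 * t + np.array([1.0, -1.0, 2.0, -2.0])[t % 4]
trend, seasonal, resid = classical_decompose(y, period=4)
print(np.round(seasonal[:4], 6), np.nanmax(np.abs(resid)))
```

The residual series is what a detector would then score; here it is essentially zero because the input has no noise.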

- 39. STL with R

- 41. ARMA: A family of Gaussian models with temporal correlation: $\underbrace{y(t) - \sum_{i=1}^{p} \theta_i\, y(t-i)}_{\text{AR}} = \underbrace{\epsilon(t) + \sum_{j=1}^{q} \phi_j\, \epsilon(t-j)}_{\text{MA}}$. Autoregressive (AR): the value at time t is a linear combination of the p past values plus the current noise signal. Moving average (MA): the value at time t is a linear combination of the q past noise values plus the current noise.
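Fitting the MA part requires an iterative optimizer, so this sketch keeps only the AR part and fits the $\theta_i$ by ordinary least squares. It has no intercept, so it assumes a zero-mean series. The residuals it returns are one-step-ahead prediction errors $\epsilon(t)$, the kind of forecasting error that is later turned into an anomaly score. `fit_ar` is a hypothetical helper, not statsmodels' API.

```python
import numpy as np


def fit_ar(y, p):
    """Least-squares AR(p) fit without intercept (assumes a zero-mean series).

    Returns (theta, residuals), where y(t) ~ sum_i theta[i-1] * y(t - i) and the
    residuals are the one-step-ahead prediction errors for t = p, ..., n - 1.
    """
    y = np.asarray(y, dtype=float)
    n = len(y)
    # Column i-1 holds the lag-i values y(t - i) for every target time t >= p.
    X = np.column_stack([y[p - i:n - i] for i in range(1, p + 1)])
    target = y[p:]
    theta, *_ = np.linalg.lstsq(X, target, rcond=None)
    return theta, target - X @ theta


# Simulate y(t) = 0.6 y(t-1) - 0.3 y(t-2) + noise, then recover the coefficients.
rng = np.random.default_rng(0)
noise = rng.standard_normal(20000)
y = np.zeros_like(noise)
for t in range(2, len(y)):
    y[t] = 0.6 * y[t - 1] - 0.3 * y[t - 2] + noise[t]
theta, resid = fit_ar(y, p=2)
print(np.round(theta, 2))
```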

- 42. ARIMA and SARIMA: ARIMA(p,d,q) is ARMA on the differenced signal. SARIMA(p,d,q,P,D,Q,s) extends ARIMA to incorporate longer-term seasonal correlation.

- 44. Facebook Prophet uses an additive model: $y(t) = g(t) + s(t) + h(t) + \epsilon_t$, where g(t) is a linear or logistic growth trend, s(t) is the yearly/weekly seasonal component, and h(t) captures holiday effects from a user-provided list of holidays. Forecasting at Scale by Taylor, Sean J., and Benjamin Letham.
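The additive idea can be seen without the Prophet library. This hypothetical sketch fits $y(t) = g(t) + s(t) + \epsilon_t$ by least squares, using a linear $g(t)$ and a Fourier series for $s(t)$ with an assumed `period` and `order`. Prophet also represents seasonality with Fourier terms, but it adds trend changepoints, holidays $h(t)$, priors, and uncertainty intervals, none of which appear here. The residual $y - \hat{y}$ is the forecasting error that gets scored.

```python
import numpy as np


def fit_additive(t, y, period, order=3):
    """Least-squares fit of a linear trend plus a Fourier seasonal component.

    Returns (coefficients, fitted values). Columns: intercept, slope, then
    cos/sin pairs for harmonics k = 1..order of the given period.
    """
    t = np.asarray(t, dtype=float)
    columns = [np.ones_like(t), t]                     # g(t) = a + b * t
    for k in range(1, order + 1):                      # s(t) as a Fourier series
        angle = 2.0 * np.pi * k * t / period
        columns += [np.cos(angle), np.sin(angle)]
    X = np.column_stack(columns)
    coef, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coef, X @ coef


t = np.arange(140)
y = 2.0 + 0.5 * t + 3.0 * np.sin(2 * np.pi * t / 7)
y[100] += 20.0                                         # injected anomaly
coef, fitted = fit_additive(t, y, period=7)
print(int(np.argmax(np.abs(y - fitted))))              # 100
```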

- 45. Facebook Prophet with Python

- 46. What is a Gaussian Process? A Gaussian distribution over functions consistent with our data: $p(f(x)) = \mathcal{N}(\mu(x), K(x, x))$. $\mu(x)$ is the mean function¹ and $K(x, x)$ is the covariance matrix. $K(x, x)$ gives us power of expression... ¹ Usually flat functions are used here.

- 47. Covariance Matrix: Assuming we have n points, the covariance matrix² is $K(x, x) = \begin{pmatrix} k(x_1, x_1) & \cdots & k(x_1, x_n) \\ \vdots & \ddots & \vdots \\ k(x_n, x_1) & \cdots & k(x_n, x_n) \end{pmatrix}$, where $k$ is the covariance kernel function. If my data has... Stationarity → $k(x, x') = \sigma^2 \exp\left(-\frac{(x - x')^2}{2\ell^2}\right)$. Periodicity → $k(x, x') = \sigma^2 \exp\left(-\frac{2 \sin^2(\pi |x - x'| / p)}{\ell^2}\right)$. Trend → $k(x, x') = \sigma_b^2 + \sigma_v^2 (x - c)(x' - c)$. ² K has to be a positive semidefinite matrix.
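The three kernels translate directly into NumPy. This sketch uses illustrative default hyperparameters ($\sigma$, $\ell$, $p$, $\sigma_b$, $\sigma_v$, $c$) and checks the positive-semidefinite requirement numerically. Sums of valid kernels are valid kernels, which is how a model can capture stationarity, seasonality, and trend at once.

```python
import numpy as np


def se_kernel(a, b, sigma=1.0, length=1.0):
    """Squared-exponential kernel: sigma^2 exp(-(x - x')^2 / (2 l^2))."""
    d = a[:, None] - b[None, :]
    return sigma ** 2 * np.exp(-d ** 2 / (2.0 * length ** 2))


def periodic_kernel(a, b, sigma=1.0, length=1.0, period=12.0):
    """Periodic kernel: sigma^2 exp(-2 sin^2(pi |x - x'| / p) / l^2)."""
    d = np.abs(a[:, None] - b[None, :])
    return sigma ** 2 * np.exp(-2.0 * np.sin(np.pi * d / period) ** 2 / length ** 2)


def linear_kernel(a, b, sigma_b=1.0, sigma_v=1.0, c=0.0):
    """Linear (trend) kernel: sigma_b^2 + sigma_v^2 (x - c)(x' - c)."""
    return sigma_b ** 2 + sigma_v ** 2 * (a[:, None] - c) * (b[None, :] - c)


x = np.linspace(0.0, 48.0, 40)
K = se_kernel(x, x, length=5.0) + periodic_kernel(x, x) + 0.01 * linear_kernel(x, x)
# Positive semidefinite up to floating-point error:
print(np.linalg.eigvalsh(K).min() >= -1e-8)
```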

- 48. Prediction: Once I have my mean and covariance functions, I can predict the future!³ (1) Given $x_*$, I want to know what $f(x_*)$ is. (2) We just select a point from $p(f(x_*)) = \mathcal{N}(m_*, C_*)$, where $m_* = \mu(x_*) + K(x_*, x) K(x, x)^{-1} (f(x) - \mu(x))$ and $C_* = K(x_*, x_*) - K(x_*, x) K(x, x)^{-1} K(x_*, x)^T$. Time complexity is $O(n^3)$ because we have to find the inverse of K. ³ Or interpolate.
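A sketch of the $m_*$ and $C_*$ equations with a flat (zero) mean function and a squared-exponential kernel. A Cholesky factorization replaces the explicit inverse of $K(x, x)$. It is still $O(n^3)$ but more stable numerically. The small `jitter` term on the diagonal is an assumption added for stability; it acts like a tiny observation noise.

```python
import numpy as np


def se_kernel(a, b, sigma=1.0, length=1.0):
    d = a[:, None] - b[None, :]
    return sigma ** 2 * np.exp(-d ** 2 / (2.0 * length ** 2))


def gp_predict(x, fx, x_star, jitter=1e-6):
    """Posterior mean m_* and covariance C_* of f(x_*) given f(x), zero mean function."""
    K = se_kernel(x, x) + jitter * np.eye(len(x))
    K_s = se_kernel(x_star, x)                              # K(x_*, x)
    K_ss = se_kernel(x_star, x_star)                        # K(x_*, x_*)
    L = np.linalg.cholesky(K)                               # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, fx))    # K^{-1} f(x)
    m_star = K_s @ alpha
    v = np.linalg.solve(L, K_s.T)                           # L^{-1} K(x_*, x)^T
    C_star = K_ss - v.T @ v                                 # K_ss - K_s K^{-1} K_s^T
    return m_star, C_star


x = np.linspace(0.0, 5.0, 20)
m, C = gp_predict(x, np.sin(x), np.array([2.6, 7.0]))
print(m, np.sqrt(np.clip(np.diag(C), 0.0, None)))
```

Inside the data range (2.6) the mean tracks $\sin$ closely with tiny variance. Beyond it (7.0) the mean drifts back toward the flat prior and the variance grows, which is what makes GP forecast errors usable as anomaly scores.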

- 49. Gaussian Processes with Python

- 50. Recurrent Neural Network: Given a window of $n_{lag}$ time steps in the past, predict a window of $n_{seq}$ time steps in the future. The anomaly score is an average of the prediction error. Adaptive: uses an online gradient-based optimizer, built to deal with concept drift. The choice of $n_{seq}$ can greatly affect the false positive rate. [Illustration from Saurav et al. '18, "Online Anomaly Detection with Concept Drift Adaptation using RNNs," CoDS-COMAD '18, January 11-13, 2018, Goa, India: the GRU layer equations (reset gate, update gate, proposed state, hidden state) and Figure 1, "Steps in Online RNN-AD approach": at each time step, prediction using the RNN, anomaly score computation, and RNN updation using BPTT.]
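The RNN itself is beyond a short example. The framing from the slide ($n_{lag}$ past steps in, $n_{seq}$ future steps out) can be sketched as a hypothetical windowing helper that builds training pairs for any sequence model. No RNN library API is assumed.

```python
import numpy as np


def make_windows(series, n_lag, n_seq):
    """Split a series into (past, future) pairs for a sequence-to-sequence forecaster.

    X[i] holds n_lag consecutive values and Y[i] the n_seq values that follow them.
    """
    series = np.asarray(series, dtype=float)
    n_pairs = len(series) - n_lag - n_seq + 1
    if n_pairs <= 0:
        raise ValueError("series too short for the requested n_lag and n_seq")
    X = np.stack([series[i:i + n_lag] for i in range(n_pairs)])
    Y = np.stack([series[i + n_lag:i + n_lag + n_seq] for i in range(n_pairs)])
    return X, Y


X, Y = make_windows(np.arange(10), n_lag=3, n_seq=2)
print(X[0], Y[0])        # [0. 1. 2.] [3. 4.]
print(X.shape, Y.shape)  # (6, 3) (6, 2)
```

Each time step appears in up to $n_{seq}$ target windows, so a larger $n_{seq}$ averages more prediction errors per point. This is one way its choice can shift the false positive rate.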

- 51. RNNs with Python

- 53. Anomaly Scores: Anomaly detectors are adapted to output a score between 0 and 1. STL: apply the Q-function to the residuals. SARIMA, Prophet, Gaussian processes: apply the Q-function to the forecasting error. RNN: apply the Q-function to the unnormalized anomaly score.
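The slide does not spell out how the Q-function (the standard normal tail probability, $Q(x) = P(Z > x)$) is applied. One plausible reading, sketched here as an assumption: standardize each residual or forecasting error and report $1 - Q(|z|)$, which lies in $[0.5, 1)$. For brevity the mean and standard deviation come from the whole error series; an online detector would estimate them from past errors only.

```python
import math

import numpy as np


def q_function(x):
    """Standard normal tail probability Q(x) = P(Z > x)."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))


def q_scores(errors):
    """Map residuals or forecast errors to [0.5, 1) via 1 - Q(|z|)."""
    e = np.asarray(errors, dtype=float)
    mu, sigma = e.mean(), max(e.std(), 1e-12)   # guard against constant errors
    return np.array([1.0 - q_function(abs(v - mu) / sigma) for v in e])


print(q_function(0.0))                                   # 0.5
print(int(np.argmax(q_scores([0.0, 0.1, -0.1, 0.0, 3.0]))))  # 4
```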

- 54. Numenta Anomaly Benchmark Scoring: For every predicted anomaly y, its score σ(y) is determined by its position relative to its containing window or an immediately preceding window. For every ground truth anomaly, construct an anomaly window with the anomaly in the center; the window length is 0.1 × (length of time series) / (# of true anomalies). [Illustration from Lavin & Ahmad '15. Fig. 2: shaded red regions are the anomaly windows for a data file; the shaded purple region is the first 15% of the file, a probationary period during which the detector may learn the data patterns without being tested. If there are multiple detections within a window, only the earliest is credited as a true positive; a sigmoidal scoring function gives higher scores to earlier true positives and negative scores to detections outside the window (false positives). A separate score is computed for each application profile, which weights TPs, FPs, FNs, and TNs differently. Fig. 3: scoring example for one anomaly window. An FP preceding the window contributes −1.0; of the two detections inside the window, only the earliest TP (0.9999) counts; an FP just after the window contributes −0.8093, and one far after it contributes −1.0; TNs contribute nothing. The NAB score is $-1.0A_{FP} + 0.9999A_{TP} - 0.8093A_{FP} - 1.0A_{FP}$, which with the standard application profile gives 0.6909.]

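- 55. Numenta Anomaly Benchmark Scoring (Continued): The raw score is computed as $S_d = \sum_{y \in Y_d} \sigma(y) + A_{FN} f_d$, where $A_{FN}$ is the cost of false negatives and $f_d$ is the number of anomaly windows with no detection. Then rescale to get a summary score: $100 \times \frac{S - S_{null}}{S_{perfect} - S_{null}}$. Choose the threshold that maximizes the score.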
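The rescaling is simple arithmetic. Here $S_{null}$ and $S_{perfect}$ are assumed to be the raw scores of a detector that flags nothing and of a perfect detector. The threshold search takes a caller-supplied, hypothetical `raw_score_at` function, since computing $S$ itself needs the full windowed sigmoid scoring.

```python
def nab_normalized_score(raw, raw_null, raw_perfect):
    """Rescale a raw score so the null detector maps to 0 and a perfect detector to 100."""
    return 100.0 * (raw - raw_null) / (raw_perfect - raw_null)


def best_threshold(raw_score_at, thresholds):
    """Threshold whose raw score is highest.

    `raw_score_at(th)` is a hypothetical callback returning the raw score S when
    anomaly scores >= th are counted as detections.
    """
    return max(thresholds, key=raw_score_at)


print(nab_normalized_score(raw=2.0, raw_null=-4.0, raw_perfect=8.0))  # 50.0
```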

- 56. Window-based F-score: Segment the series into nonoverlapping windows. A window is anomalous if it contains an anomaly. Treat this as binary classification and report F1. Choose the threshold that minimizes the number of errors; prefer detection in case of a tie.
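A sketch of the window-based F-score under stated assumptions: windows are consecutive blocks of `window` points (the last may be shorter), and "prefer detection in case of a tie" is read as choosing the lowest tied threshold, the one that flags the most. Function names are illustrative.

```python
import numpy as np


def window_flags(point_flags, window):
    """Collapse per-point flags into per-window flags (True if any point is flagged)."""
    flags = np.asarray(point_flags, dtype=bool)
    n_windows = -(-len(flags) // window)        # ceiling division
    return np.array([flags[i * window:(i + 1) * window].any() for i in range(n_windows)])


def windowed_f1(true_points, pred_points, window):
    truth, pred = window_flags(true_points, window), window_flags(pred_points, window)
    tp = int(np.sum(truth & pred))
    fp = int(np.sum(~truth & pred))
    fn = int(np.sum(truth & ~pred))
    return 0.0 if tp == 0 else 2 * tp / (2 * tp + fp + fn)


def choose_threshold(scores, true_points, window, thresholds):
    """Threshold with the fewest window errors; ties go to the lowest threshold."""
    truth = window_flags(true_points, window)

    def n_errors(th):
        pred = window_flags(np.asarray(scores) >= th, window)
        return int(np.sum(truth != pred))

    return min(sorted(thresholds), key=n_errors)  # min keeps the first of equal keys


truth = np.zeros(20, dtype=bool)
truth[[3, 17]] = True
scores = np.full(20, 0.1)
scores[3], scores[17] = 0.9, 0.8
th = choose_threshold(scores, truth, window=5, thresholds=[0.05, 0.5, 0.85, 0.95])
print(th, windowed_f1(truth, scores >= th, window=5))  # 0.5 1.0
```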

- 57. Results

- 58. Characteristic Corpora (https://github.com/numenta/NAB)

| Characteristic | Datasets | Samples | Ground truth anomalies | Missing samples |
|---|---|---|---|---|
| Seasonality | 10 | 63,336 | 23 | |
| Trend | 10 | 31,596 | 17 | |
| Concept Drift | 10 | 32,402 | 27 | |
| Missing Time Steps | 10 | 33,245 | 22 | 1,254 |

- 59. Example

- 60. Which methods are promising given a characteristic? Seasonality and trend: STL, SARIMA, Prophet. Concept drift: requires more complex methods such as HTMs (Hierarchical Temporal Memory). Missing time steps: performance varies based on the evaluation strategy. Area for future work: more methods are needed!

- 61. Which evaluation strategy should I use? The F-score scheme is more restrictive. NAB scores leave more room for false positives because they reward early detection. Which evaluation metric to use depends entirely on the needs of the user.

- 63. Human-in-the-Loop: It is not advisable to completely remove the human element. Predicted anomalies are given to the user to annotate (is the predicted anomaly truly an anomaly?). Based on the user's decision: Idea one: the parameters for that method can be tuned to reduce the error. Idea two: the anomaly score is tuned to reduce the error.

- 64. Concept One: Avoid predicted anomaly clusters. [Figure: example time series, x-axis 0 to 8,000, values 60 to 85] Weight the anomaly scores after a prediction by multiplying them by a sigmoid function, $\operatorname{erf}(x) = \frac{1}{\sqrt{\pi}} \int_{-x}^{x} e^{-t^2}\,dt$, to briefly reduce the anomaly scores of clustered anomalies.
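A hypothetical sketch of this idea. After each detection at time $t_0$, later scores are multiplied by $\operatorname{erf}((t - t_0)/\text{scale})$. The weight is near 0 right after the detection and climbs back toward 1 within a few `scale` steps. The ramp parameterization, `scale`, and `threshold` are assumptions; the slide only specifies multiplying by an erf-shaped sigmoid.

```python
import math


def dampen_after_detections(scores, threshold, scale=10.0):
    """Multiply scores by an erf ramp that restarts at every detection.

    Right after a detection at t0 the weight erf((t - t0) / scale) is near 0,
    so neighbouring high scores are suppressed; erf(2) is already about 0.995.
    """
    adjusted = []
    last_detection = None
    for t, score in enumerate(scores):
        weight = 1.0 if last_detection is None else math.erf((t - last_detection) / scale)
        value = score * weight
        adjusted.append(value)
        if value >= threshold:
            last_detection = t
    return adjusted


raw = [0.1] * 200
raw[50:54] = [0.99, 0.98, 0.97, 0.96]   # a cluster of high scores
raw[150] = 0.95                          # a separate, later anomaly
out = dampen_after_detections(raw, threshold=0.9)
print([t for t, s in enumerate(out) if s >= 0.9])   # [50, 150]
```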

- 65. Concept Two: If users disagree with a prediction, similar instances should not be detected. [Figure: two example subsequences, time steps 60 to 200 and 520 to 660] (1) Use MASS⁴ to find similar subsequences (motifs). (2) Reduce the anomaly scores corresponding to these motifs by multiplying them by a sigmoid function $y = \frac{1}{1 + e^{-kx + b}}$, where $b = \ln\left(\frac{1 - \mathit{min\_weight}}{\mathit{min\_weight}}\right)$ and $k = \frac{\ln(\;) - b}{-\mathit{max\_distance}}$; min_weight is the minimum weight applied to the anomaly scores and max_distance is the maximum discord distance from the query. ⁴ Mueen's Algorithm for Similarity Search [14]
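A sketch of both steps, not the official MASS code. `distance_profile` computes the z-normalized Euclidean distance from a query to every subsequence using an FFT sliding dot product, the core idea behind MASS, via $d^2 = 2m(1 - \rho)$. It assumes no subsequence is constant. `motif_weight` is the slide's sigmoid with $b$ chosen so the weight at distance 0 equals `min_weight`. Because the slide's formula for $k$ is incomplete, $k$ is passed in as a hypothetical parameter.

```python
import math

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def distance_profile(query, series):
    """z-normalized Euclidean distance between `query` and every subsequence of `series`."""
    q = np.asarray(query, dtype=float)
    ts = np.asarray(series, dtype=float)
    m, n = len(q), len(ts)
    # Sliding dot products QT[i] = sum_l ts[i + l] * q[l], via one FFT convolution.
    conv = np.fft.irfft(np.fft.rfft(ts, 2 * n) * np.fft.rfft(q[::-1], 2 * n), 2 * n)
    qt = conv[m - 1:n]
    windows = sliding_window_view(ts, m)
    mu_t, sd_t = windows.mean(axis=1), windows.std(axis=1)
    corr = (qt - m * q.mean() * mu_t) / (m * q.std() * sd_t)   # Pearson correlation
    return np.sqrt(np.clip(2.0 * m * (1.0 - corr), 0.0, None))


def motif_weight(distance, min_weight, k):
    """Sigmoid y = 1 / (1 + exp(-k * x + b)), with b set so y(0) = min_weight."""
    b = math.log((1.0 - min_weight) / min_weight)
    return 1.0 / (1.0 + math.exp(-k * distance + b))


rng = np.random.default_rng(1)
series = np.cumsum(rng.standard_normal(300))
profile = distance_profile(series[120:150], series)
print(int(np.argmin(profile)))                     # 120: the query matches itself
print(motif_weight(0.0, min_weight=0.2, k=1.0))    # approximately 0.2
```

Scores at subsequences with small distance to a user-rejected anomaly would be multiplied by `motif_weight`, so near-identical motifs are damped most.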

- 66. [Figure: four time series plots, x-axis 0 to 8,000, values 60 to 85]

- 69. In Summary: The existence of an anomaly detection method that is optimal for all domains is a myth. Determine the characteristics present in the data to narrow down the choice of anomaly detection methods. Incorporate user feedback on predicted outliers by utilizing subsequence similarity search, reducing the need for annotation while also increasing evaluation scores.