Sanath Pabba Hadoop Resume 1.0

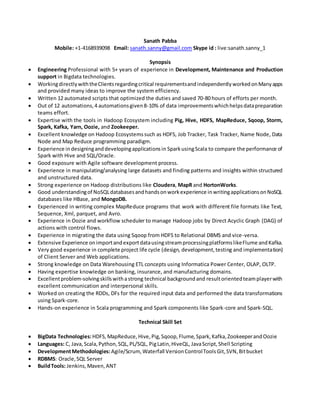

Sanath Pabba

Mobile: +1-4168939098 | Email: sanath.sanny@gmail.com | Skype ID: live:sanath.sanny_1

Synopsis

- Engineering professional with 5+ years of experience in development, maintenance, and production support in Big Data technologies.
- Worked directly with clients on critical requirements, independently worked on many applications, and proposed several ideas to improve system efficiency.
- Wrote 12 automated scripts that streamlined routine duties and saved 70-80 hours of effort per month. Four of these 12 automations delivered 8-10% data improvements, which reduced the data preparation team's effort.
- Expertise with Hadoop ecosystem tools including Pig, Hive, HDFS, MapReduce, Sqoop, Storm, Spark, Kafka, YARN, Oozie, and ZooKeeper.
- Excellent knowledge of Hadoop ecosystem components such as HDFS, JobTracker, TaskTracker, NameNode, and DataNode, and of the MapReduce programming paradigm.
- Experience designing and developing Spark applications in Scala to compare the performance of Spark with Hive and SQL/Oracle.
- Good exposure to the Agile software development process.
- Experience manipulating and analysing large datasets and finding patterns and insights within structured and unstructured data.
- Strong experience with Hadoop distributions such as Cloudera, MapR, and Hortonworks.
- Good understanding of NoSQL databases and hands-on experience writing applications on NoSQL databases such as HBase and MongoDB.
- Experienced in writing complex MapReduce programs that work with different file formats such as Text, SequenceFile, XML, Parquet, and Avro.
- Experience with the Oozie workflow scheduler to manage Hadoop jobs as Directed Acyclic Graphs (DAGs) of actions with control flows.
- Experience migrating data with Sqoop from HDFS to relational DBMSs and vice versa.
- Extensive experience importing and exporting data using streaming platforms such as Flume and Kafka.
- Very good experience in the complete project life cycle (design, development, testing, and implementation) of client-server and web applications.
- Strong knowledge of data warehousing and ETL concepts using Informatica PowerCenter, OLAP, and OLTP.
- Domain expertise in banking, insurance, and manufacturing.
- Excellent problem-solving skills with a strong technical background; a results-oriented team player with excellent communication and interpersonal skills.
- Created RDDs and DataFrames for the required input data and performed data transformations using Spark Core.
- Hands-on experience in Scala programming and Spark components such as Spark Core and Spark SQL.

Technical Skill Set

- Big Data Technologies: HDFS, MapReduce, Hive, Pig, Sqoop, Flume, Spark, Kafka, ZooKeeper, Oozie
- Languages: C, Java, Scala, Python, SQL, PL/SQL, Pig Latin, HiveQL, JavaScript, Shell Scripting
- Development Methodologies: Agile/Scrum, Waterfall
- Version Control Tools: Git, SVN, Bitbucket
- RDBMS: Oracle, SQL Server
- Build Tools: Jenkins, Maven, ANT

- Business Intelligence Tools: Tableau, Splunk, QlikView, Alteryx
- Tools: IntelliJ IDE
- Cloud Environment: AWS
- Scripting: Unix shell scripting, Python scripting
- Scheduling: Maestro

Career Highlights (Proficiency Forte)

- Worked extensively on extracting, transforming, and loading data from various sources such as DB2, Oracle, and flat files.
- Strong skills in data requirement analysis and data mapping for ETL processes.
- Well versed in developing SQL queries, unions, and multi-table joins.
- Well versed in UNIX commands and shell scripting; developed several scripts to reduce manual intervention as part of the job monitoring automation process.

Work Experience

- Sep 2019 to present: Walmart Canada, Customer Experience Specialist (part-time).
- Jan 2018 to Mar 2019: Infosys Limited, Senior System Engineer.
- Apr 2015 to Jan 2018: NTT Data Global Delivery Services, Application Software Development Consultant.

Project Details

- Company: Infosys Limited
- Project: Enterprise Business Solution (MetLife Inc.)
- Environment: Hadoop, Spark, Spark SQL, Scala, SQL Server, shell scripting
- Scope: EBS is a project in which Informatica pulls data from SFDC and sends it to Big Data at the RDZ. The Big Data process starts when the trigger file, control file, and data files are received, and all files go through validation checks. After all transformations are done, the data is stored in Hive tables pointing to HDFS locations. The data is synced to Big SQL, and downstream processing is done by the QlikView team.
- Roles & Responsibilities:
  - Wrote Sqoop jobs that load data from DBMSs into Hadoop environments.
  - Prepared code that invokes Spark jobs written in Scala covering data loads, pre-validations, data preparation, and post-validations.
  - Prepared shell-script automation that collects data utilization across the cluster and notifies admins every hour, so the admin team no longer needs routine monitoring checks.

- Company: Infosys Limited
- Project: BluePrism (MetLife Inc.)
- Environment: Spark, Spark SQL, Scala, Sqoop, SQL Server, shell scripting
- Scope: BluePrism is a source application with SQL Server as its database. Big Data extracts the data from the BluePrism environments, merges two sources into one, and loads the data into the Hive database. Big Data also archives the data into corresponding history tables, either monthly or ad hoc, based on a trigger file received from BluePrism. This is a weekly extract from SQL Server using Sqoop; the data is then loaded through Scala. Jobs are scheduled in Maestro.
- Roles & Responsibilities:
  - Prepared data loading shell scripts that invoke Sqoop jobs.
  - Implemented data merging functionality that pulls data from various environments.
  - Developed scripts that back up data in Avro format.

- Company: Infosys Limited
- Project: Gross Processing Margin Reports (MassMutual)
- Environment: Spark, Spark SQL, Scala, Sqoop, SQL Server, shell scripting
- Scope: In GPM Reports, we receive the input .csv file from the business, and based on the client request we generate 6 reports. We receive a trigger file and a data file for each report. A shell script validates the trigger files, the input file, and their paths in Linux and HDFS. If every validation succeeds, it invokes the Hive script that generates the output file and places it in Linux, and we then append the data to Hive tables based on that output file. As part of the project, the Pig scripts were migrated to Spark scripts in Scala, so that report generation is done by Spark and the output is stored in a Linux directory.
- Roles & Responsibilities:
  - Prepared data using HiveQL and Spark RDDs based on business needs.
  - Using Hadoop, loaded and prepared the data, implemented filters to remove unwanted and uncertain fields, and merged all 6 reports from various teams.
  - Implemented 8 pre-validation rules and 7 post-validation rules covering data counts, required fields, and needed changes; as part of post-validation, the data is moved to the HDFS archive path.

- Company: NTT Data Global Delivery Services
- Project: Compliance Apps (National Life Group)
- Environment: Spark, Spark SQL, Scala, SQL Server, shell scripting, Pig
- Scope: Compliance Apps is a group of nine admin systems (AnnuityHost, ERL, MRPS, CDI, Smartapp, WMA, VRPS, PMACS, SBR). The process loads the data files for the nine admin systems into HDFS based on the trigger files received. There are three types of load: base load, full load, and delta load. To build the workflow that loads the data files into HDFS locations and Hive tables, we create Hive tables in an optimized compressed format and load the data into them, write a Hive script for the full load, and write a shell script to create the workflow. We use Pig/Spark for the delta loads and a shell script that invokes Hive for the full load and history processing. The jobs are then scheduled in Maestro for the daily run. Initially, the delta loads used Pig scripts.

- Company: NTT Data Global Delivery Services
- Project: Manufacturing Cost Walk Analysis (Honeywell)
- Environment: Sqoop, shell scripting, Hive
- Scope: The Manufacturing Cost Walk application stores information about the products manufactured by Honeywell. The client stored this data in SharePoint lists on a weekly basis, but handling the data in SharePoint was difficult because of long processing times. We therefore proposed a solution using Hive and Sqoop. Because their source files were generated as .csv and .xls files, we started importing the data into Hive and processing it based on their requirements.

Academia

- Completed post-graduation in Project Management from Loyalist College (Sep 2019 to May 2020).
- Completed a diploma in Artificial Intelligence and Data Visualization from the Indian Institute of Information Technology (Hyderabad) (Apr 2019 to Aug 2019).
- Completed graduation in Electronics and Communications under JNTU Hyderabad (Aug 2011 to May 2014).