Maxwell W Libbrecht - pomegranate: fast and flexible probabilistic modeling in python


PyData Seattle 2017


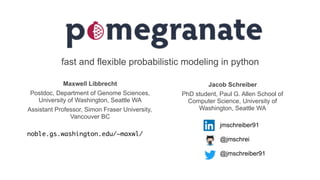

- 1. pomegranate: fast and flexible probabilistic modeling in Python. Jacob Schreiber (@jmschrei, @jmschreiber91), PhD student, Paul G. Allen School of Computer Science, University of Washington, Seattle WA. Maxwell Libbrecht, Postdoc, Department of Genome Sciences, University of Washington, Seattle WA; Assistant Professor, Simon Fraser University, Vancouver BC. noble.gs.washington.edu/~maxwl/


- 3. Follow along with the Jupyter tutorial: goo.gl/cbE7rs

- 4. What is probabilistic modeling? Tasks:
  • Sample from a distribution.
  • Learn parameters of a distribution from data.
  • Predict which distribution generated a data example.
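The three tasks can be sketched with nothing but the Python standard library. This is an illustration of the ideas only, not pomegranate code; the candidate names `low` and `high` and all parameter values are made up for the example.

```python
from statistics import NormalDist

# Task 1: sample from a distribution.
true_dist = NormalDist(mu=5.0, sigma=2.0)
samples = true_dist.samples(1000, seed=0)

# Task 2: learn parameters of a distribution from data.
fitted = NormalDist.from_samples(samples)

# Task 3: predict which distribution generated a data example
# by comparing the density of the example under each candidate.
candidates = {"low": NormalDist(0.0, 1.0), "high": fitted}

def predict(x):
    """Return the name of the candidate with the highest density at x."""
    return max(candidates, key=lambda name: candidates[name].pdf(x))

print(round(fitted.mean, 2), round(fitted.stdev, 2))
print(predict(0.3), predict(6.0))
```

The fitted parameters should land close to the generating values, and each test point is assigned to the candidate under which it is more likely.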

- 5. When should you use pomegranate? It depends on the complexity of the probabilistic modeling task, from simple to complex:
  • Simple tasks (logistic regression, sampling from a Gaussian distribution, cases where speed is not important): use scipy or scikit-learn.
  • Tasks in between: use pomegranate.
  • Complex tasks (distributions not implemented in pomegranate, problem-specific inference algorithms): use custom packages (in R or stand-alone).

- 6. When should you use pomegranate? Use pomegranate for probabilistic modeling tasks. For orthogonal tasks:
  • Scientific computing: use numpy, scipy
  • Non-probabilistic supervised learning (SVMs, neural networks): use scikit-learn
  • Visualizing probabilistic models: use matplotlib
  • Bayesian statistics: check out PyMC3

- 7. Overview: this talk
  • Overview
  • Major models/model stacks: 1. General Mixture Models 2. Hidden Markov Models 3. Bayesian Networks 4. Bayes Classifiers
  • Finale: train a mixture of HMMs in parallel

- 8. The API is common to all models. All models have these methods! All models composed of distributions (like GMM, HMM...) have these methods too!
  • model.log_probability(X) / model.probability(X)
  • model.sample()
  • model.fit(X, weights, inertia)
  • model.summarize(X, weights)
  • model.from_summaries(inertia)
  • model.predict(X)
  • model.predict_proba(X)
  • model.predict_log_proba(X)
  • Model.from_samples(X, weights)

- 9. Overview: supported models
  • Six main models: 1. Probability Distributions 2. General Mixture Models 3. Markov Chains 4. Hidden Markov Models 5. Bayes Classifiers / Naive Bayes 6. Bayesian Networks
  • Two helper models: 1. k-means++/kmeans|| 2. Factor Graphs

- 10. pomegranate supports many distributions
  • Univariate distributions: 1. UniformDistribution 2. BernoulliDistribution 3. NormalDistribution 4. LogNormalDistribution 5. ExponentialDistribution 6. BetaDistribution 7. GammaDistribution 8. DiscreteDistribution 9. PoissonDistribution
  • Kernel densities: 1. GaussianKernelDensity 2. UniformKernelDensity 3. TriangleKernelDensity
  • Multivariate distributions: 1. IndependentComponentsDistribution 2. MultivariateGaussianDistribution 3. DirichletDistribution 4. ConditionalProbabilityTable 5. JointProbabilityTable

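- 11. Models can be created from known values:

      from pomegranate import NormalDistribution, GaussianKernelDensity

      # A normal distribution with known mean and standard deviation
      mu, sig = 0, 2
      a = NormalDistribution(mu, sig)

      # A kernel density built directly on observed points
      X = [0, 1, 1, 2, 1.5, 6, 7, 8, 7]
      a = GaussianKernelDensity(X)

- 12. Models can be learned from data:

      import numpy
      from pomegranate import NormalDistribution

      X = numpy.random.normal(0, 1, 100)
      a = NormalDistribution.from_samples(X)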

- 13. Overview: model stacking in pomegranate. [Figure: grid of sub-models against wrapper models, covering Distributions (D), Bayes Classifiers (BC), Markov Chains (MC), General Mixture Models (GMM), Hidden Markov Models (HMM), and Bayesian Networks (BN).]


- 17. pomegranate can be faster than numpy: fitting a Normal Distribution to 1,000 samples.

- 18. pomegranate can be faster than numpy: fitting a Multivariate Gaussian to 10,000,000 samples of 10 dimensions.

- 19. pomegranate uses BLAS internally.

- 20. pomegranate just merged GPU support.

- 21. pomegranate uses additive summarization. pomegranate reduces data to sufficient statistics for updates, and so only has to go through a dataset once (for all models). The slide shows the sufficient statistics of the Normal Distribution as an example.
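For reference, the weighted sufficient statistics of a normal distribution can be written in the standard textbook form below. This is not copied from the slide, and the symbols $w_i$, $S_0$, $S_1$, $S_2$ are notation assumed here.

```latex
\begin{aligned}
S_0 &= \sum_{i} w_i, \qquad S_1 = \sum_{i} w_i x_i, \qquad S_2 = \sum_{i} w_i x_i^2 \\
\hat{\mu} &= \frac{S_1}{S_0}, \qquad \hat{\sigma}^2 = \frac{S_2}{S_0} - \hat{\mu}^2
\end{aligned}
```

Each statistic is a plain sum over samples, so statistics computed on separate batches can be added together before the final parameter update.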

- 22. pomegranate supports out-of-core learning. Batches from a dataset can be reduced to additive summary statistics, enabling exact updates from data that can’t fit in memory.
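A minimal standard-library sketch of why batch summaries give exact updates, using a normal distribution. The function names `summarize` and `from_summaries` echo the method names listed on the API slide, but they are stand-alone helpers written for this example, not pomegranate's implementation; the batch size and data are arbitrary.

```python
import math
import random

def summarize(batch):
    """Reduce a batch to additive statistics: count, sum, sum of squares."""
    return (len(batch), sum(batch), sum(x * x for x in batch))

def add(s, t):
    """Combine two summaries by elementwise addition."""
    return tuple(a + b for a, b in zip(s, t))

def from_summaries(s):
    """Recover the maximum-likelihood mean and standard deviation."""
    n, s1, s2 = s
    mu = s1 / n
    var = max(s2 / n - mu * mu, 0.0)  # guard against tiny negative round-off
    return mu, math.sqrt(var)

rng = random.Random(0)
data = [rng.gauss(3.0, 1.5) for _ in range(10_000)]

# Stream the data in batches of 1,000, as if it did not fit in memory.
total = (0, 0.0, 0.0)
for start in range(0, len(data), 1000):
    total = add(total, summarize(data[start:start + 1000]))

mu_batched, sigma_batched = from_summaries(total)
mu_full, sigma_full = from_summaries(summarize(data))
print(mu_batched, sigma_batched)
```

The batched and single-pass estimates agree up to floating-point round-off. The same additivity lets separate workers summarize separate batches in parallel.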

- 23. Parallelization exploits additive summaries. [Figure: summaries are extracted from each batch, added together, and used to compute the new parameters.]

- 24. pomegranate supports semisupervised learning. Summary statistics from supervised models can be added to summary statistics from unsupervised models to train a single model on a mixture of labeled and unlabeled data.

- 25. pomegranate supports semisupervised learning. Supervised accuracy: 0.93. Semisupervised accuracy: 0.96.

- 26. pomegranate can be faster than scipy.

- 27. pomegranate uses aggressive caching.


- 30. Example ‘blast’ from Gossip Girl: "Spotted: Lonely Boy. Can't believe the love of his life has returned. If only she knew who he was. But everyone knows Serena. And everyone is talking. Wonder what Blair Waldorf thinks. Sure, they're BFF's, but we always thought Blair's boyfriend Nate had a thing for Serena."

- 31. How do we encode these ‘blasts’? "Better lock it down with Nate, B. Clock's ticking." Encoding: +1 Nate, -1 Blair.

- 32. How do we encode these ‘blasts’? "This just in: S and B committing a crime of fashion. Who doesn't love a five-finger discount. Especially if it's the middle one." Encoding: -1 Blair, -1 Serena.

- 33. Simple summations don’t work well.

- 34. Beta distributions can model uncertainty.
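One way to see why a beta distribution helps where a plain sum does not: two characters with the same net score can have very different amounts of evidence behind them. The sketch below assumes a uniform Beta(1, 1) prior and made-up counts; neither comes from the talk.

```python
def beta_summary(positive, negative, prior_a=1.0, prior_b=1.0):
    """Return (mean, variance) of Beta(prior_a + positive, prior_b + negative)."""
    a = prior_a + positive
    b = prior_b + negative
    mean = a / (a + b)
    variance = (a * b) / ((a + b) ** 2 * (a + b + 1))
    return mean, variance

# Two hypothetical characters whose +1/-1 mentions both sum to zero.
few = beta_summary(positive=1, negative=1)
many = beta_summary(positive=50, negative=50)
print(few, many)
```

Both means are 0.5, but the variance shrinks as mentions accumulate, which is the uncertainty a simple summation throws away.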


- 38. GMMs can model complex distributions.

- 39. GMMs can model complex distributions:

      model = GeneralMixtureModel.from_samples(NormalDistribution, 2, X)

- 40. GMMs can model complex distributions.

- 41. An exponential distribution is not right:

      model = ExponentialDistribution.from_samples(X)

- 42. A mixture of exponentials is better:

      model = GeneralMixtureModel.from_samples(ExponentialDistribution, 2, X)

- 43. Heterogeneous mixtures natively supported:

      model = GeneralMixtureModel.from_samples([ExponentialDistribution, UniformDistribution], 2, X)
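To make the idea of fitting a mixture concrete, here is a small expectation-maximization loop for a two-component exponential mixture, written with the standard library only. It is a generic textbook sketch, not how pomegranate implements `from_samples`; the starting values, iteration count, and synthetic data are all assumptions.

```python
import math
import random

def fit_exponential_mixture(data, n_iter=200):
    """Fit a two-component exponential mixture with EM.

    Returns (weights, rates, history), where history holds the
    log-likelihood evaluated at the start of each iteration.
    """
    mean = sum(data) / len(data)
    rates = [1.0 / (2.0 * mean), 2.0 / mean]  # crude, asymmetric start
    weights = [0.5, 0.5]
    history = []
    for _ in range(n_iter):
        # E-step: responsibility of each component for each point.
        resp = []
        log_likelihood = 0.0
        for x in data:
            dens = [w * r * math.exp(-r * x) for w, r in zip(weights, rates)]
            total = dens[0] + dens[1]
            log_likelihood += math.log(total)
            resp.append((dens[0] / total, dens[1] / total))
        history.append(log_likelihood)
        # M-step: weighted maximum-likelihood updates.
        for k in range(2):
            mass = sum(r[k] for r in resp)
            weights[k] = mass / len(data)
            rates[k] = mass / sum(r[k] * x for r, x in zip(resp, data))
    return weights, rates, history

# Synthetic data: half from a slow exponential, half from a fast one.
rng = random.Random(0)
data = [rng.expovariate(0.2) for _ in range(1000)]
data += [rng.expovariate(5.0) for _ in range(1000)]

weights, rates, history = fit_exponential_mixture(data)
print([round(w, 2) for w in weights], [round(r, 2) for r in rates])
```

A single exponential can only match the overall mean of this data, while the mixture recovers one slow and one fast component.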

- 44. GMMs are faster than sklearn.


- 46. CG enrichment detection HMM. Example sequence: GACTACGACTCGCGCTCGCACGTCGCTCGACATCATCGACA
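A two-state HMM for this task can be decoded with the Viterbi algorithm. The sketch below uses only the standard library rather than pomegranate, and every probability in it is an illustrative assumption, not a value from the talk.

```python
import math

SEQUENCE = "GACTACGACTCGCGCTCGCACGTCGCTCGACATCATCGACA"
STATES = ("background", "cg_island")

# All probabilities are assumed for illustration.
START = {"background": 0.5, "cg_island": 0.5}
TRANS = {
    "background": {"background": 0.9, "cg_island": 0.1},
    "cg_island": {"background": 0.1, "cg_island": 0.9},
}
EMIT = {
    "background": {"A": 0.25, "C": 0.25, "G": 0.25, "T": 0.25},
    "cg_island": {"A": 0.10, "C": 0.40, "G": 0.40, "T": 0.10},
}

def viterbi(sequence):
    """Return the most likely hidden-state path for `sequence`."""
    # score[s] is the best log probability of any path ending in state s.
    score = {s: math.log(START[s]) + math.log(EMIT[s][sequence[0]]) for s in STATES}
    backpointers = []
    for symbol in sequence[1:]:
        new_score, pointers = {}, {}
        for s in STATES:
            prev = max(STATES, key=lambda p: score[p] + math.log(TRANS[p][s]))
            pointers[s] = prev
            new_score[s] = (score[prev] + math.log(TRANS[prev][s])
                            + math.log(EMIT[s][symbol]))
        score = new_score
        backpointers.append(pointers)
    # Trace back from the best final state.
    state = max(STATES, key=lambda s: score[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]

path = viterbi(SEQUENCE)
print(SEQUENCE)
print("".join("I" if s == "cg_island" else "-" for s in path))
```

The second printed line marks each position as CG-enriched (`I`) or background (`-`); changing the assumed transition probabilities changes how readily the path switches between the two.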


- 48. pomegranate HMMs are feature rich.

- 49. GMM-HMM easy to define.

- 50. HMMs are faster than hmmlearn.


- 52. Bayesian networks are powerful inference tools which define a dependency structure between variables. [Figure: network over Sprinkler, Rain, and Wet Grass.]

- 53. Bayesian networks: two main difficult tasks: (1) inference given incomplete information, and (2) learning the dependency structure from data.

- 54. Bayesian network inference. Inference is done using belief propagation on a factor graph. Messages are sent from one side to the other until convergence. [Figure: factor graph connecting the marginals Rain, Sprinkler, and Grass Wet to their factors, including the joint R/S/GW factor.]
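For a network this small, the posterior can be checked by brute-force enumeration, which is the simplest exact alternative to belief propagation (it is not the algorithm the slide describes). The sketch assumes the common textbook structure in which Rain influences both Sprinkler and Wet Grass, and the probability tables are textbook-style values chosen for illustration.

```python
from itertools import product

# Conditional probability tables (assumed values).
P_RAIN = {True: 0.2, False: 0.8}
P_SPRINKLER = {True: {True: 0.01, False: 0.99},   # P(sprinkler | rain)
               False: {True: 0.4, False: 0.6}}
P_WET = {(True, True): 0.99, (True, False): 0.9,  # keyed by (sprinkler, rain)
         (False, True): 0.8, (False, False): 0.0}

def joint(rain, sprinkler, wet):
    """Joint probability of one full assignment of the three variables."""
    p_wet = P_WET[(sprinkler, rain)]
    return (P_RAIN[rain] * P_SPRINKLER[rain][sprinkler]
            * (p_wet if wet else 1.0 - p_wet))

def posterior_rain(wet):
    """P(rain = True | wet grass = wet), summing out the sprinkler."""
    numerator = sum(joint(True, s, wet) for s in (True, False))
    evidence = sum(joint(r, s, wet) for r, s in product((True, False), repeat=2))
    return numerator / evidence

print(round(posterior_rain(True), 4))
```

Enumeration scales exponentially with the number of variables, which is why larger networks rely on message passing instead.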

- 55. Inference in a medical diagnostic network. [Figure: network with node labels BRCA 2 BRCA 1 LCT BLOATLE LOA VOM AC PREGLIOC.]


- 57. Bayesian network structure learning. Three primary ways:
  ● “Search and score” / Exact
  ● “Constraint Learning” / PC
  ● Heuristics

- 58. Bayesian network structure learning. pomegranate supports:
  ● “Search and score” / Exact
  ● “Constraint Learning” / PC
  ● Heuristics

- 59. Exact structure learning is intractable.

- 60. Chow-Liu trees are fast approximations.
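The Chow-Liu procedure is short enough to sketch: score every pair of variables by empirical mutual information, then keep a maximum spanning tree. The code below is a generic standard-library version with Kruskal's algorithm, not pomegranate's implementation, and the three-variable chain it is tested on is synthetic.

```python
import math
import random
from collections import Counter
from itertools import combinations

def mutual_information(rows, i, j):
    """Empirical mutual information (in nats) between columns i and j."""
    n = len(rows)
    pij = Counter((r[i], r[j]) for r in rows)
    pi = Counter(r[i] for r in rows)
    pj = Counter(r[j] for r in rows)
    return sum((c / n) * math.log(c * n / (pi[a] * pj[b]))
               for (a, b), c in pij.items())

def chow_liu_edges(rows):
    """Return the edges of a maximum spanning tree over pairwise MI."""
    d = len(rows[0])
    scored = sorted(((mutual_information(rows, i, j), i, j)
                     for i, j in combinations(range(d), 2)), reverse=True)
    parent = list(range(d))  # union-find forest for Kruskal's algorithm

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    edges = []
    for _, i, j in scored:
        ri, rj = find(i), find(j)
        if ri != rj:  # this edge does not create a cycle
            parent[ri] = rj
            edges.append((i, j))
    return edges

# Synthetic chain x0 -> x1 -> x2: each variable copies its parent 90% of the time.
rng = random.Random(0)
rows = []
for _ in range(2000):
    x0 = rng.random() < 0.5
    x1 = x0 if rng.random() < 0.9 else not x0
    x2 = x1 if rng.random() < 0.9 else not x1
    rows.append((x0, x1, x2))

print(sorted(chow_liu_edges(rows)))
```

The result is an undirected tree; choosing any root and directing edges away from it yields a Bayesian network. The cost is quadratic in the number of variables, which is what makes it a fast approximation.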

- 61. pomegranate supports four algorithms.

- 62. Constraint graphs merge data + knowledge. [Figure: the medical diagnostic network (node labels BRCA 2 BRCA 1 LCT BLOATLE LOA VOM AC PREGLIOC) grouped into genetic conditions, conditions, and symptoms.]

- 63. Constraint graphs merge data + knowledge. [Figure: constraint graph over genetic conditions, diseases, and symptoms.]

- 64. Modeling the global stock market.

- 65. Constraint graph published in PeerJ CS.


- 67. Bayes classifiers rely on Bayes’ rule.

- 68. Let’s build a classifier on this data.

- 69. Likelihood function alone is not good.

- 70. Priors can model class imbalance.

- 71. The posterior is a good model of the data.
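The progression from likelihood to prior to posterior can be reproduced in a few lines. This sketch uses the standard library's `NormalDist` and a made-up two-class problem with a 9:1 class imbalance; none of the numbers come from the talk's dataset.

```python
from statistics import NormalDist

# Hypothetical classes: "a" is nine times more common than "b".
likelihoods = {"a": NormalDist(0.0, 1.0), "b": NormalDist(2.0, 1.0)}
priors = {"a": 0.9, "b": 0.1}

def posterior(x):
    """Bayes' rule: P(class | x) is proportional to P(x | class) * P(class)."""
    unnormalized = {c: likelihoods[c].pdf(x) * priors[c] for c in likelihoods}
    total = sum(unnormalized.values())
    return {c: p / total for c, p in unnormalized.items()}

x = 1.2
by_likelihood = max(likelihoods, key=lambda c: likelihoods[c].pdf(x))
post = posterior(x)
by_posterior = max(post, key=post.get)
print(by_likelihood, by_posterior, round(post["a"], 3))
```

At this point the likelihood alone favors the rare class, while the posterior favors the common one once the prior is taken into account.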

- 72. Naive Bayes assumes independent features.

- 73. Naive Bayes produces ellipsoid boundaries:

      model = NaiveBayes.from_samples(NormalDistribution, X, y)

- 74. Naive Bayes can be heterogeneous.

- 75. Data can fall under different distributions.

- 76. Using appropriate distributions is better:

      from sklearn.naive_bayes import GaussianNB
      from pomegranate import (NaiveBayes, NormalDistribution,
                               LogNormalDistribution, ExponentialDistribution)

      # Same Gaussian model in pomegranate and in scikit-learn
      model = NaiveBayes.from_samples(NormalDistribution, X_train, y_train)
      print("Gaussian Naive Bayes: ", (model.predict(X_test) == y_test).mean())

      clf = GaussianNB().fit(X_train, y_train)
      print("sklearn Gaussian Naive Bayes: ", (clf.predict(X_test) == y_test).mean())

      # One distribution per feature
      model = NaiveBayes.from_samples([NormalDistribution, LogNormalDistribution, ExponentialDistribution], X_train, y_train)
      print("Heterogeneous Naive Bayes: ", (model.predict(X_test) == y_test).mean())

  Output reported on the slide:

      Gaussian Naive Bayes: 0.798
      sklearn Gaussian Naive Bayes: 0.798
      Heterogeneous Naive Bayes: 0.844

- 77. This additional flexibility is just as fast.

- 78. Bayes classifiers don’t require independence. Naive accuracy: 0.929. Bayes classifier accuracy: 0.966.

- 79. Gaussian mixture model Bayes classifier.

- 80. Creating complex Bayes classifiers is easy:

      gmm_a = GeneralMixtureModel.from_samples(MultivariateGaussianDistribution, 2, X[y == 0])
      gmm_b = GeneralMixtureModel.from_samples(MultivariateGaussianDistribution, 2, X[y == 1])
      model_b = BayesClassifier([gmm_a, gmm_b], weights=numpy.array([1-y.mean(), y.mean()]))

- 81. Creating complex Bayes classifiers is easy:

      mc_a = MarkovChain.from_samples(X[y == 0])
      mc_b = MarkovChain.from_samples(X[y == 1])
      model_b = BayesClassifier([mc_a, mc_b], weights=numpy.array([1-y.mean(), y.mean()]))

      hmm_a = HiddenMarkovModel.from_samples(X[y == 0])
      hmm_b = HiddenMarkovModel.from_samples(X[y == 1])
      model_b = BayesClassifier([hmm_a, hmm_b], weights=numpy.array([1-y.mean(), y.mean()]))

      bn_a = BayesianNetwork.from_samples(X[y == 0])
      bn_b = BayesianNetwork.from_samples(X[y == 1])
      model_b = BayesClassifier([bn_a, bn_b], weights=numpy.array([1-y.mean(), y.mean()]))


- 83. Training a mixture of HMMs in parallel. Creating a mixture of HMMs is just as simple as passing the HMMs into a GMM as if they were any other distribution.

- 84. Training a mixture of HMMs in parallel:

      fit(model, X, n_jobs=n)

- 85. Documentation available at Readthedocs.

- 86. Tutorials available on GitHub: https://github.com/jmschrei/pomegranate/tree/master/tutorials
