Richa_Profile

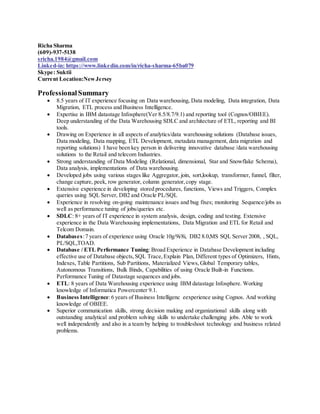

- 1. Richa Sharma
  Phone: (609)-937-5138
  Email: sricha.1984@gmail.com
  LinkedIn: https://www.linkedin.com/in/richa-sharma-65ba079
  Skype: Suktii
  Current Location: New Jersey

  Professional Summary

  - 8.5 years of IT experience focusing on data warehousing, data modeling, data integration, data migration, ETL processes and business intelligence.
  - Expertise in IBM InfoSphere DataStage (versions 8.5/8.7/9.1) and reporting tools (Cognos/OBIEE).
  - Deep understanding of the data warehousing SDLC and the architecture of ETL, reporting and BI tools.
  - Key person in delivering innovative database and data warehousing solutions to the retail and telecom industries, drawing on experience in all aspects of analytics and data warehousing solutions (database issues, data modeling, data mapping, ETL development, metadata management, data migration and reporting solutions).
  - Strong understanding of data modeling (relational, dimensional, star and snowflake schema), data analysis and data warehousing implementations.
  - Developed jobs using various stages such as Aggregator, Join, Sort, Lookup, Transformer, Funnel, Filter, Change Capture, Peek, Row Generator, Column Generator and Copy.
  - Extensive experience in developing stored procedures, functions, views, triggers and complex queries using SQL Server, DB2 and Oracle PL/SQL.
  - Experience in resolving ongoing maintenance issues and bug fixes, monitoring sequences and jobs, and performance tuning of jobs and queries.
  - SDLC: 8+ years of IT experience in system analysis, design, coding and testing. Extensive experience in data warehousing implementations, data migration and ETL for the retail and telecom domains.
  - Databases: 7 years of experience using Oracle 10g/9i/8i, DB2 8.0, MS SQL Server 2008, SQL, PL/SQL and TOAD.
  - Database / ETL Performance Tuning: Broad experience in database development, including effective use of database objects, SQL Trace, Explain Plan, different types of optimizers, hints, indexes, table partitions, sub-partitions, materialized views, global temporary tables, autonomous transactions, bulk binds and Oracle built-in functions. Performance tuning of DataStage sequences and jobs.
  - ETL: 8 years of data warehousing experience using IBM InfoSphere DataStage. Working knowledge of Informatica PowerCenter 9.1.
  - Business Intelligence: 6 years of business intelligence experience using Cognos, and working knowledge of OBIEE.
  - Superior communication skills, strong decision-making and organizational skills, and outstanding analytical and problem-solving skills for undertaking challenging jobs.
  - Able to work well both independently and in a team, helping to troubleshoot technology and business-related problems.

- 2. Skill Set

  - ETL Tools: IBM (formerly Ascential) DataStage V7.5.1, IBM DataStage V8 (8.5/8.7), IBM DataStage V9.1, Informatica 9.1
  - Reporting Tools: Cognos Impromptu V7.2, Cognos 8.3 (Report Studio, Query Studio, Framework Manager), OBIEE (worked with the reporting team as liaison from ETL)
  - Database: RDBMS (Oracle 9i), DB2, SQL Server, PL/SQL
  - Operating System: UNIX

  Education

  MCA (Master's Degree in Computer Applications) from Banasthali Vidyapith, Jaipur, India, in 2007.

  Experience Summary

  - Working as a DataStage Consultant
    - Company: K-Force
    - Client: UPS
    - Duration: August 2013 to date
    - Location: New Jersey, USA
  - Worked as an ETL lead and technology analyst
    - Company: Infosys Ltd.
    - Client: Dow Jones
    - Duration: September 2011 to August 2013
    - Location: New Jersey, USA
  - Worked as an ETL developer
    - Company: Infosys Ltd.
    - Client: Telenet
    - Duration: October 2007 to August 2011
    - Location: Pune, India

  Professional Experience

  Project #1
  - Name: Mainframe to DataStage Migration, Production Support, AR roadmap
  - Client: UPS (September 2013 to date)
  - Team Size: 8
  - Environment: DataStage V8, Oracle, DB2, Linux, PL/SQL

- 3. Description: United Parcel Service of North America, Inc., typically referred to as UPS, is an American global package delivery company. It delivers more than 15 million packages a day to more than 6.1 million customers in more than 220 countries and territories around the world. UPS has a complex costing process that categorizes data into domestic and international and costs each separately on the basis of territory, service, shipper and so on. The objective of this migration project is to migrate the domestic costing logic from the mainframe to the DataStage environment, which creates the files that act as a feed for the Calculator to do the costing. The work involves understanding the existing mainframe logic, converting it into DataStage objects, comparing the results of both systems until they match within the accepted limit, debugging, and release support.

  Responsibilities as ETL Consultant

  - Involved in all phases of the SDLC, i.e. planning, designing, coding, testing, implementation and support.
  - Understanding the mainframe logic of the costing process and streamlining the DataStage code to that process.
  - Use of different DataStage extraction stages, i.e. Sequential File, Data Set, Oracle Enterprise and DB2 Connector.
  - Use of different DataStage transformation stages such as Transformer, Funnel, Filter, Sort, Remove Duplicates, Aggregator, Change Capture, Copy, Checksum, Join, Lookup, Merge and Pivot.
  - Use of different loading stages such as Sequential File, Data Set and Peek.
  - Use of DataStage Director to schedule, monitor, trigger, stop, reset and validate different jobs and sequences.
  - Use of different partitioning techniques to improve job performance.
  - Consultation and coordination in implementing DataStage jobs.
  - Use of shell commands to navigate directories, validate data in files and run jobs.
  - Acting as a DataStage SME for all DataStage-related queries and bringing mainframe resources who have moved to DataStage up to speed.
  - Working on alternate, efficient design approaches for the conversion while keeping the core mainframe design intact.
  - Performing parallel runs and comparing the legacy and new results to ensure they match.
  - Mentoring a team of 8 as the DataStage SME.
  - End-to-end implementation of DataStage code.

  Project #2
  - Name: Mainframe Migration (MFM), Account Merge
  - Client: Dow Jones (September 2011 to August 2013)
  - Team Size: 14 (onsite-offshore model)
  - Environment: DataStage V8, DataStage V9, Cognos 8.3, DB2, UNIX, PL/SQL

  Description: Dow Jones is a worldwide leader in news and business information, spanning newswires, websites, newspapers, newsletters, databases, magazines, radio and television. The objective of this project is to migrate print customers from the legacy mainframe system to the new framework (MOSAIC) and to give Dow Jones users a single view for accessing all accounts. These projects provide different reports and support ad hoc queries for making intelligent business decisions based on data available in various tables and views collected over a period of time. The project activities ranged from data extraction and staging to loading into the data warehouse through DataStage.

- 4. Responsibilities as ETL Lead

  - Involved in all stages of the system development life cycle.
  - Worked closely with business analysts and end users to determine data sources, targets and business rules for implementing ETL code and Cognos reports.
  - Prepared technical specifications and the Detail Design Document (DDD) for developing DataStage extraction, transformation and loading (ETL) mappings to load data into various tables, and defined ETL standards.
  - Used DataStage Designer to develop processes for extracting, cleansing, transforming and loading data into the DB2 data warehouse database.
  - Coded new programs per client specifications, modified existing programs and reviewed coded programs to ensure they meet requirements and standards.
  - Used DataStage Designer for importing metadata into the repository and for importing and exporting jobs across projects.
  - Used DataStage Director for validating, executing and monitoring jobs and checking the log files for errors.
  - Used different DataStage Designer stages such as Sequential/Hash File, Transformer, Merge, Oracle Enterprise/Connector, Sort, Lookup, Join, Funnel, Filter, Copy and Aggregator.
  - Developed several jobs to improve performance by reducing runtime using different partitioning techniques.
  - Populated Type I and Type II slowly changing dimension tables from several operational source files.
  - Performed unit testing of all monitoring jobs manually and monitored the data to verify that it matched.
  - Used several Sequencer stages, such as Abort Job, Wait for Job and Mail Notification, to build an overall main Sequencer and to achieve restartability.
  - Created views, MQTs and other DB2 objects depending on application group requirements.
  - Involved in altering object definitions in DB2.
  - Worked with the Toad tool to access the database.
  - Involved in basic shell scripting.
  - Coordinated with offshore as the onsite coordinator.
  Project #3
  - Name: Various DWH applications, Production Support
  - Client: Telenet (October 2007 to August 2011)
  - Team Size: 10
  - Environment: DataStage V8, Cognos 8.3, Oracle, SQL Server, UNIX

  Description: Telenet Group is the largest provider of broadband cable services in Belgium. Its business comprises the provision of analog and digital cable television, high-speed internet, and fixed and mobile telephony services, primarily to residential customers in Flanders and Brussels. In addition, Telenet offers services to business customers all across Belgium and in Luxembourg under its brand Telenet Solutions. The project involved building and maintaining the data warehouse application for various Telenet products and customers so that higher management can make strategic decisions. This includes ETL creation, report building, writing UNIX scripts and writing procedures in SQL.

- 5. Responsibilities as ETL Developer

  - Used DataStage Designer to develop processes for extracting, cleansing, transforming and loading data into the Oracle data warehouse database.
  - Prepared technical specifications and the Detail Design Document (DDD) for developing DataStage extraction, transformation and loading (ETL) mappings to load data into various tables, and defined ETL standards.
  - Migrated data and jobs from DataStage v7.5 to the latest version at the time, DataStage v8.7.
  - Coded new programs per client specifications, modified existing programs and reviewed coded programs to ensure they meet requirements and standards.
  - Used DataStage Designer for importing metadata into the repository and for importing and exporting jobs across projects.
  - Used DataStage Director for validating, executing and monitoring jobs and checking the log files for errors.
  - Used different DataStage Designer stages such as Sequential/Hash File, Transformer, Merge, Oracle Enterprise/Connector, Sort, Lookup, Join, Funnel, Filter, Copy and Aggregator.
  - Developed several jobs to improve performance by reducing runtime using different partitioning techniques.
  - Populated Type I and Type II slowly changing dimension tables from several operational source files.
  - Developed complex ETL using dimensional modeling.
  - Performed unit testing of all jobs manually and monitored the data to verify that it matched.
  - Used several Sequencer stages, such as Abort Job, Wait for Job and Mail Notification, to build an overall main Sequencer and to achieve restartability.
  - Coordinated with onsite team members, performed production deployments, monitored production jobs and fixed production aborts based on SLA.

  Certifications

  Domain:
  1. OSS-BSS (Telecommunication Sector Fundamental Concepts)
  2. Infosys Quality Systems

  Technical:
  1. Oracle OCA (Paper 1: Introduction to Oracle 9i SQL, 1Z0-007)
  2. Oracle OCA (Paper 2: Program with PL/SQL, 1Z0-147)
  3. Cognos 8: BI0-112 Cognos developer certification
  4. Big Data and Hadoop certification from Edureka
  5. Informatica PowerCenter 9.1 Developer certification from Edureka