An In-Depth Explanation of Recurrent Neural Networks (RNNs) - InsideAIML

- 1. An In-Depth Explanation of Recurrent Neural Networks (RNNs) In my previous article’s, we saw what are neural networks, Artificial neural network (ANN) and Convolutional Neural Network (CNN) and how they actually, work. In this tutorial, we will try to learn about one of another type of neural networks architecture known as Recurrent Neural Networks or commonly called as RNNs. Let me take an example, I gave a sentence – “learning amazing Deep is”, did this make any sense to you? I think not really – But read this sentence – “Deep learning is amazing”. I think this made perfect sense to you! Did you notice? If we little jumble the words in a given sentence, the sentence becomes incoherent to us. Now the question arises can we expect a neural network to make sense out of it? Not really! If the human brain was confused about what it meant I am sure a neural network is going to have a tough time to understand such sentences. Do you ever imagine when you start typing an email, Gmail suddenly predict next word for you sentence, while typing a WhatsApp message if you typed wrong word, and suddenly it gets autocorrected? Yes, you are thinking correct! Recurrent Neural Network and others deep learning techniques are involved behind it.

- 2. There are different tasks present in our everyday life which gets completely disrupted when their sequence is disturbed. For example, language as we saw earlier- it's a sequence of words defines their meaning, a time series data – where time defines the occurrence of events and the data of a genome sequence- where every sequence of data has a different meaning. There are many such scenarios wherein the sequence of information determines the event itself. Now, let's consider, If we are trying to use such data for any reasonable output, we need a network which has access to some prior knowledge about the data to completely understand it. So there comes into the picture a special type of network-Recurrent neural networks. So, your next question is what is Recurrent Neural Network? Let’s imagine, I want to make breakfast today. Suppose I had a burger yesterday so maybe I should consider a bread omelette today. Essentially, what we will have a network that is similar to the feed forward neural networks. What you have learnt before. But with the addition of “memory”. You may have noticed that in the application of feed forward neural networks applications. You have seen before only the current input matters. For example, in a problem of classifying an image of a dog. If this is a image of a dog or not? But perhaps this is not a static dog maybe when the picture was shot, the dog was moving. From a single image it may be difficult to determine, if its indeed walking or may be its running. Maybe it’s a very energetic and talented dog that is standing in a funny pose with two his two legs up in the air. When we try to observe the dog frame by frame, we remember what we saw before. So, we know if the Dog is still or its walking. We can also distinguish it from walking to running. But Can a machine do the same? This is where Recurrent neural networks or RNNs comes into picture. Recurrent neural networks are neural networks, well, artificial neural networks that can capture temporal dependencies which are dependencies over time.

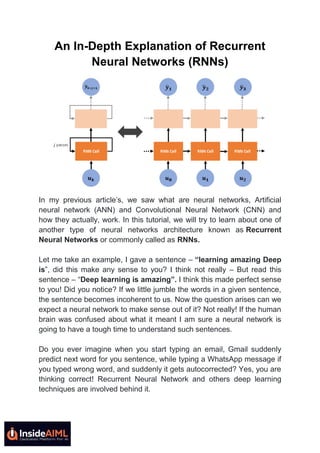

- 3. Now let’s look at the definition of the word Recurrent in Recurrent Neural Networks. We can find its simply means occurring often or repeatedly. So why are these networks called as Recurrent Neural networks, its simply because in Recurrent neural networks we perform the task for each element in the input sequence. Let me take an example and show how it’s actually works. Let’s say we have a task to predict the next word in a sentence. Let’s try accomplishing it using a Multi-layer perceptron (MLP). So, what happens in an MLP? In the simplest way, we may say that we have an input layer, a hidden layer and an output layer into our neural network. The input layer receives the input, the hidden layer activations functions are applied and then we finally receive the output. Let’s have a deeper network, where multiple hidden layers are present. Here, the input layer receives the input, on the first hidden layer activations functions are applied and then these activations functions are sent to the next hidden layer, and successive activation functions are applied on the layers to produce the output. Each hidden layer has its own weights and biases respectively. Now, as each hidden layer has its own weights and activation functions, they behave independently. Now the goal is to find the relationship between successive inputs.

- 4. Now, you might be thinking Can we supply the inputs to hidden layers? And the answer is: Yes, we can! Here, these hidden layers have different weights and bias. That's why each of these layers behaves independently and cannot be combined together. So now to combine these hidden layers together, we shall have the same weights and bias for these hidden layers.

- 5. We can now combine these layers together, that the weights and bias of all the hidden layers is the same. All these hidden layers can be combined together in a single recurrent layer. So, it’s like supplying the input to the hidden layer. We can say that at all the time steps weights of the recurrent neuron would be the same since it’s a single neuron now. So, a recurrent neuron stores the state of a previous input and combines with the current input thereby preserving some relationship of the current input with the previous input. Now, the next questions arise what is a Recurrent Neuron? Let’s consider a simple task at first. Let’s take a character level RNN where we have a word “Please”. So, we provide the first 5 letters i.e. P, l, e, a, s and ask the network to predict the last letter i.e. “e”. So here the vocabulary of the task is just 5 letters {P, l, e, a, s}. But in real case scenarios involving natural language processing tasks, the vocabularies include the words in the entire Wikipedia database or all the words in a language. Note: for simplicity, we have taken a very small set of vocabulary for our problem.

- 6. Let’s try to understand, how the network is used to predict the sixth letter in the word “Please”. In the figure, we can see that the middle block is the RNN block, applies something called as a recurrence formula to the input vector and also its previous state. In this case, the letter “P” has nothing preceding it, let’s take the letter “l”. So, at the time the letter “l” is supplied to the network, a recurrence formula is applied to the letter “l” and the previous state which is the letter “P”. These are called as various time steps of the given input. So, if at time t, the input is “l”, at time t-1, the input was “P”. The recurrence formula is applied to “l” and “P” both and we receive a new state. We can give the formula for the current state as shown – We can see from the formula, Ht represents the new state, ht- 1 represents the previous state and xt represents the current input. We now have a state of the previous input instead of the input itself, because the input neuron would have applied the transformations on our previous input. So, each successive input is called as a time step. In this case we have five inputs to be given to the network, during a recurrence formula, the same function and the same weights are applied to the network at each time step.

- 7. Considering the simplest form of a recurrent neural network, let’s consider that the activation function is tanh, the weight at the recurrent neuron is Whh and the weight at the input neuron is Wxh. So, the equation for the state at time t can be given as – The Recurrent neuron in this case is just taking the immediate previous state into consideration. For longer sequences the equation can involve multiple such states. Once the final state is calculated we can go on to produce the output. Now, once the current state is calculated we can calculate the output state as- That how the Recurrent Neural networks works, in our example the word “e” is predicted because its depends on the previous word occurrence. So with the help of this previous word dependency we are able to predict the next word. This is very simple example which I have taken here. But RNN is having a very huge application in many real world problems. As of now you understood how Recurrent neural networks are and how its works. Let me summarize it for you. 1. A single time step of the input is supplied to the network i.e. xt is supplied to the network 2. We then calculate its current state using a combination of the current input and the previous state i.e. we calculate ht 3. The current ht becomes ht-1 for the next time step 4. We can go as many time steps as the problem demands and combine the information from all the previous states 5. Once all the time steps are completed the final current state is used to calculate the output yt. 6. The output is then compared to the actual output and the error is generated

- 8. 7. The error is then back propagated to the network to update the weights (we shall go into the details of back propagation in further sections) and the network is trained Problems with a standard Recurrent Neural network Unfortunately, Standard RNN are comfortable with to solve some small problem as mentioned above but as the problem gets more complicated such as predicting stock prices, predicting rainfall etc. and if you implement the Standard RNN, you won’t be so delighted with the results. That is because the simplest RNN model has a major drawback, called vanishing gradient problem, which prevents it from being accurate. So, what is vanishing gradient problem? As you may recall, while training our network we use backpropagation. In the backpropagation process, we adjust our weight matrices with the use of a gradient. In the process, gradients are calculated by continuous multiplications of derivatives. The value of these derivatives may be so small, that these continuous multiplications may cause the gradient to practically "vanish". In a nutshell, the problem comes from the fact that at each time step during training we are using the same weights to calculate the output. That multiplication is also done during back-propagation. The further we move backwards, the bigger or smaller our error signal becomes. This means that the network experiences difficulty in memorizing words from far away in the sequence and makes predictions based on only the most recent ones. That is why more powerful models like LSTM and GRU come in the picture. Solving the above issue, they have become the accepted way of

- 9. implementing recurrent neural networks to solve more complex problems. I hope after reading this article, finally, you came to know about what is Recurrent Neural Networks is and how it actually works? In the next articles, I will come with a detailed explanation of some other topics like LSTM, GRU etc. For more blogs/courses on data science, machine learning, artificial intelligence and new technologies do visit us at InsideAIML. Thanks for reading…