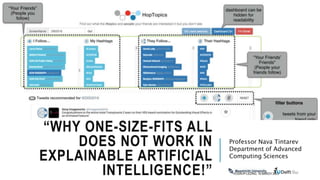

Why one-size-fits all does not work in Explainable Artificial Intelligence!

- 1. “WHY ONE-SIZE-FITS ALL DOES NOT WORK IN EXPLAINABLE ARTIFICIAL INTELLIGENCE!” Professor Nava Tintarev Department of Advanced Computing Sciences VOGIN-IP-LEZING, 16 MARCH 2023

- 2. MOTIVATION Computer User VOGIN-IP-LEZING, 16 MARCH 2023

- 3. MOTIVATION Interpretability is the degree to which a human can understand the cause of a decision (Miller 2017) the degree to which a human can consistently predict the model’s result (Kim et al 2016) To which extent the model and/or the prediction are human-understandable (Guidotti et al 2018, Amparore et al 2020) VOGIN-IP-LEZING, 16 MARCH 2023

- 4. MOTIVATION More human (non-expert) and system collaborations. Potential in leveraging joint strengths. We might not accept advice from systems We do not know which information has been used, or if our opinion is considered… Many barriers are not technical, but cognitive. Explanations can help us make better decisions together with systems. (Tintarev, 2016) Why? VOGIN-IP-LEZING, 16 MARCH 2023

- 5. INTERFACE MATTERS (EXAMPLE 1) VOGIN-IP-LEZING, 16 MARCH 2023 Best variation is 66% more effective than the worst one (stat. sign.; p<0.05). Measured clickthrough rate Title+thumbnail+abstr act did best (vii) Beel & Dixon UMAP’21

- 6. Mats Mulder, Oana Inel, Nava Tintarev, Jasper Oosterman, FAccT’21 INTERFACE MATTERS (EXAMPLE 2) Diversification of viewpoint on a Dutch news aggregator (Mulder et al, FACCT’21) Mats Mulder MSc Oana Inel Postdoc Jasper Oosterman Blendle

- 7. INTERFACE MATTERS! Click-through rate for “diverse viewpoint” condition depended on: Thumbnail 3.1% more Number of hearts VOGIN-IP-LEZING, 16 MARCH 2023

- 8. CONFESSION: I DON’T WORK IN ALL OF AI! VOGIN-IP-LEZING, 16 MARCH 2023

- 9. OVERVIEW • VOGIN-IP-LEZING, 16 MARCH 2023 •Explanations can serve different purposes Different people need different explanations Different situations call for different explanations There is a benefit in interactive explanations (especially for complex domains)

- 10. EXPLANATIONS CAN SERVE DIFFERENT PURPOSES VOGIN-IP-LEZING, 16 MARCH 2023

- 11. Purpose Description Transparency Explain how the system works Effectiveness Help users make good decisions Trust Increase users' confidence in the system Persuasiveness Convince users to try or buy Satisfaction Increase the ease of use or enjoyment Scrutability Allow users to tell the system it is wrong Efficiency Help users make decisions faster Tintarev and Masthoff, 2007 (more recent Tintarev and Masthoff 2022) WHY EXPLAIN (RECSYS) Trade-offs? How was this recommendation made? Why (not) this item? Why must you buy this item?

- 12. SATISFYING, BUT NOT EFFECTIVE Benefit of personalized explanations? Personalized explanations worse for decisions but people were more satisfied! [Tintarev & Masthoff, 2012] What you measure matters! Non-personalized: “This movie belongs to the genre(s): Action & Adventure and Comedy. On average other users rated this movie 4/5.0” Personalized: “Unfortunately, this movie belongs to at least one genre you do not want to see: Action & Adventure. It also belongs to the genre(s): Comedy. This movie stars Jo Marr and Robert Redford.” Baseline: “This movie is not one of the top 250 movies in the Internet Movie Database (IMDB). ” VOGIN-IP-LEZING, 16 MARCH 2023

- 13. • VOGIN-IP-LEZING, 16 MARCH 2023 •Explanations can serve different purposes Different people need different explanations Different situations call for different explanations There is a benefit in interactive explanations (especially for complex domains)

- 14. DIFFERENT PEOPLE NEED DIFFERENT EXPLANATIONS VOGIN-IP-LEZING, 16 MARCH 2023

- 15. INTELLIGENT USER INTERFACES User interface – what the system shows of its internal state to a user, and allows the user to modify it to something resembling their internal model. NB: Includes chatbots! Intelligent – Containing some measure of reasoning (human or artificial). Can be classification, recommendation, natural language processing, planning etc In particular – adaptive systems which change their behavior in response to their environment, or interactive systems, which change their behavior to user interaction specifically. VOGIN-IP-LEZING, 16 MARCH 2023

- 16. ADAPTIVE INTERFACES (TINTAREV, MASTHOFF, 2016) Modality E.g., text versus graphics Layout E.g., horizontal timeline Aggregation E.g., level of detail Emphasis E.g. color or bold (see image) Degree of Interactivity Later in the talk VOGIN-IP-LEZING, 16 MARCH 2023

- 17. PERSONALITY Personality traits Personality ---``a person's nature or disposition; the qualities that give one's character individuality’’ Common model: OCEAN/FFM - Five Factor Model: Openness to Experience (O), Conscientiousness (C), Extroversion (E). Agreeableness (A), and Neuroticism (N) Locus of Control The extent to which a person believes they can control events that affect them Internal LoC believe they can control their own fate, external LoC believes that their fate is determined by external forces Resilience ``the ability to bounce back from stress'' VOGIN-IP-LEZING, 16 MARCH 2023

- 18. EXPERTISE (1) • Domain-specific or technology specific Example 1: 4th year student versus 1st year student VOGIN-IP-LEZING, 16 MARCH 2023

- 19. EXPERTISE (2) • Example 2: Goldsmith’s Music sophistication Index: Active Musical Engagement, e.g. how much time and money resources spent on music Self-reported Perceptual Abilities, e.g. accuracy of musical listening skills Musical Training, e.g. amount of formal musical training received Self-reported Singing Abilities, e.g. accuracy of one’s own singing Sophisticated Emotional Engagement with Music, e.g. ability to talk about emotions that music expresses VOGIN-IP-LEZING, 16 MARCH 2023

- 20. COGNITIVE TRAITS • Perceptual speed - quickly and accurately compare letters, numbers, objects, pictures, or patterns • Verbal working memory – temporary memory for words and verbal items • Visual working memory – temporary memory for visual items VOGIN-IP-LEZING, 16 MARCH 2023

- 21. PERSONAL CHARACTERISTICS EXAMPLE 1 YUCHENG JIN ET AL, INTRS WORKSHOP@RECSYS’17 • Working memory did not influence cognitive load. • Musical sophistication affected both acceptance and cognitive load. VOGIN-IP-LEZING, 16 MARCH 2023 Yucheng Jin Katrien Verbert

- 22. PERSONAL CHARACTERISTICS EXAMPLE 2 YUCHENG ET AL, UMAP’2018 Perceptions of diversity differed between two visualizations for people with high musical sophistication. They also differed for people with high visual working memory. Individual differences matter! VOGIN-IP-LEZING, 16 MARCH 2023

- 23. • VOGIN-IP-LEZING, 16 MARCH 2023 •Explanations can serve different purposes Different people need different explanations Different situations call for different explanations There is a benefit in interactive explanations (especially for complex domains)

- 24. DIFFERENT SITUATIONS CALL FOR DIFFERENT EXPLANATIONS

- 25. EXPLANATION INTERFACES FOR BIAS MITIGATION? VOGIN-IP-LEZING, 16 MARCH 2023

- 26. ADAPTING TO CONTEXT: VIEWPOINT DIVERSITY • Search results are biased, no variety on viewpoint (different from topic or source). • Humans are highly influenced by search result rankings (SEME). • During online information search, users tend to select search results that confirm pre-existing beliefs or values and ignore competing possibilities (Confirmation bias) • Potential harmful effects on individuals, businesses, and society. • e.g., by affecting individual health choices, important public debates, or election outcomes • Recommendations need to be explained if we care about user perceptions! VOGIN-IP-LEZING, 16 MARCH 2023

- 27. EXPLANATION INTERFACES FOR BIAS MITIGATION? Rieger et al. ACM HyperText’2021 🏆 Tintarev et al. ACM Symposium On Applied Computing, 2018 Sullivan et al. Collaboration with FD Media @ICTwIndustry, ExUm Workshop, UMAP, 2019 VOGIN-IP-LEZING, 16 MARCH 2023

- 28. INTERACTIONS IN SEARCH INTERFACE VOGIN-IP-LEZING, 16 MARCH 2023

- 29. TAKE HOME PREVIEW • VOGIN-IP-LEZING, 16 MARCH 2023 •Explanations can serve different purposes Different people need different explanations Different situations call for different explanations There is a benefit in interactive explanations (especially for complex domains)

- 30. THERE IS A BENEFIT IN INTERACTIVE EXPLANATIONS VOGIN-IP-LEZING, 16 MARCH 2023

- 31. MOVIE RECOMMENDATIONS SCAFFER ET AL, 2015 Left to right: 1) user’s profile items 2) top-k similar users; 3) top-n recommendations. Adds, deletes, or re- rates produce updated recommendations VOGIN-IP-LEZING, 16 MARCH 2023

- 32. SETFUSION PARRA & BRUSILOVSKY, 2015 Which items or people Look at the intersections Contribution to results (color-coded) VOGIN-IP-LEZING, 16 MARCH 2023

- 33. BENEFITS (i) dynamic feedback improves the effectiveness of profile updates, (ii) when dynamic feedback is present, users can identify and remove items that contribute to poor recommendations, (iii) profile update tasks improve perceived accuracy of recommendations and trust in the recommender, regardless of actual recommendation accuracy. VOGIN-IP-LEZING, 16 MARCH 2023

- 34. AFFORDANC ES AND SERENDIPIT Y Smets, Annelien, et al. "Serendipity in Recommender Systems Beyond the Algorithm: A Feature Repository and Experimental Design." 16th ACM Conference on Recommender Systems. CEUR Workshop Proceedings. 2022. VOGIN-IP-LEZING, 16 MARCH 2023

- 35. TAKE HOME • VOGIN-IP-LEZING, 16 MARCH 2023 •Explanations can serve different purposes Different people need different explanations Different situations call for different explanations There is a benefit in interactive explanations (especially for complex domains)

- 36. THANK YOU! Long Term Program (10Y) ROBUST: Trustworthy AI-based Systems for Sustainable Growth. 17 new labs, 85 PhD positions https://icai.ai/icai-labs/ n.tintarev@maastrichtuniversity.nl @Nava@idf.social VOGIN-IP-LEZING, 16 MARCH 2023

- 37. REFERENCES (1/3) Beel, Joeran, and Haley Dixon. "The ‘Unreasonable’ Effectiveness of Graphical User Interfaces for Recommender Systems." Adjunct Proceedings of the 29th ACM Conference on User Modeling, Adaptation and Personalization. 2021. Bilgic, Mustafa, and Raymond J. Mooney. "Explaining recommendations: Satisfaction vs. promotion." Beyond personalization workshop, IUI. Vol. 5. 2005. Kang, Byungkyu, et al. "What am I not seeing? An interactive approach to social content discovery in microblogs." International Conference on Social Informatics. Springer, Cham, 2016. Jin, Yucheng, Bruno Cardoso, and Katrien Verbert. "How do different levels of user control affect cognitive load and acceptance of recommendations?." Jin, Y., Cardoso, B. and Verbert, K., 2017, August. How do different levels of user control affect cognitive load and acceptance of recommendations?. In Proceedings of the 4th Joint Workshop on Interfaces and Human Decision Making for Recommender Systems co-located with ACM Conference on Recommender Systems (RecSys 2017). Vol. 1884. CEUR Workshop Proceedings, 2017. Jin, Yucheng, Nava Tintarev, and Katrien Verbert. "Effects of individual traits on diversity-aware music recommender user interfaces." Proceedings of the 26th Conference on User Modeling, Adaptation and Personalization. 2018. Mulder, Mats, et al. "Operationalizing framing to support multiperspective recommendations of opinion pieces." Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency. 2021. VOGIN-IP-LEZING, 16 MARCH 2023

- 38. REFERENCES (2/3) Najafian, Shabnam, et al. "Factors influencing privacy concern for explanations of group recommendation." Proceedings of the 29th ACM Conference on User Modeling, Adaptation and Personalization. 2021. Parra, Denis, and Peter Brusilovsky. "User-controllable personalization: A case study with SetFusion." International Journal of Human-Computer Studies 78 (2015): 43-67. 🏆 Rieger, A., Draws, T., Theune, M., & Tintarev, N. (2021, August). This Item Might Reinforce Your Opinion: Obfuscation and Labeling of Search Results to Mitigate Confirmation Bias. In Proceedings of the 32nd ACM Conference on Hypertext and Social Media (pp. 189-199). Rieger, A et al. Towards Healthy Online Debate: An Investigation of Debate Summaries and Personalized Persuasive Suggestions. Explainable User Modeling workshop. UMAP’22. (to appear)Schaffer, James, Tobias Hollerer, and John O'Donovan. "Hypothetical recommendation: A study of interactive profile manipulation behavior for recommender systems." The Twenty-Eighth International Flairs Conference. 2015. Di Sciascio, Cecilia, Vedran Sabol, and Eduardo E. Veas. "Rank as you go: User-driven exploration of search results." Proceedings of the 21st international conference on intelligent user interfaces. 2016. 🏆 Tintarev, Nava, and Judith Masthoff. "The effectiveness of personalized movie explanations: An experiment using commercial meta-data." International Conference on Adaptive Hypermedia and Adaptive Web-Based Systems. Springer, Berlin, Heidelberg, 2008. VOGIN-IP-LEZING, 16 MARCH 2023

- 39. REFERENCES (3/3) Tintarev, Nava, John O'donovan, and Alexander Felfernig. "Introduction to the special issue on human interaction with artificial advice givers." ACM Transactions on Interactive Intelligent Systems (TiiS) 6.4 (2016): 1-12. Tintarev, Nava, et al. "Same, Same, but Different: Algorithmic Diversification of Viewpoints in News." Adjunct Publication of the 26th Conference on User Modeling, Adaptation and Personalization. ACM, 2018. Tintarev, Nava, and Judith Masthoff. "Beyond explaining single item recommendations." Recommender Systems Handbook (3rd Ed). Springer, New York, NY, 2022. 711-756. 2022. Verbert, Katrien, et al. "Visualizing recommendations to support exploration, transparency and controllability." Proceedings of the 2013 international conference on Intelligent user interfaces. 2013. VOGIN-IP-LEZING, 16 MARCH 2023

- 40. User profile (PRO) Algorithm parameters (PAR) Recommendations (REC) Sweet spot for cognitive lo Jin et al. 2016

- 41. EVALUATION METHOD Between-subjects Independent variable: settings of user control Dependent variables: Acceptance (ratings) Cognitive load (NASA-TLX), MS, VM Framework Knijnenburg et al.

- 42. CONCLUSION Main effects: REC has lowest cognitive load and highest acceptance Two-way interaction: does not necessarily result in higher cognitive load. Adding an additional control component to PAR increases the acceptance. PRO*PAR has less cognitive load than PRO and PAR Three-way interaction: it increases acceptance, and does not lead to higher cognitive load. Increase interaction times and accuracy High MS (expertise) leads to higher quality, and thereby result in higher acceptance User profile (PRO) Algorithm parameters (PAR) Recommendations (REC) VOGIN-IP-LEZING, 16 MARCH 2023

- 43. VOGIN-IP-LEZING, 16 MARCH 2023

- 44. SOCIAL SEARCH BROWSER CHURCH ET AL, 2010 VOGIN-IP-LEZING, 16 MARCH 2023

- 45. ACADEMIC TALKS VERBERT ET AL. 2013 1) Which of user’s bookmarked papers are also bookmarked by user L. Aroyo 2) Which are bookmarked by L. Aroyo, but not recommended 3) Which are recommended by selected recommenders, and also bookmarked by VOGIN-IP-LEZING, 16 MARCH 2023

- 46. ARTICLES URANK A) Tag Box – keyword summary B) Query Box – Keywords that originated the current ranking state C) Document List – Augmented document titles D) Ranking View – relevance scores E) Document Viewer – title, year, and snippet of document with augmented keywords F) Ranking Controls – wrap buttons for ranking settings Di Sciasco et al., 2016 VOGIN-IP-LEZING, 16 MARCH 2023

- 47. INTERSECTION EXPLORER CARDOSO ET AL. 2018 Choice of algorithm, user, content- features Which of the factors are used in the selection Resulting recs. VOGIN-IP-LEZING, 16 MARCH 2023

Notes de l'éditeur

- Title: Interactive and adaptive explanation interfaces for recommender systems Abstract: Recommender systems such as Amazon, offer users recommendations, or suggestions of items to try or buy. Recommender systems use algorithms that can be categorized as ranking algorithms, which result in an increase in the prominence of some information. Other information (e.g., low rank or low confidence recommendations) is consequently filtered and not shown to people. However, it is often not clear to a user whether the recommender system's advice is suitable to be followed, e.g., whether it is correct, whether the right information was taken into account, whether crucial information is missing. This keynote will describe how explanations can help bridge that gap, what we constitute as helpful explanations, as well as the considerations and external factors we must consider when determining the level of helpfulness. I will introduce the notion of interactive explanation interfaces for recommender systems, which support exploration rather than explaining (potentially poor) recommendations post-hoc. These interfaces support users in exploring the effects of the user model and algorithmic parameters on resulting recommendations. I will also address the importance of user modeling in the design of explanation interfaces. Both the characteristics of the people (e.g., expertise, cognitive capacity) and the situation (e.g., which other people are affected, or which variables are influential) place different requirements on which explanations are useful, also w.r.t. to different presentational choices (e.g., with regard to modality, degree of interactivity, level of detail). Simply put, ``One size does not fit all’’. 45 minutes including Q&A

- Emphasis keywords

- https://www.um.org/umap2021/12-posters/25-the-unreasonable-effectiveness-of-graphical-user-interfaces-for-recommender-systems

- Ways of presenting – list with score, top-N, single one, set of items Variety of domains – some more expected than others

- People had a hard time having an opinion for ”baseline” explanations

- Add references from Recsys

- Effects of personal characteristics on perceptions of diversity, and item acceptance. Yucheng Jin et al, UMAP’18 Perceptions of diversity differed between two visualizations for people with high musical sophistication. They also differed for people with high visual working memory. Individual differences matter!

- Novel algorithms for diverse selection Enrichment to move beyond diversification on `relevance’ Topical (query) relevance Ranked by click-through or popularity Previous consumption (e.g., LFP) Which diversity metrics can we develop on the basis of debatability metadata? Which diversification strategies can we model? What is the accuracy, and coverage of different diversification models? Can we further improve models of diversification by considering individual users?

- Touch back on exploratory aims Diifferent people need different expllanations There is a benefit in interaction

- Lightweight interventions, but we can have repeat interactions In order to study enduring effects, we developed an interactive mockup that simulates a search engine. This allows for repeat interactions and recording behavior as it evolves. And we have just completed running a first study and I look forward to seeing how this and other interactive interfaces enable us to study how learning occurs, and how meta-cognition develops.

- Left to right: 1) user’s profile items 2) top-k similar users; 3) top-n recommendations. Adds, deletes, or re-rates produce updated recommendations The dark blue lines appear when a user clicks a node and show provenance data for recommendations and the nearest neighbors who contributed to them. Clicking a movie recommendation on the right side of the page opens the movie information page on Rottentomatoes.com.

- We used the Spotify API~\footnote{\url{https://developer.spotify.com/web-api}, accessed June 2018.} to design a music recommender system and to present the user controls for three distinct recommender components. We leveraged the algorithm introduced in Section \ref{sec:general-algorithm} to control the recommendation process as well as our user interface and user controls. \begin{figure*} \centering \includegraphics[width=1\columnwidth]{figures/exp1/vis1} \caption{a): the recommendation source shows available top artists, tracks and genre tags. b): the recommendation processor enables users to adjust the weight of the input data type and individual data items. c): play-list style recommendations. Some UI controls are disabled in specific settings of user control, e.g., the sliders in b) are grayed out in the setting 5: REC*PRO. }~\label{fig:vis1} \end{figure*} We created four scenarios for the user task of selecting music, with each scenario represented by setting a pair of audio feature values between 0.0 and 1.0. We set a value for each scenario based on the explanation of audio feature value in the Spotify API. The used scenarios include: \textit{``Rock night - my life needs passion" }assigning attribute ``energy" between 0.6 and 1.0; \textit{``Dance party - dance till the world ends"} setting ``danceability" between 0.6 and 1.0; \textit{``A joyful after all exams"} with ``danceability" between 0.6 and 1.0; \textit{``Cannot live without hip-hop"} with ``speechiness" from 0.33 to 0.66. subsubsection{User interface} The user interface of the recommender is featured with ``drag and drop" interactions. The interface consists of three parts, as presented in Figure~\ref{fig:vis1}. \begin{itemize} \item[(a)] The \emph{user profile} works as a warehouse of source data, such as top artists, top tracks, and top genres, generated from past listening history. \item[(b)] The \emph{algorithm parameters} shows areas in which source items can be dropped from part (a). The dropped data are bound to UI controls such as sliders or sortable lists for weight adjustment. It also contains an additional info view to inspect details of selected data items. \item[(c)] The \emph{recommendations}: the recommended results are shown in a play-list style. \end{itemize} As presented in Figure~\ref{fig:vis1}, we use three distinct colors to represent the recommendation source data as visual cues: brown for artists, green for tracks, and blue for genres. Additional source data for a particular type is loaded by clicking the \textbf{``+''} icon next to the title of the source data type. Likewise, we use the same color schema to code the seeds (a), selected source data and data type slider (b), and recommendations (c). As a result, the visual cues show the relation among the data in three steps of the recommendation process. When users click on a particular data item in the recommendation processor, the corresponding recommended items are highlighted, and an additional info view displays its details. \subsubsection{User controls} %The interactive recommendation framework proposed by ~\cite{he2016interactive} defines three main components of interactive recommenders. %KV omitted and referred to our own framework Based on our framework described in Section \ref{sec:UIframework}, we defined three user control components in our study: (1) user profile (PRO), (2) algorithm parameters (PAR), (3) recommendations (REC) (see Table~\ref{tab:levelofcontrol}). \begin{table} \centering \begin{tabular}{p{14em} p{6em} p{15em}} {\small \textit{Components}} & {\small \textit{Control levels}} & {\small \textit{User controls}}\\ \midrule \small{Algorithm parameters (\textbf{PAR})} & \small{High} & \small{Modify the weight of the selected or generated data in the recommender engine} \\ \small{User profile (\textbf{PRO})} & \small{Middle} & \small{Select which user profile will be used in the recommender engine and check additional info of the user profile} \\ \small{Recommendations (\textbf{REC})} & \small{Low} & \small{Remove and sort recommendations} \\ \bottomrule \end{tabular} \caption{The three types of user control employed in our study.}~\label{tab:levelofcontrol} \end{table} ~\paragraph{Control for algorithm parameters (\textbf{PAR}).} This type of control allows users to tweak the influence of different underlying algorithms. To support this level of control, multiple UI components are developed to adjust the weight associated with the type of data items, or the weight associated with an individual data item. Users are able to specify their preferences for each data type by manipulating a slider for each data type. By sorting the list of dropped data items, users can set the weight of each item in this list (Figure~\ref{fig:vis1}b). ~\paragraph{Control for user profile (\textbf{PRO}).} This type of control influences the seed items used for recommendation. A drag and drop interface allows users to intuitively add a new source data item to update the recommendations (Figure~\ref{fig:vis1}a). When a preferred source item is dropped to the recommendation processor, a progress animation will play until the end of the processing. Users are also able to simply remove a dropped data item from the processor by clicking the corresponding \textbf{``x''} icon. Moreover, by selecting an individual item, users can inspect its detail: artists are accompanied by their name, an image, popularity, genres, and number of followers, tracks are shown with their name, album cover, and audio clip, and genres are accompanied by a play-list whose name contains the selected genre tag. ~\paragraph{Control for recommendations (\textbf{REC}).} This type of control influences the recommended songs directly. Since the order of items in a list may affect the experience of recommendations~\citep{zhao2017toward}, manipulations on recommendations include reordering tracks in a play-list. It also allows users to remove an unwanted track from a play-list. When doing so, a new recommendation candidate replaces the removed item. The action of removing can be regarded as a kind of implicit feedback to recommendations. Although a rating function has been implemented for each item in a play-list, the rating data is not used to update the user's preference for music recommendations. Therefore, user rating is not considered as a user control for the purposes of this study.

- We employed a between-subjects study to investigate the effects of interactions among different user control on acceptance, perceived diversity, and cognitive load. We consider each of three user control components as a variable. By following the 2x2x2 factorial design we created eight experimental settings (Table~\ref{tab:table1}), which allows us to analyze three main effects, three two-way interactions, and one three-way interaction. We also investigate which specific \textit{personal characteristics} (musical sophistication, visual memory capacity) influence acceptance and perceived diversity. Each experimental setting is evaluated by a group of participants (N=30). Of note, to minimize the effects of UI layout, all settings have the same UI and disable the unsupported UI controls, e.g., graying out sliders. As shown in section ~\ref{evaluation questions}, we employed Knijnenburg et al.'s framework~\citep{knijnenburg2012explaining} to measure the six subjective factors, perceived quality, perceived diversity, perceived accuracy, effectiveness, satisfaction, and choice difficulty~\citep{knijnenburg2012explaining}. In addition, we measured cognitive load by using a classic cognitive load testing questionnaire, the NASA-TLX~\footnote{https://humansystems.arc.nasa.gov/groups/tlx}. It assesses the cognitive load on six aspects: mental demand, physical demand, temporal demand, performance, effort, and frustration. The procedure follows the design outlined in the general methodology (c.f., Section \ref{sec:general-procedure}). The \textit{experimental task} is to compose a play-list for the chosen scenario by interacting with the recommender system. Participants were presented with play-list style recommendations (Figure~\ref{fig:vis1}c). Conditions were altered on a between-subjects basis. Each participant was presented with only one setting of user control. For each setting, initial recommendations are generated based on the selected top three artists, top two tracks, and top one genre. According to the controls provided in a particular setting, participants were able to manipulate the recommendation process.

- Main effects: REC has lowest cgload and highest acceptance Two-way: All the settings that combine two control components do not lead to significantly higher cognitive load than using only one control component. combing multiple control components potentially increases acceptance without increasing cognitive load significantly. visual memory is not a significant factor that affects the cognitive load of controlling recommender systems. In other words, controlling the more advanced recommendation components in this study does not seem to demand a high visual memory. In addition, we did not find an effect of visual memory on acceptance (or perceived accuracy and quality). One possible explanation is that users with higher musical so- phistication are able to leverage different control components to explore songs, and this influences their perception of recommenda- tion quality, thereby accepting more songs. Our results show that the settings of user control significantly influence cognitive load and recommendation acceptance. We discuss the results by the main effects and interaction effects in a 2x2x2 factorial design. Moreover, we discuss how visual memory and musical sophistication affect cognitive load, perceived diversity, and recommendation acceptance. \subsubsection{Main effects} We discuss the main effects of three control components. Increased control level; from control of recommendations (REC) to user profile (PRO) to algorithm parameters (PAR); leads to higher cognitive load (see Figure \ref{fig:margin}c). The increased cognitive load, in turn, leads to lower interaction times. Compared to the control of algorithm parameters (PAR) or user profile (PRO), the control of recommendations (REC) introduces the least cognitive load and supports users in finding songs they like. We observe that most existing music recommender systems only allow users to manipulate the recommendation results, e.g., users provide feedback to a recommender through acceptance. However, the control of recommendations is a limited operation that does not allow users to understand or control the deep mechanism of recommendations. \subsubsection{Two-way interaction effects} Adding multiple controls allows us to improve on existing systems w.r.t. control, and do not necessarily result in higher cognitive load. Adding an additional control component to algorithm parameters increases the acceptance of recommended songs significantly. Interestingly, all the settings that combine two control components do \textit{not} lead to significantly higher cognitive load than using only one control component. We even find that users' cognitive load is significantly \textit{lower} for (PRO*PAR) than (PRO, PAR), which shows a benefit of combining user profile and algorithm parameters in user control. Moreover, combing multiple control components potentially increases acceptance without increasing cognitive load significantly. Arguably, it is beneficial to combine multiple control components in terms of acceptance and cognitive load. \subsubsection{Three-way interaction effects} The interaction of PRO*PAR*REC tends to increase acceptance (see Figure \ref{fig:margin}a), and it does not lead to higher cognitive load (see Figure \ref{fig:margin}c). Moreover, it also tends to increase interaction times and accuracy. Therefore, we may consider having three control components in a system. Consequently, we answer the research question. \textbf{RQ1}: \textit{The UI setting (user control, visualization, or both) has a significant effect on recommendation acceptance?} It seems that combining PAR with a second control component or combing three control components increases acceptance significantly. %KV: this paragraph refers to different RQs: either rephrase or omit? -SOLVED \subsubsection{Effects of personal characteristics} Having observed the trends across all users, we survey the difference in cognitive load and item acceptance due to personal characteristics. We study two kinds of characteristics: visual working memory and musical sophistication. \paragraph{Visual working memory} The SEM model suggests that visual memory is not a significant factor that affects the cognitive load of controlling recommender systems. The cognitive load for the type of controls used may not be strongly affected by individual differences in visual working memory. In other words, controlling the more advanced recommendation components in this study does not seem to demand a high visual memory. In addition, we did not find an effect of visual memory on acceptance (or perceived accuracy and quality). Finally, the question items for diversity did not converge in our model, so we are not able to make a conclusion about the influence of visual working memory on diversity. \paragraph{Musical sophistication} Our results imply that high musical sophistication allows users to perceive higher recommendation quality, and may thereby be more likely to accept recommended items. However, higher musical sophistication also increases choice difficulty, which may negatively influence acceptance. One possible explanation is that users with higher musical sophistication are able to leverage different control components to explore songs, and this influences their perception of recommendation quality, thereby accepting more songs. Finally, the question items for diversity did not converge in our model, so we are not able to make a conclusion about the influence of musical sophistication on diversity.

- Tag Box – keyword summary. Query Box – Keywords that originated the current ranking state. Document List – Augmented document titles Ranking View – relevance scores Document Viewer – title, year, and snippet of document with augmented keywords Ranking Controls – wrap buttons for ranking settings