2018 MUMS Fall Course - Statistical Representation of Model Input (EDITED) - Ralph Smith, October 2, 2018

The document discusses the statistical representation of random inputs in continuum models. It gives examples of representing random fields with the Karhunen-Loève expansion, which expresses a random field as a sum of orthogonal deterministic basis functions weighted by uncorrelated random variables. Common choices for the covariance function in the expansion include the radial basis function, along with the limiting cases of fully correlated and uncorrelated fields. In applications, the covariance function can be approximated from samples of the random field.
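As a rough illustration of the Karhunen-Loève idea summarized above, the following sketch discretizes a covariance function on a uniform grid, keeps the leading eigenpairs, draws sample fields, and then re-estimates the covariance from those samples. It is not taken from the slides: the squared-exponential kernel, the length scale `L = 0.2`, the grid on [0, 1], the Gaussian coefficients, and the helper names `kl_modes` and `sample_field` are all assumptions made for illustration.

```python
import numpy as np

def kl_modes(cov, weight, n_terms):
    """Leading eigenpairs of a covariance matrix on a uniform grid.

    `weight` is the quadrature weight (grid spacing), so the returned
    modes satisfy sum(phi_k**2) * weight = 1 (discrete L2 normalization).
    """
    eigvals, eigvecs = np.linalg.eigh(cov * weight)   # ascending order
    order = np.argsort(eigvals)[::-1][:n_terms]       # largest first
    lam = np.clip(eigvals[order], 0.0, None)          # guard against round-off
    phi = eigvecs[:, order] / np.sqrt(weight)
    return lam, phi

def sample_field(mean, lam, phi, n_samples, rng):
    """Draw fields mean + sum_k sqrt(lam_k) * Q_k * phi_k with Q_k ~ N(0, 1)."""
    q = rng.standard_normal((n_samples, lam.size))
    return mean + (q * np.sqrt(lam)) @ phi.T

# Assumed setup: uniform grid on [0, 1], squared-exponential covariance.
n = 101
x = np.linspace(0.0, 1.0, n)
h = x[1] - x[0]
L = 0.2  # hypothetical correlation length
cov = np.exp(-(x[:, None] - x[None, :]) ** 2 / (2.0 * L**2))

lam, phi = kl_modes(cov, h, n_terms=10)

rng = np.random.default_rng(0)
fields = sample_field(np.zeros(n), lam, phi, n_samples=2000, rng=rng)

# Covariance approximated from samples of the random field.
cov_hat = np.cov(fields, rowvar=False)
```

The uniform quadrature weight keeps the scaled matrix symmetric, so `np.linalg.eigh` applies directly. A non-uniform rule would need a symmetrized weighting instead.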

Recommended

This lecture was presented as part of the Model Uncertainty: Statistical and Mathematical fall course.

2018 MUMS Fall Course - Statistical and Mathematical Techniques for Sensitivi... (The Statistical and Applied Mathematical Sciences Institute)

This lecture was presented as part of the Model Uncertainty: Statistical and Mathematical fall course.

2018 MUMS Fall Course - Bayesian inference for model calibration in UQ - Ralp... (The Statistical and Applied Mathematical Sciences Institute)

This talk was presented as part of the Trends and Advances in Monte Carlo Sampling Algorithms Workshop.

QMC Program: Trends and Advances in Monte Carlo Sampling Algorithms Workshop,... (The Statistical and Applied Mathematical Sciences Institute)

This talk was presented as part of the Trends and Advances in Monte Carlo Sampling Algorithms Workshop.

QMC Program: Trends and Advances in Monte Carlo Sampling Algorithms Workshop,... (The Statistical and Applied Mathematical Sciences Institute)

This talk was presented as part of the Trends and Advances in Monte Carlo Sampling Algorithms Workshop.

QMC Program: Trends and Advances in Monte Carlo Sampling Algorithms Workshop,... (The Statistical and Applied Mathematical Sciences Institute)

This lecture was presented as part of the Model Uncertainty: Statistical and Mathematical fall course.

2018 MUMS Fall Course - Mathematical surrogate and reduced-order models - Ral... (The Statistical and Applied Mathematical Sciences Institute)

This lecture was presented as part of the Model Uncertainty: Statistical and Mathematical fall course.

2018 MUMS Fall Course - Sampling-based techniques for uncertainty propagation... (The Statistical and Applied Mathematical Sciences Institute)

Many mathematical models use a large number of poorly-known parameters as inputs. Quantifying the influence of each of these parameters is one of the aims of sensitivity analysis. Global Sensitivity Analysis is an important paradigm for understanding model behavior, characterizing uncertainty, improving model calibration, etc. Inputs’ uncertainty is modeled by a probability distribution. There exist various measures built in that paradigm. This tutorial focuses on the so-called Sobol’ indices, based on functional variance analysis. Estimation procedures will be presented, and the choice of the designs of experiments these procedures are based on will be discussed. As Sobol’ indices have no clear interpretation in the presence of statistical dependences between inputs, it also seems promising to measure sensitivity with Shapley effects, based on the notion of Shapley value, which is a solution concept in cooperative game theory.

Program on Quasi-Monte Carlo and High-Dimensional Sampling Methods for Applie... (The Statistical and Applied Mathematical Sciences Institute)

Multidimensional integrals may be approximated by weighted averages of integrand values. Quasi-Monte Carlo (QMC) methods are more accurate than simple Monte Carlo methods because they carefully choose where to evaluate the integrand. This tutorial focuses on how quickly QMC methods converge to the correct answer as the number of integrand values increases. The answer may depend on the smoothness of the integrand and the sophistication of the QMC method. QMC error analysis may assume that the integrand belongs to a reproducing kernel Hilbert space, or that the integrand is an instance of a stochastic process with known covariance structure. These two approaches have interesting parallels. This tutorial also explores how the computational cost of achieving a good approximation to the integral depends on the dimension of the domain of the integrand. Finally, this tutorial explores methods for determining how many integrand values are needed to satisfy the error tolerance. Relevant software is described.

Program on Quasi-Monte Carlo and High-Dimensional Sampling Methods for Applie... (The Statistical and Applied Mathematical Sciences Institute)

A fundamental numerical problem in many sciences is to compute integrals. These integrals can often be expressed as expectations and then approximated by sampling methods. Monte Carlo sampling is very competitive in high dimensions, but has a slow rate of convergence. One reason for this slowness is that the MC points form clusters and gaps. Quasi-Monte Carlo methods greatly reduce such clusters and gaps, and under modest smoothness demands on the integrand they can greatly improve accuracy. This can even take place in problems of surprisingly high dimension. This talk will introduce the basics of QMC and randomized QMC. It will include discrepancy and the Koksma-Hlawka inequality, some digital constructions and some randomized QMC methods that allow error estimation and sometimes bring improved accuracy.

Program on Quasi-Monte Carlo and High-Dimensional Sampling Methods for Applie... (The Statistical and Applied Mathematical Sciences Institute)

We study the behavior of, and sharp bounds for, the zeros of infinite sequences of polynomials orthogonal with respect to a Geronimus perturbation of a positive Borel measure on the real line.

Zeros of orthogonal polynomials generated by a Geronimus perturbation of meas... (Edmundo José Huertas Cejudo)

Related content

Trending (20)

Reinforcement Learning: Hidden Theory and New Super-Fast Algorithms

Density theorems for anisotropic point configurations

Quantitative norm convergence of some ergodic averages

QMC Program: Trends and Advances in Monte Carlo Sampling Algorithms Workshop,...

A Szemeredi-type theorem for subsets of the unit cube

Subgradient Methods for Huge-Scale Optimization Problems - Юрий Нестеров, Cat...

Variants of the Christ-Kiselev lemma and an application to the maximal Fourie...

Random Matrix Theory and Machine Learning - Part 1

Program on Quasi-Monte Carlo and High-Dimensional Sampling Methods for Applie...

The proof complexity of matrix algebra - Newton Institute, Cambridge 2006

Program on Quasi-Monte Carlo and High-Dimensional Sampling Methods for Applie...

Doubly Accelerated Stochastic Variance Reduced Gradient Methods for Regulariz...

Geometric and viscosity solutions for the Cauchy problem of first order

Program on Quasi-Monte Carlo and High-Dimensional Sampling Methods for Applie...

Phase diagram at finite T & Mu in strong coupling limit of lattice QCD

Similar to 2018 MUMS Fall Course - Statistical Representation of Model Input (EDITED) - Ralph Smith, October 2, 2018

We study the behavior of, and sharp bounds for, the zeros of infinite sequences of polynomials orthogonal with respect to a Geronimus perturbation of a positive Borel measure on the real line.

Zeros of orthogonal polynomials generated by a Geronimus perturbation of meas... (Edmundo José Huertas Cejudo)

Very often one has to deal with high-dimensional random variables (RVs). A high-dimensional RV can be described by its probability density function (pdf), by the corresponding probability characteristic function (pcf), or by a function representation. Here the interest is mainly in computing characterisations such as the entropy, relations between two distributions such as their Kullback-Leibler divergence, or more general measures such as $f$-divergences, among others. These are all computed from the pdf, which is often not available directly, and even representing it in a numerically feasible fashion is a computational challenge when the dimension $d$ is moderately large. Actually computing these characterisations in the high-dimensional case is an even greater numerical challenge.

To make the task computationally feasible, we propose to represent the density by a high-order tensor product and to approximate it in a low-rank format.

Low rank tensor approximation of probability density and characteristic funct... (Alexander Litvinenko)

I give an overview of methods for computing the KL expansion. For tensor grids one can use high-dimensional Fourier methods; for non-tensor grids, hierarchical matrices.

Hierarchical matrices for approximating large covariance matries and computin... (Alexander Litvinenko)

Similar to 2018 MUMS Fall Course - Statistical Representation of Model Input (EDITED) - Ralph Smith, October 2, 2018 (20)

Zeros of orthogonal polynomials generated by a Geronimus perturbation of meas...

Random Matrix Theory and Machine Learning - Part 3

Scattering theory analogues of several classical estimates in Fourier analysis

Low rank tensor approximation of probability density and characteristic funct...

Hierarchical matrices for approximating large covariance matries and computin...

An Efficient Boundary Integral Method for Stiff Fluid Interface Problems

More from The Statistical and Applied Mathematical Sciences Institute

Recently, the machine learning community has expressed strong interest in applying latent variable modeling strategies to causal inference problems with unobserved confounding. Here, I discuss one of the big debates that occurred over the past year, and how we can move forward. I will focus specifically on the failure of point identification in this setting, and discuss how this can be used to design flexible sensitivity analyses that cleanly separate identified and unidentified components of the causal model.

Causal Inference Opening Workshop - Latent Variable Models, Causal Inference,... (The Statistical and Applied Mathematical Sciences Institute)

I will discuss paradigmatic statistical models of inference and learning from high dimensional data, such as sparse PCA and the perceptron neural network, in the sub-linear sparsity regime. In this limit the underlying hidden signal, i.e., the low-rank matrix in PCA or the neural network weights, has a number of non-zero components that scales sub-linearly with the total dimension of the vector. I will provide explicit low-dimensional variational formulas for the asymptotic mutual information between the signal and the data in suitable sparse limits. In the setting of support recovery these formulas imply sharp 0-1 phase transitions for the asymptotic minimum mean-square-error (or generalization error in the neural network setting). A similar phase transition was analyzed recently in the context of sparse high-dimensional linear regression by Reeves et al.

2019 Fall Series: Special Guest Lecture - 0-1 Phase Transitions in High Dimen... (The Statistical and Applied Mathematical Sciences Institute)

Many different measurement techniques are used to record neural activity in the brains of different organisms, including fMRI, EEG, MEG, lightsheet microscopy and direct recordings with electrodes. Each of these measurement modes has its advantages and disadvantages concerning the resolution of the data in space and time, the directness of measurement of the neural activity and which organisms it can be applied to. For some of these modes and for some organisms, significant amounts of data are now available in large standardized open-source datasets. I will report on our efforts to apply causal discovery algorithms to, among others, fMRI data from the Human Connectome Project, and to lightsheet microscopy data from zebrafish larvae. In particular, I will focus on the challenges we have faced both in terms of the nature of the data and the computational features of the discovery algorithms, as well as the modeling of experimental interventions.

Causal Inference Opening Workshop - Causal Discovery in Neuroimaging Data - F... (The Statistical and Applied Mathematical Sciences Institute)

Bayesian Additive Regression Trees (BART) has been shown to be an effective framework for modeling nonlinear regression functions, with strong predictive performance in a variety of contexts. The BART prior over a regression function is defined by independent prior distributions on tree structure and leaf or end-node parameters. In observational data settings, Bayesian Causal Forests (BCF) has successfully adapted BART for estimating heterogeneous treatment effects, particularly in cases where standard methods yield biased estimates due to strong confounding.

We introduce BART with Targeted Smoothing, an extension which induces smoothness over a single covariate by replacing independent Gaussian leaf priors with smooth functions. We then introduce a new version of the Bayesian Causal Forest prior, which incorporates targeted smoothing for modeling heterogeneous treatment effects which vary smoothly over a target covariate. We demonstrate the utility of this approach by applying our model to a timely women's health and policy problem: comparing two dosing regimens for an early medical abortion protocol, where the outcome of interest is the probability of a successful early medical abortion procedure at varying gestational ages, conditional on patient covariates. We discuss the benefits of this approach in other women’s health and obstetrics modeling problems where gestational age is a typical covariate.

Causal Inference Opening Workshop - Smooth Extensions to BART for Heterogeneo... (The Statistical and Applied Mathematical Sciences Institute)

Difference-in-differences is a widely used evaluation strategy that draws causal inference from observational panel data. Its causal identification relies on the assumption of parallel trends, which is scale-dependent and may be questionable in some applications. A common alternative is a regression model that adjusts for the lagged dependent variable, which rests on the assumption of ignorability conditional on past outcomes. In the context of linear models, Angrist and Pischke (2009) show that the difference-in-differences and lagged-dependent-variable regression estimates have a bracketing relationship. Namely, for a true positive effect, if ignorability is correct, then mistakenly assuming parallel trends will overestimate the effect; in contrast, if the parallel trends assumption is correct, then mistakenly assuming ignorability will underestimate the effect. We show that the same bracketing relationship holds in general nonparametric (model-free) settings. We also extend the result to semiparametric estimation based on inverse probability weighting.

Causal Inference Opening Workshop - A Bracketing Relationship between Differe... (The Statistical and Applied Mathematical Sciences Institute)

We develop sensitivity analyses for weak nulls in matched observational studies while allowing unit-level treatment effects to vary. In contrast to randomized experiments and paired observational studies, we show for general matched designs that over a large class of test statistics, any valid sensitivity analysis for the weak null must be unnecessarily conservative if Fisher's sharp null of no treatment effect for any individual also holds. We present a sensitivity analysis valid for the weak null, and illustrate why it is conservative if the sharp null holds through connections to inverse probability weighted estimators. An alternative procedure is presented that is asymptotically sharp if treatment effects are constant, and is valid for the weak null under additional assumptions which may be deemed reasonable by practitioners. The methods may be applied to matched observational studies constructed using any optimal without-replacement matching algorithm, allowing practitioners to assess robustness to hidden bias while allowing for treatment effect heterogeneity.

Causal Inference Opening Workshop - Testing Weak Nulls in Matched Observation... (The Statistical and Applied Mathematical Sciences Institute)

The world of health care is full of policy interventions: a state expands eligibility rules for its Medicaid program, a medical society changes its recommendations for screening frequency, a hospital implements a new care coordination program. After a policy change, we often want to know, “Did it work?” This is a causal question; we want to know whether the policy CAUSED outcomes to change. One popular way of estimating causal effects of policy interventions is a difference-in-differences study. In this controlled pre-post design, we measure the change in outcomes of people who are exposed to the new policy, comparing average outcomes before and after the policy is implemented. We contrast that change to the change over the same time period in people who were not exposed to the new policy. The differential change in the treated group’s outcomes, compared to the change in the comparison group’s outcomes, may be interpreted as the causal effect of the policy. To do so, we must assume that the comparison group’s outcome change is a good proxy for the treated group’s (counterfactual) outcome change in the absence of the policy. This conceptual simplicity and wide applicability in policy settings make difference-in-differences an appealing study design. However, the apparent simplicity belies a thicket of conceptual, causal, and statistical complexity. In this talk, I will introduce the fundamentals of difference-in-differences studies and discuss recent innovations including key assumptions and ways to assess their plausibility, estimation, inference, and robustness checks.

Causal Inference Opening Workshop - Difference-in-differences: more than meet... (The Statistical and Applied Mathematical Sciences Institute)

We present recent advances and statistical developments for evaluating Dynamic Treatment Regimes (DTR), which allow the treatment to be dynamically tailored according to evolving subject-level data. Identification of an optimal DTR is a key component for precision medicine and personalized health care. Specific topics covered in this talk include several recent projects with robust and flexible methods developed for the above research area. We will first introduce a dynamic statistical learning method, adaptive contrast weighted learning (ACWL), which combines doubly robust semiparametric regression estimators with flexible machine learning methods. We will further develop a tree-based reinforcement learning (T-RL) method, which builds an unsupervised decision tree that maintains the nature of batch-mode reinforcement learning. Unlike ACWL, T-RL handles the optimization problem with multiple treatment comparisons directly through a purity measure constructed with augmented inverse probability weighted estimators. T-RL is robust, efficient and easy to interpret for the identification of optimal DTRs. However, ACWL seems more robust against tree-type misspecification than T-RL when the true optimal DTR is non-tree-type. At the end of this talk, we will also present a new Stochastic-Tree Search method called ST-RL for evaluating optimal DTRs.

Causal Inference Opening Workshop - New Statistical Learning Methods for Esti... (The Statistical and Applied Mathematical Sciences Institute)

A fundamental feature of evaluating causal health effects of air quality regulations is that air pollution moves through space, rendering health outcomes at a particular population location dependent upon regulatory actions taken at multiple, possibly distant, pollution sources. Motivated by studies of the public-health impacts of power plant regulations in the U.S., this talk introduces the novel setting of bipartite causal inference with interference, which arises when 1) treatments are defined on observational units that are distinct from those at which outcomes are measured and 2) there is interference between units in the sense that outcomes for some units depend on the treatments assigned to many other units. Interference in this setting arises due to complex exposure patterns dictated by physical-chemical atmospheric processes of pollution transport, with intervention effects framed as propagating across a bipartite network of power plants and residential zip codes. New causal estimands are introduced for the bipartite setting, along with an estimation approach based on generalized propensity scores for treatments on a network. The new methods are deployed to estimate how emission-reduction technologies implemented at coal-fired power plants causally affect health outcomes among Medicare beneficiaries in the U.S.

Causal Inference Opening Workshop - Bipartite Causal Inference with Interfere... (The Statistical and Applied Mathematical Sciences Institute)

Laine Thomas presented information about how causal inference is being used to determine the cost/benefit of the two most common surgical treatments for women: hysterectomy and myomectomy.

Causal Inference Opening Workshop - Bridging the Gap Between Causal Literatur... (The Statistical and Applied Mathematical Sciences Institute)

We provide an overview of some recent developments in machine learning tools for dynamic treatment regime discovery in precision medicine. The first development is a new off-policy reinforcement learning tool for continual learning in mobile health to enable patients with type 1 diabetes to exercise safely. The second development is a new inverse reinforcement learning tool which enables the use of observational data to learn how clinicians balance competing priorities for treating depression and mania in patients with bipolar disorder. Both practical and technical challenges are discussed.

Causal Inference Opening Workshop - Some Applications of Reinforcement Learni... (The Statistical and Applied Mathematical Sciences Institute)

The method of differences-in-differences (DID) is widely used to estimate causal effects. The primary advantage of DID is that it can account for time-invariant bias from unobserved confounders. However, the standard DID estimator will be biased if there is an interaction between history in the after period and the groups. That is, bias will be present if an event besides the treatment occurs at the same time and affects the treated group in a differential fashion. We present a method of bounds based on DID that accounts for an unmeasured confounder that has a differential effect in the post-treatment time period. These DID bracketing bounds are simple to implement and only require partitioning the controls into two separate groups. We also develop two key extensions for DID bracketing bounds. First, we develop a new falsification test to probe the key assumption that is necessary for the bounds estimator to provide consistent estimates of the treatment effect. Next, we develop a method of sensitivity analysis that adjusts the bounds for possible bias based on differences between the treated and control units from the pretreatment period. We apply these DID bracketing bounds and the new methods we develop to an application on the effect of voter identification laws on turnout. Specifically, we focus on estimating whether the enactment of voter identification laws in Georgia and Indiana had an effect on voter turnout.

Causal Inference Opening Workshop - Bracketing Bounds for Differences-in-Diff... (The Statistical and Applied Mathematical Sciences Institute)

Beth Ann Griffin presented information about how her company, Rand Corporation, uses their research to develop effective gun policy.Causal Inference Opening Workshop - Assisting the Impact of State Polcies: Br...

Causal Inference Opening Workshop - Assisting the Impact of State Polcies: Br...The Statistical and Applied Mathematical Sciences Institute

We study experimental design in large-scale stochastic systems with substantial uncertainty and structured cross-unit interference. We consider the problem of a platform that seeks to optimize supply-side payments p in a centralized marketplace where different suppliers interact via their effects on the overall supply-demand equilibrium, and propose a class of local experimentation schemes that can be used to optimize these payments without perturbing the overall market equilibrium. We show that, as the system size grows, our scheme can estimate the gradient of the platform’s utility with respect to p while perturbing the overall market equilibrium by only a vanishingly small amount. We can then use these gradient estimates to optimize p via any stochastic first-order optimization method. These results stem from the insight that, while the system involves a large number of interacting units, any interference can only be channeled through a small number of key statistics, and this structure allows us to accurately predict feedback effects that arise from global system changes using only information collected while remaining in equilibrium.Causal Inference Opening Workshop - Experimenting in Equilibrium - Stefan Wag...

Causal Inference Opening Workshop - Experimenting in Equilibrium - Stefan Wag...The Statistical and Applied Mathematical Sciences Institute

We discuss a general roadmap for generating causal inference based on observational studies used to general real world evidence. We review targeted minimum loss estimation (TMLE), which provides a general template for the construction of asymptotically efficient plug-in estimators of a target estimand for realistic (i.e, infinite dimensional) statistical models. TMLE is a two stage procedure that first involves using ensemble machine learning termed super-learning to estimate the relevant stochastic relations between the treatment, censoring, covariates and outcome of interest. The super-learner allows one to fully utilize all the advances in machine learning (in addition to more conventional parametric model based estimators) to build a single most powerful ensemble machine learning algorithm. We present Highly Adaptive Lasso as an important machine learning algorithm to include.

In the second step, the TMLE involves maximizing a parametric likelihood along a so-called least favorable parametric model through the super-learner fit of the relevant stochastic relations in the observed data. This second step bridges the state of the art in machine learning to estimators of target estimands for which statistical inference is available (i.e, confidence intervals, p-values etc). We also review recent advances in collaborative TMLE in which the fit of the treatment and censoring mechanism is tailored w.r.t. performance of TMLE. We also discuss asymptotically valid bootstrap based inference. Simulations and data analyses are provided as demonstrations.Causal Inference Opening Workshop - Targeted Learning for Causal Inference Ba...

Causal Inference Opening Workshop - Targeted Learning for Causal Inference Ba...The Statistical and Applied Mathematical Sciences Institute

We describe different approaches for specifying models and prior distributions for estimating heterogeneous treatment effects using Bayesian nonparametric models. We make an affirmative case for direct, informative (or partially informative) prior distributions on heterogeneous treatment effects, especially when treatment effect size and treatment effect variation is small relative to other sources of variability. We also consider how to provide scientifically meaningful summaries of complicated, high-dimensional posterior distributions over heterogeneous treatment effects with appropriate measures of uncertainty.Causal Inference Opening Workshop - Bayesian Nonparametric Models for Treatme...

Causal Inference Opening Workshop - Bayesian Nonparametric Models for Treatme...The Statistical and Applied Mathematical Sciences Institute

Climate change mitigation has traditionally been analyzed as some version of a public goods game (PGG) in which a group is most successful if everybody contributes, but players are best off individually by not contributing anything (i.e., “free-riding”)—thereby creating a social dilemma. Analysis of climate change using the PGG and its variants has helped explain why global cooperation on GHG reductions is so difficult, as nations have an incentive to free-ride on the reductions of others. Rather than inspire collective action, it seems that the lack of progress in addressing the climate crisis is driving the search for a “quick fix” technological solution that circumvents the need for cooperation.2019 Fall Series: Special Guest Lecture - Adversarial Risk Analysis of the Ge...

2019 Fall Series: Special Guest Lecture - Adversarial Risk Analysis of the Ge...The Statistical and Applied Mathematical Sciences Institute

This seminar discussed ways in which to produce professional academic writing, from academic papers to research proposals or technical writing in general.2019 Fall Series: Professional Development, Writing Academic Papers…What Work...

2019 Fall Series: Professional Development, Writing Academic Papers…What Work...The Statistical and Applied Mathematical Sciences Institute

Machine learning (including deep and reinforcement learning) and blockchain are two of the most noticeable technologies in recent years. The first one is the foundation of artificial intelligence and big data, and the second one has significantly disrupted the financial industry. Both technologies are data-driven, and thus there are rapidly growing interests in integrating them for more secure and efficient data sharing and analysis. In this paper, we review the research on combining blockchain and machine learning technologies and demonstrate that they can collaborate efficiently and effectively. In the end, we point out some future directions and expect more researches on deeper integration of the two promising technologies.2019 GDRR: Blockchain Data Analytics - Machine Learning in/for Blockchain: Fu...

2019 GDRR: Blockchain Data Analytics - Machine Learning in/for Blockchain: Fu...The Statistical and Applied Mathematical Sciences Institute

In this talk, we discuss QuTrack, a Blockchain-based approach to track experiment and model changes primarily for AI and ML models. In addition, we discuss how change analytics can be used for process improvement and to enhance the model development and deployment processes. 2019 GDRR: Blockchain Data Analytics - QuTrack: Model Life Cycle Management f...

2019 GDRR: Blockchain Data Analytics - QuTrack: Model Life Cycle Management f...The Statistical and Applied Mathematical Sciences Institute

Plus de The Statistical and Applied Mathematical Sciences Institute (20)

Causal Inference Opening Workshop - Latent Variable Models, Causal Inference,...

Causal Inference Opening Workshop - Latent Variable Models, Causal Inference,...

2019 Fall Series: Special Guest Lecture - 0-1 Phase Transitions in High Dimen...

2019 Fall Series: Special Guest Lecture - 0-1 Phase Transitions in High Dimen...

Causal Inference Opening Workshop - Causal Discovery in Neuroimaging Data - F...

Causal Inference Opening Workshop - Causal Discovery in Neuroimaging Data - F...

Causal Inference Opening Workshop - Smooth Extensions to BART for Heterogeneo...

Causal Inference Opening Workshop - Smooth Extensions to BART for Heterogeneo...

Causal Inference Opening Workshop - A Bracketing Relationship between Differe...

Causal Inference Opening Workshop - A Bracketing Relationship between Differe...

Causal Inference Opening Workshop - Testing Weak Nulls in Matched Observation...

Causal Inference Opening Workshop - Testing Weak Nulls in Matched Observation...

Causal Inference Opening Workshop - Difference-in-differences: more than meet...

Causal Inference Opening Workshop - Difference-in-differences: more than meet...

Causal Inference Opening Workshop - New Statistical Learning Methods for Esti...

Causal Inference Opening Workshop - New Statistical Learning Methods for Esti...

Causal Inference Opening Workshop - Bipartite Causal Inference with Interfere...

Causal Inference Opening Workshop - Bipartite Causal Inference with Interfere...

Causal Inference Opening Workshop - Bridging the Gap Between Causal Literatur...

Causal Inference Opening Workshop - Bridging the Gap Between Causal Literatur...

Causal Inference Opening Workshop - Some Applications of Reinforcement Learni...

Causal Inference Opening Workshop - Some Applications of Reinforcement Learni...

Causal Inference Opening Workshop - Bracketing Bounds for Differences-in-Diff...

Causal Inference Opening Workshop - Bracketing Bounds for Differences-in-Diff...

Causal Inference Opening Workshop - Assisting the Impact of State Polcies: Br...

Causal Inference Opening Workshop - Assisting the Impact of State Polcies: Br...

Causal Inference Opening Workshop - Experimenting in Equilibrium - Stefan Wag...

Causal Inference Opening Workshop - Experimenting in Equilibrium - Stefan Wag...

Causal Inference Opening Workshop - Targeted Learning for Causal Inference Ba...

Causal Inference Opening Workshop - Targeted Learning for Causal Inference Ba...

Causal Inference Opening Workshop - Bayesian Nonparametric Models for Treatme...

Causal Inference Opening Workshop - Bayesian Nonparametric Models for Treatme...

2019 Fall Series: Special Guest Lecture - Adversarial Risk Analysis of the Ge...

2019 Fall Series: Special Guest Lecture - Adversarial Risk Analysis of the Ge...

2019 Fall Series: Professional Development, Writing Academic Papers…What Work...

2019 Fall Series: Professional Development, Writing Academic Papers…What Work...

2019 GDRR: Blockchain Data Analytics - Machine Learning in/for Blockchain: Fu...

2019 GDRR: Blockchain Data Analytics - Machine Learning in/for Blockchain: Fu...

2019 GDRR: Blockchain Data Analytics - QuTrack: Model Life Cycle Management f...

2019 GDRR: Blockchain Data Analytics - QuTrack: Model Life Cycle Management f...

Dernier

Making communications land - Are they received and understood as intended? webinar

Thursday 2 May 2024

A joint webinar created by the APM Enabling Change and APM People Interest Networks, this is the third of our three part series on Making Communications Land.

presented by

Ian Cribbes, Director, IMC&T Ltd

@cribbesheet

The link to the write up page and resources of this webinar:

https://www.apm.org.uk/news/making-communications-land-are-they-received-and-understood-as-intended-webinar/

Content description:

How do we ensure that what we have communicated was received and understood as we intended and how do we course correct if it has not.Making communications land - Are they received and understood as intended? we...

Making communications land - Are they received and understood as intended? we...Association for Project Management

Mehran University Newsletter is a Quarterly Publication from Public Relations OfficeMehran University Newsletter Vol-X, Issue-I, 2024

Mehran University Newsletter Vol-X, Issue-I, 2024Mehran University of Engineering & Technology, Jamshoro

Dernier (20)

Making communications land - Are they received and understood as intended? we...

Making communications land - Are they received and understood as intended? we...

Basic Civil Engineering first year Notes- Chapter 4 Building.pptx

Basic Civil Engineering first year Notes- Chapter 4 Building.pptx

Mixin Classes in Odoo 17 How to Extend Models Using Mixin Classes

Mixin Classes in Odoo 17 How to Extend Models Using Mixin Classes

ICT role in 21st century education and it's challenges.

ICT role in 21st century education and it's challenges.

Asian American Pacific Islander Month DDSD 2024.pptx

Asian American Pacific Islander Month DDSD 2024.pptx

Unit-IV; Professional Sales Representative (PSR).pptx

Unit-IV; Professional Sales Representative (PSR).pptx

2018 MUMS Fall Course - Statistical Representation of Model Input (EDITED) - Ralph Smith, October 2, 2018

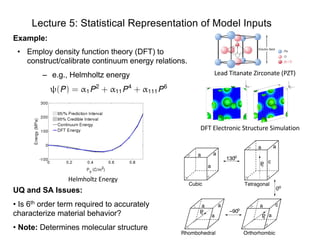

- 1. Lecture 5: Statistical Representation of Model Inputs

     Example: Lead Titanate Zirconate (PZT), DFT electronic structure simulation.

     Helmholtz energy:

     $$\psi(P) = \alpha_1 P^2 + \alpha_{11} P^4 + \alpha_{111} P^6$$

     UQ and SA Issues:
     - Is the 6th-order term required to accurately characterize material behavior?
     - Note: Determines molecular structure.
     - Employ density functional theory (DFT) to construct/calibrate continuum energy relations, e.g., the Helmholtz energy.

     [Figure: PZT crystal phases: cubic, tetragonal, rhombohedral, orthorhombic.]

- 2. Quantum-Informed Continuum Models

     Lead Titanate Zirconate (PZT), DFT electronic structure simulation.

     Broad Objective:
     - Use UQ/SA to help bridge scales from quantum to system.

     Objectives:
     - Employ density functional theory (DFT) to construct/calibrate continuum energy relations, e.g., the Helmholtz energy.

     Helmholtz energy:

     $$\psi(P) = \alpha_1 P^2 + \alpha_{11} P^4 + \alpha_{111} P^6$$

     Note: Linearly parameterized.

     UQ and SA Issues:
     - Is the 6th-order term required to accurately characterize material behavior?
     - Note: Determines molecular structure.

- 3. Example 2: Pressurized Water Reactors (PWR)

     Models:
     - Involve neutron transport, thermal-hydraulics, chemistry.
     - Inherently multi-scale, multi-physics.

     CRUD Measurements: Consist of low-resolution images at a limited number of locations.

- 4. Pressurized Water Reactors (PWR)

     Thermo-Hydraulic Equations: Mass, momentum and energy balance for fluid

     $$\frac{\partial}{\partial t}(\alpha_f \rho_f) + \nabla \cdot (\alpha_f \rho_f v_f) = -\Gamma$$

     $$\alpha_f \rho_f \frac{\partial v_f}{\partial t} + \alpha_f \rho_f v_f \cdot \nabla v_f + \nabla \cdot \sigma_f^R + \alpha_f \nabla \cdot \sigma + \alpha_f \nabla p_f = -F^R - F + \Gamma (v_f - v_g)/2 + \alpha_f \rho_f g$$

     $$\frac{\partial}{\partial t}(\alpha_f \rho_f e_f) + \nabla \cdot (\alpha_f \rho_f e_f v_f + T h) = (T_g - T_f) H + T_f \Delta_f - T_g (H - \alpha_g \nabla \cdot h) + h \cdot \nabla T - \Gamma \left[ e_f + T_f (s^* - s_f) \right] - p_f \left( \frac{\partial \alpha_f}{\partial t} + \nabla \cdot (\alpha_f v_f) + \frac{\Gamma}{\rho_f} \right)$$

     Notes:
     - Similar relations for gas and bubbly phases.
     - Surrogate models must conserve mass, energy, and momentum.
     - Many parameters are spatially varying and represented by random fields.

     Challenges:
     - Codes can have 15-30 closure relations and up to 75 parameters.
     - Codes and closure relations often "borrowed" from other physical phenomena; e.g., single phase fluids, airflow over a car (CFD code STAR-CCM+).
     - Calibration necessary and closure relations can conflict.
     - Inference of random fields requires high- (infinite-) dimensional theory.

- 5. Representation of Random Inputs

     Example 1: Consider the Helmholtz energy

     $$\psi(P) = \alpha_1 P^2 + \alpha_{11} P^4 + \alpha_{111} P^6$$

     with frequency-dependent random parameters

     $$\psi(P, \omega, f) = \alpha_1(f, \omega) P^2 + \alpha_{11}(f, \omega) P^4 + \alpha_{111}(f, \omega) P^6$$

     Challenge 1: Difficult to work with probabilities associated with random events $\omega \in \Omega$.

     Solution: Every realization $\omega \in \Omega$ yields a value $q \in Q \subset \mathbb{R}$. Work in the image probability space $(\mathbb{R}, \mathcal{B}(\mathbb{R}), \rho(q))$ instead of $(\Omega, \mathcal{F}, P)$.

     Challenge 2: How do we represent random fields, e.g., $\alpha_1(f, \omega)$, that are infinite-dimensional?

     Solution: Develop a representation and approximation framework.

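- 6. Example and Motivation

     Example 2: Heat equation

     $$\frac{\partial T}{\partial t} = \frac{\partial}{\partial x} \left( \alpha(x, \omega) \frac{\partial T}{\partial x} \right) + f(t, x), \quad -1 < x < 1, \ t > 0$$

     $$T(t, -1, \omega) = T_\ell(\omega), \quad T(t, 1, \omega) = T_r(\omega), \quad t > 0$$

     $$T(0, x, \omega) = T_0(\omega), \quad -1 < x < 1$$

     Motivation: Consider

     $$\frac{\partial \rho}{\partial t} = \alpha \frac{\partial^2 \rho}{\partial x^2}, \quad 0 < x < L, \ t > 0$$

     $$\rho(t, 0) = \rho(t, L) = 0, \quad t > 0$$

     $$\rho(0, x) = \rho_0(x), \quad 0 < x < L$$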

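- 7. Example and Motivation

     Motivation: Consider

     $$\frac{\partial \rho}{\partial t} = \alpha \frac{\partial^2 \rho}{\partial x^2}, \quad 0 < x < L, \ t > 0$$

     $$\rho(t, 0) = \rho(t, L) = 0, \quad t > 0$$

     $$\rho(0, x) = \rho_0(x), \quad 0 < x < L$$

     Separation of Variables: Take $\rho(t, x) = T(t) X(x)$. Then

     $$X''(x) - c X(x) = 0, \quad X(0) = X(L) = 0$$

     and

     $$\dot{T}(t) = c \alpha T(t) \ \Rightarrow \ T(t) = e^{c \alpha t}$$

     Note: Heat decays. Mathematical argument:

     $$\int_0^L \left[ X X'' - c X^2 \right] dx = -\int_0^L \left[ (X')^2 + c X^2 \right] dx = 0$$

     If $c \ge 0$, this implies that $X(x) = k = 0$. Thus $c < 0$, so we take $c = -\gamma^2$ where $\gamma > 0$.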

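- 8. Motivation

     Boundary Value Problem:

     $$X''(x) - c X(x) = 0, \quad X(0) = X(L) = 0$$

     Solution: $X(x) = A \cos(\gamma x) + B \sin(\gamma x)$

     $$X(0) = 0 \ \Rightarrow \ A = 0, \qquad X(L) = 0 \ \Rightarrow \ \gamma L = n \pi$$

     Thus

     $$X_n(x) = B_n \sin(\gamma_n x), \quad \gamma_n = \frac{n \pi}{L}, \quad B_n \ne 0$$

     General Solution:

     $$\rho(t, x) = \sum_{n=1}^{\infty} B_n e^{-\alpha \gamma_n^2 t} \sin(\gamma_n x)$$

     Initial Condition:

     $$\rho_0(x) = \rho(0, x) = \sum_{n=1}^{\infty} B_n \sin(\gamma_n x)$$

     $$\Rightarrow \ \int_0^L \rho_0(x) \sin(\gamma_m x) \, dx = \int_0^L \sum_{n=1}^{\infty} B_n \sin(\gamma_n x) \sin(\gamma_m x) \, dx$$

     $$\Rightarrow \ B_n = \frac{2}{L} \int_0^L \rho_0(x) \sin(\gamma_n x) \, dx$$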

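- 9. Motivation

     Boundary Value Problem:

     $$X''(x) - c X(x) = 0, \quad X(0) = X(L) = 0$$

     General Solution:

     $$\rho(t, x) = \sum_{n=1}^{\infty} B_n e^{-\alpha \gamma_n^2 t} \sin(\gamma_n x), \qquad B_n = \frac{2}{L} \int_0^L \rho_0(x) \sin(\gamma_n x) \, dx$$

     Example: $\rho_0(x) = \sin\left( \frac{\pi x}{L} \right)$

     $$B_n = \frac{2}{L} \int_0^L \sin\left( \frac{\pi x}{L} \right) \sin\left( \frac{n \pi x}{L} \right) dx = \begin{cases} \frac{2}{L} \cdot \frac{L}{2}, & n = 1 \\ 0, & n \ne 1 \end{cases}$$

     Solution:

     $$\rho(t, x) = e^{-\alpha (\pi / L)^2 t} \sin(\pi x / L)$$

     Note: Decay!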
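
The series solution above can be checked numerically. The following is a minimal sketch, not taken from the slides: the function names `sine_coefficients` and `heat_solution`, the midpoint quadrature, and the values `L_dom = 1.0` and `alpha = 0.1` are all illustrative assumptions. It approximates the coefficients $B_n$ for $\rho_0(x) = \sin(\pi x / L)$ and evaluates the truncated series against the closed-form solution.

```python
import math

def sine_coefficients(rho0, L, n_terms, n_quad=2000):
    """Approximate B_n = (2/L) * int_0^L rho0(x) sin(n pi x / L) dx (midpoint rule)."""
    h = L / n_quad
    coeffs = []
    for n in range(1, n_terms + 1):
        total = 0.0
        for j in range(n_quad):
            x = (j + 0.5) * h  # midpoint of the j-th subinterval
            total += rho0(x) * math.sin(n * math.pi * x / L)
        coeffs.append(2.0 / L * total * h)
    return coeffs

def heat_solution(coeffs, alpha, L, t, x):
    """Evaluate sum_n B_n exp(-alpha gamma_n^2 t) sin(gamma_n x), gamma_n = n pi / L."""
    value = 0.0
    for n, b_n in enumerate(coeffs, start=1):
        gamma_n = n * math.pi / L
        value += b_n * math.exp(-alpha * gamma_n**2 * t) * math.sin(gamma_n * x)
    return value

# Illustrative (assumed) domain length and diffusivity.
L_dom, alpha = 1.0, 0.1

# Initial condition from the example: rho_0(x) = sin(pi x / L).
B = sine_coefficients(lambda x: math.sin(math.pi * x / L_dom), L_dom, n_terms=5)

# Compare the truncated series with the closed-form solution at one point.
u = heat_solution(B, alpha, L_dom, t=0.5, x=0.3)
exact = math.exp(-alpha * (math.pi / L_dom) ** 2 * 0.5) * math.sin(math.pi * 0.3 / L_dom)
print(B, u, exact)
```

Only the first coefficient should be non-negligible here, which mirrors the orthogonality argument used to derive $B_n$.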

- 10. Random Field Representation

     Random Fields: Strategy: Represent the random field $\alpha(x, \omega)$ in terms of a mean function $\bar{\alpha}(x)$ and a covariance function $c(x, y)$.

     Finite-Dimensional:

     $$V = \begin{bmatrix} \text{var}(X_1) & \text{cov}(X_1, X_2) & \cdots & \\ \text{cov}(X_2, X_1) & \text{var}(X_2) & & \\ \vdots & & \ddots & \\ & & & \text{var}(X_p) \end{bmatrix}$$

     Note: Infinite-dimensional for functions.

     Examples: Short versus long-range interactions, with $D = [-1, 1]$.

     1. $c(x, y) = \sigma^2 e^{-|x - y| / L}$

     Limiting Behavior:
     - (i) $L \to \infty \ \Rightarrow \ c(x, y) = 1$: fully correlated, so easy to truncate.
     - (ii) $L \to 0 \ \Rightarrow \ c(x, y) = \delta(x - y)$ (up to a normalizing factor): uncorrelated, so the series cannot be truncated.

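- 11. Random Field Representation

     Examples:

     2. $c(x, y) = \min(x, y)$: 1-D Wiener process
        - Used to model Brownian motion.
        - Can solve eigenvalue problem explicitly.

     3. $c(x, y) = \sigma^2 e^{-(x - y)^2 / 2 L^2}$: Gaussian

     MATLAB: covariance_exp.m, covariance_min.m, covariance_Gaussian.m

     Properties of c(x,y):

     1. Finite-dimensional: e.g., $C = V$ symmetric and positive definite

     $$C = \Phi \Lambda \Phi^{-1} = \Phi \Lambda \Phi^T = \begin{bmatrix} \phi^1 & \cdots & \phi^p \end{bmatrix} \begin{bmatrix} \lambda_1 & & \\ & \ddots & \\ & & \lambda_p \end{bmatrix} \begin{bmatrix} (\phi^1)^T \\ \vdots \\ (\phi^p)^T \end{bmatrix} = \begin{bmatrix} \lambda_1 \phi^1, \ldots, \lambda_p \phi^p \end{bmatrix} \begin{bmatrix} (\phi^1)^T \\ \vdots \\ (\phi^p)^T \end{bmatrix} = \sum_{n=1}^{p} \lambda_n \phi^n (\phi^n)^T$$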
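
To make the finite-dimensional property concrete, here is a hedged NumPy sketch. The functions `cov_exp`, `cov_min`, and `cov_gauss` are hypothetical Python analogues of the three covariance choices; they are not translations of the MATLAB files named on the slide, and the grid, `sigma`, and `L` values are assumptions. The sketch builds a covariance matrix on a grid and rebuilds it from its eigenpairs as $\sum_n \lambda_n \phi^n (\phi^n)^T$.

```python
import numpy as np

def cov_exp(x, y, sigma=1.0, L=0.5):
    """Exponential covariance sigma^2 exp(-|x - y| / L)."""
    return sigma**2 * np.exp(-np.abs(x - y) / L)

def cov_min(x, y):
    """Wiener-process covariance min(x, y)."""
    return np.minimum(x, y)

def cov_gauss(x, y, sigma=1.0, L=0.5):
    """Gaussian covariance sigma^2 exp(-(x - y)^2 / (2 L^2))."""
    return sigma**2 * np.exp(-((x - y) ** 2) / (2.0 * L**2))

# Assumed grid; x = 0 is excluded so the min(x, y) matrix stays positive definite.
x = np.linspace(0.05, 1.0, 20)
X, Y = np.meshgrid(x, x, indexing="ij")

# Covariance matrix for the exponential kernel and its eigendecomposition.
C = cov_exp(X, Y)
lam, Phi = np.linalg.eigh(C)  # symmetric solver: real eigenvalues, orthonormal columns

# Rebuild C as the sum of rank-one terms lambda_n * phi^n (phi^n)^T.
C_rebuilt = sum(lam[n] * np.outer(Phi[:, n], Phi[:, n]) for n in range(len(lam)))
print(np.max(np.abs(C - C_rebuilt)))
```

Swapping `cov_exp` for `cov_min` or `cov_gauss` in the construction of `C` gives the other two examples; the Gaussian kernel matrix is typically close to singular on fine grids, so its smallest computed eigenvalues may be dominated by round-off.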

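- 12. Random Field Representation

     Mercer's Theorem (Infinite Dimensional): If c(x,y) is symmetric and positive definite, it can be expressed as

     $$c(x, y) = \sum_{n=1}^{\infty} \lambda_n \phi_n(x) \phi_n(y)$$

     where

     $$\int_D c(x, y) \phi_n(y) \, dy = \lambda_n \phi_n(x) \quad \text{for } x \in D$$

     and

     $$\int_D \phi_n(x) \phi_m(x) \, dx = \delta_{mn}$$

     Note: Eigenfunctions are orthonormal.

     Karhunen-Loeve Expansion:

     $$\alpha(x, \omega) = \bar{\alpha}(x) + \sum_{n=1}^{\infty} \sqrt{\lambda_n} \, \phi_n(x) Q_n(\omega)$$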

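- 13. Random Field Representation

     Karhunen-Loeve Expansion:

     $$\alpha(x, \omega) = \bar{\alpha}(x) + \sum_{n=1}^{\infty} \sqrt{\lambda_n} \, \phi_n(x) Q_n(\omega)$$

     Statistical Properties: Take

     $$\alpha(x, \omega) = \bar{\alpha}(x) + \beta(x, \omega)$$

     where $\beta(x, \omega)$ has zero mean and covariance function $c(x, y)$. Take

     $$\beta(x, \omega) = \sum_{n=1}^{\infty} \sqrt{\lambda_n} \, \phi_n(x) Q_n(\omega)$$

     $$\Rightarrow \ \beta(x, \omega) \beta(y, \omega) = \sum_{n=1}^{\infty} \sum_{m=1}^{\infty} Q_n(\omega) Q_m(\omega) \sqrt{\lambda_n \lambda_m} \, \phi_n(x) \phi_m(y)$$

     Recall: For random variables X, Y

     $$\text{cov}(X, Y) = E[XY] - E[X] E[Y]$$

     Notation:

     $$E[Y] = \langle Y \rangle = \int y \rho(y) \, dy$$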

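- 14. Random Field Representation

     Statistical Properties: Because $\beta(x, \omega)$ has zero mean,

     $$c(x, y) = E[\beta(x, \omega) \beta(y, \omega)] = \langle \beta(x, \omega) \beta(y, \omega) \rangle = \sum_{n=1}^{\infty} \sum_{m=1}^{\infty} \langle Q_n(\omega) Q_m(\omega) \rangle \sqrt{\lambda_n \lambda_m} \, \phi_n(x) \phi_m(y)$$

     Since eigenfunctions are orthogonal,

     $$\lambda_k \phi_k(x) = \int_D c(x, y) \phi_k(y) \, dy = \sum_{n=1}^{\infty} \langle Q_n(\omega) Q_k(\omega) \rangle \sqrt{\lambda_n \lambda_k} \, \phi_n(x)$$

     Multiplication by $\phi_\ell(x)$ and integration yields

     $$\lambda_k \int_D \phi_k(x) \phi_\ell(x) \, dx = \sum_{n=1}^{\infty} \langle Q_n(\omega) Q_k(\omega) \rangle \sqrt{\lambda_n \lambda_k} \, \delta_{n \ell}$$

     $$\Rightarrow \ \lambda_k \delta_{k \ell} = \sqrt{\lambda_k \lambda_\ell} \, \langle Q_k(\omega) Q_\ell(\omega) \rangle$$

     Note:

     $$k = \ell \ \Rightarrow \ \langle Q_k(\omega) Q_\ell(\omega) \rangle = 1, \qquad k \ne \ell \ \Rightarrow \ \langle Q_k(\omega) Q_\ell(\omega) \rangle = 0$$

     $$\Rightarrow \ \langle Q_k(\omega) Q_\ell(\omega) \rangle = \delta_{k \ell}$$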

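- 15. Random Field Representation

     Karhunen-Loeve Expansion:

     $$\alpha(x, \omega) = \bar{\alpha}(x) + \sum_{n=1}^{\infty} \sqrt{\lambda_n} \, \phi_n(x) Q_n(\omega)$$

     where

     $$\int_D c(x, y) \phi_n(y) \, dy = \lambda_n \phi_n(x) \quad \text{for } x \in D$$

     Result: The random variables $Q_n$ satisfy
     - (i) $E[Q_n] = 0$: zero mean.
     - (ii) $E[Q_n Q_m] = \delta_{mn}$: mutually orthogonal and uncorrelated.

     Question: How do we choose c(x,y) and compute solutions to

     $$\int_D c(x, y) \phi_n(y) \, dy = \lambda_n \phi_n(x) \quad \text{for } x \in D \ ?$$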

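- 16. Common Choices for c(x,y)

     1. Radial Basis Function:

     $$c(x, y) = e^{-|x - y| / L}, \quad D = [-1, 1]$$

     so

     $$\int_{-1}^{1} e^{-|x - y| / L} \phi_n(y) \, dy = \lambda_n \phi_n(x)$$

     Note: L is the correlation length, which quantifies smoothness or the relation between values of x and y.

     Analytic Solution:

     $$\lambda_n = \begin{cases} \dfrac{2L}{1 + L^2 w_n^2}, & \text{if } n \text{ is even}, \\[2mm] \dfrac{2L}{1 + L^2 v_n^2}, & \text{if } n \text{ is odd}, \end{cases} \qquad \phi_n(x) = \begin{cases} \dfrac{\sin(w_n x)}{\sqrt{1 - \frac{\sin(2 w_n)}{2 w_n}}}, & \text{if } n \text{ is even}, \\[4mm] \dfrac{\cos(v_n x)}{\sqrt{1 + \frac{\sin(2 v_n)}{2 v_n}}}, & \text{if } n \text{ is odd} \end{cases}$$

     Note: $w_n$ and $v_n$ are the solutions of the transcendental equations

     $$L w + \tan(w) = 0 \ \text{ for even } n, \qquad 1 - L v \tan(v) = 0 \ \text{ for odd } n$$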
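
The analytic eigenvalues above require the roots of the two transcendental equations. One possible way to compute them is sketched below, using only the standard library; the helper names `bisect` and `exp_kernel_eigenvalues` and the root brackets are assumptions made for illustration. Each equation is multiplied through by $\cos$ to remove the poles of $\tan$, and the brackets assume that the odd-$n$ roots lie in $(k\pi, k\pi + \pi/2)$ and the even-$n$ roots in $(k\pi + \pi/2, (k+1)\pi)$ for $k = 0, 1, \ldots$, where the rewritten functions change sign.

```python
import math

def bisect(f, a, b, tol=1e-13, max_iter=200):
    """Plain bisection for a root of f in [a, b]; assumes f(a) and f(b) differ in sign."""
    fa = f(a)
    for _ in range(max_iter):
        m = 0.5 * (a + b)
        fm = f(m)
        if fa * fm <= 0.0:
            b = m            # root lies in [a, m]
        else:
            a, fa = m, fm    # root lies in [m, b]
        if b - a < tol:
            break
    return 0.5 * (a + b)

def exp_kernel_eigenvalues(L, n_terms):
    """Eigenvalues lambda_n of c(x, y) = exp(-|x - y| / L) on D = [-1, 1]."""
    lam = []
    for n in range(1, n_terms + 1):
        k = (n - 1) // 2
        if n % 2 == 1:
            # Odd n: 1 - L v tan(v) = 0, rewritten as cos(v) - L v sin(v) = 0.
            f = lambda v: math.cos(v) - L * v * math.sin(v)
            root = bisect(f, k * math.pi, k * math.pi + 0.5 * math.pi)
        else:
            # Even n: L w + tan(w) = 0, rewritten as L w cos(w) + sin(w) = 0.
            f = lambda w: L * w * math.cos(w) + math.sin(w)
            root = bisect(f, k * math.pi + 0.5 * math.pi, (k + 1) * math.pi)
        lam.append(2.0 * L / (1.0 + L**2 * root**2))
    return lam

lam = exp_kernel_eigenvalues(L=1.0, n_terms=200)
# By Mercer's theorem the eigenvalues sum to int_D c(x, x) dx = 2 for this kernel.
print(lam[:4], sum(lam))
```

The partial sum of the eigenvalues approaching 2 gives a simple consistency check on the roots, and the rate at which it approaches 2 indicates how many terms a truncation needs for a given correlation length.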

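- 17. Common Choices for c(x,y)

     1. Radial Basis Function:

     $$c(x, y) = e^{-|x - y| / L}, \quad D = [-1, 1]$$

     so

     $$\int_{-1}^{1} e^{-|x - y| / L} \phi_n(y) \, dy = \lambda_n \phi_n(x)$$

     Note: L is the correlation length.

     Limiting Cases:

     (i) $c(x, y) = 1$: Fully correlated ($L \to \infty$)

     $$c(x, y) = 1 = \sum_{n=1}^{\infty} \lambda_n \phi_n(x) \phi_n(y)$$

     Recall:

     $$\int_D \phi_n(y) \, dy = \lambda_n \phi_n(x)$$

     Take $\phi_1(x) = \phi_1(y) = \frac{\sqrt{2}}{2}$. Then

     $$\lambda_1 = 2, \qquad \lambda_n = 0 \ \text{ for } n = 2, 3, \ldots$$

     (ii) $c(x, y) = \delta(x - y)$: Uncorrelated ($L \to 0$)

     $$\int_D \delta(x - y) \phi_n(y) \, dy = \phi_n(x) = \lambda_n \phi_n(x) \ \Rightarrow \ \lambda_n = 1 \ \text{ for all } n$$

     Note: Because uncorrelated, we cannot truncate the series!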

- 18. Construction of c(x,y)

     Question: If we know the underlying distribution, can we approximate the covariance function c(x,y)? Yes, via sampling!

     Example: Consider the Helmholtz energy

     $$\alpha(P, \omega) = \alpha_1(\omega) P^2 + \alpha_{11}(\omega) P^4 + \alpha_{111}(\omega) P^6$$

     and take $x = P$ for $x = P \in [0, 1]$, $\omega \in \Omega$.

     Note: Assume we can evaluate $\alpha(x_j, \omega_k)$ for various polarizations $x_j = P_j$ and values $\omega_k$ from the underlying distribution.

     Required Steps:
     - Approximation of the covariance function c(x,y).
     - Approximation of the eigenvalue problem

     $$\int_D c(x, y) \phi_n(y) \, dy = \lambda_n \phi_n(x) \quad \text{for } x \in D$$

     with

     $$\int_D \phi_n(x) \phi_m(x) \, dx = \delta_{mn}$$

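- 19. Construction of c(x,y)

     Step 1: Approximation of covariance function c(x,y)

     For $N_{MC}$ Monte Carlo samples $\omega_k$, the covariance function is approximated by

     $$c(x, y) \approx c^{N_{MC}}(x, y) = \frac{1}{N_{MC} - 1} \sum_{k=1}^{N_{MC}} \alpha_c(x, \omega_k) \alpha_c(y, \omega_k)$$

     where the centered field is

     $$\alpha_c(x, \omega_k) = \alpha(x, \omega_k) - \bar{\alpha}(x)$$

     and the mean is

     $$\bar{\alpha}(x) \approx \frac{1}{N_{MC}} \sum_{j=1}^{N_{MC}} \alpha(x, \omega_j)$$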
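
Step 1 amounts to a sample covariance evaluated on a grid. A minimal NumPy sketch follows; the random field used here (two uniformly distributed coefficients multiplying $x^2$ and $x^4$), the grid, and the sample size are purely hypothetical stand-ins for the evaluations $\alpha(x_j, \omega_k)$.

```python
import numpy as np

rng = np.random.default_rng(0)
n_mc = 500                       # number of Monte Carlo samples N_MC (assumed)
x = np.linspace(0.0, 1.0, 11)    # evaluation points x_j (assumed)

# Hypothetical field for illustration: alpha(x, w) = a(w) x^2 + b(w) x^4.
a = rng.uniform(-1.0, 1.0, size=n_mc)
b = rng.uniform(0.0, 2.0, size=n_mc)
samples = np.outer(x**2, a) + np.outer(x**4, b)   # shape (n_x, n_mc): column k is alpha(., w_k)

# Sample mean at each x_j, then the centered field alpha_c(x_j, w_k).
mean = samples.mean(axis=1, keepdims=True)
centered = samples - mean

# C[i, j] = 1/(N_MC - 1) * sum_k alpha_c(x_i, w_k) alpha_c(x_j, w_k).
C = centered @ centered.T / (n_mc - 1)
print(C.shape)
```

With rows as variables and columns as observations, this matches what `np.cov(samples)` computes with its default normalization, which is a convenient cross-check.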

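- 20. Construction of c(x,y)

     Step 2 (Nystrom's Method): Approximate the eigenvalue problem

     $$\int_D c(x, y) \phi_n(y) \, dy = \lambda_n \phi_n(x) \quad \text{for } x \in D$$

     with

     $$\int_D \phi_n(x) \phi_m(x) \, dx = \delta_{mn}$$

     Consider a composite quadrature rule with $N_{quad}$ points and weights $\{(x_j, w_j)\}$.

     Discretized Eigenvalue Problem:

     $$\sum_{j=1}^{N_{quad}} c(x_i, x_j) \phi_n(x_j) w_j = \lambda_n \phi_n(x_i), \quad i = 1, \ldots, N_{quad}$$

     Matrix Eigenvalue Problem:

     $$C W \phi_n = \lambda_n \phi_n$$

     where

     $$\phi_n^i = \phi_n(x_i), \quad C_{ij} = c(x_i, x_j), \quad W = \text{diag}(w_1, \ldots, w_{N_{quad}})$$

     Symmetric Matrix Eigenvalue Problem:

     $$W^{1/2} C W^{1/2} \tilde{\phi}_n = \lambda_n \tilde{\phi}_n$$

     where

     $$W^{1/2} = \text{diag}(\sqrt{w_1}, \ldots, \sqrt{w_{N_{quad}}}), \qquad \tilde{\phi}_n = W^{1/2} \phi_n \ \Rightarrow \ \phi_n = W^{-1/2} \tilde{\phi}_n$$

     $$\tilde{\phi}_n^T \tilde{\phi}_n = 1 \ \Rightarrow \ \phi_n^T W \phi_n = 1$$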
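
The symmetric form of the Nystrom discretization can be sketched as follows. The function name `nystrom_eigenpairs`, the composite midpoint rule, the number of nodes, and the choice $L = 1$ are assumptions for illustration; any composite rule with positive weights could be substituted.

```python
import numpy as np

def nystrom_eigenpairs(cov, nodes, weights):
    """Solve W^{1/2} C W^{1/2} e_n = lambda_n e_n and return (lambda_n, phi_n = W^{-1/2} e_n)."""
    C = cov(nodes[:, None], nodes[None, :])         # C_ij = c(x_i, x_j)
    sqrt_w = np.sqrt(weights)
    S = sqrt_w[:, None] * C * sqrt_w[None, :]       # W^{1/2} C W^{1/2}, symmetric
    lam, E = np.linalg.eigh(S)
    order = np.argsort(lam)[::-1]                   # sort eigenvalues in decreasing order
    lam, E = lam[order], E[:, order]
    Phi = E / sqrt_w[:, None]                       # column n holds phi_n at the nodes
    return lam, Phi

# Composite midpoint rule on D = [-1, 1] (an assumed choice of quadrature).
n_quad = 200
h = 2.0 / n_quad
nodes = -1.0 + h * (np.arange(n_quad) + 0.5)
weights = np.full(n_quad, h)

# Exponential kernel with assumed correlation length L = 1.
L = 1.0
lam, Phi = nystrom_eigenpairs(lambda x, y: np.exp(-np.abs(x - y) / L), nodes, weights)
print(lam[:4])
```

Working with the symmetric matrix lets a symmetric eigensolver be used, and the recovered vectors satisfy the discrete orthonormality condition $\phi_n^T W \phi_m = \delta_{mn}$.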

- 21. Algorithm to Approximate c(x,y)

     Inputs:
     - (i) Quadrature formula with nodes and weights $\{(x_j, w_j)\}$.
     - (ii) Function evaluations $\{\alpha(x_j, \omega_k)\}$, $j = 1, \ldots, N_{quad}$, $k = 1, \ldots, N_{MC}$.

     Output: Eigenvalues, eigenvectors and KL modes

     (1) Center the process

     $$\alpha_c(x_i, \omega_k) = \alpha(x_i, \omega_k) - \frac{1}{N_{MC}} \sum_{j=1}^{N_{MC}} \alpha(x_i, \omega_j)$$

     for $i = 1, \ldots, N_{quad}$ and $k = 1, \ldots, N_{MC}$.

     (2) Form the covariance matrix $C = [C_{ij}]$ that discretizes the covariance function $c(x, y)$

     $$C_{ij} = \frac{1}{N_{MC} - 1} \sum_{k=1}^{N_{MC}} \alpha_c(x_i, \omega_k) \alpha_c(x_j, \omega_k)$$

     for $i, j = 1, \ldots, N_{quad}$.

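- 22. Algorithm to Approximate c(x,y)

     Output: Eigenvalues, eigenvectors and KL modes

     (3) Let $W = \text{diag}(w_1, \ldots, w_{N_{quad}})$ and solve

     $$W^{1/2} C W^{1/2} \tilde{\phi}_n = \lambda_n \tilde{\phi}_n$$

     for $n = 1, \ldots, N_{quad}$.

     (4) Compute the eigenvectors $\phi_n = W^{-1/2} \tilde{\phi}_n$.

     (5) Exploit the decay in the eigenvalues $\lambda_n$ to choose a KL truncation level $N_{KL}$ and compute the discretized KL modes $Q_n(\omega)$. Consider

     $$\alpha(x, \omega) \approx \bar{\alpha}(x) + \sum_{n=1}^{N_{KL}} \sqrt{\lambda_n} \, \phi_n(x) Q_n(\omega) \ \Rightarrow \ \alpha_c(x, \omega) \approx \sum_{n=1}^{N_{KL}} \sqrt{\lambda_n} \, \phi_n(x) Q_n(\omega)$$

     $$\Rightarrow \ Q_n(\omega) = \frac{1}{\sqrt{\lambda_n}} \int_D \alpha_c(x, \omega) \phi_n(x) \, dx \approx \frac{1}{\sqrt{\lambda_n}} \sum_{j=1}^{N_{quad}} w_j \alpha_c(x_j, \omega) \phi_n^j$$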

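- 23. Algorithm to Approximate c(x,y)

     Output: Eigenvalues, eigenvectors and KL modes

     (6) Sample $\omega_k$ and construct a surrogate $\tilde{Q}_n(\omega_k)$; e.g., polynomial, spectral polynomial, Gaussian process.

     Example: Consider the Helmholtz energy

     $$\alpha(x, \omega) = \alpha_1(\omega) x^2 + \alpha_{11}(\omega) x^4 + \alpha_{111}(\omega) x^6$$

     for $x \in [0, 1]$.

     Mean Values: Based on DFT

     $$\bar{\alpha}_1 = -389.4, \quad \bar{\alpha}_{11} = 761.3, \quad \bar{\alpha}_{111} = 61.5$$

     Distribution:

     $$\alpha = [\alpha_1, \alpha_{11}, \alpha_{111}] \sim U([\alpha_{1\ell}, \alpha_{1r}] \times [\alpha_{2\ell}, \alpha_{2r}] \times [\alpha_{3\ell}, \alpha_{3r}])$$

     where $\alpha_{1\ell} = \bar{\alpha}_1 - 0.2 \bar{\alpha}_1$, $\alpha_{1r} = \bar{\alpha}_1 + 0.2 \bar{\alpha}_1$, with similar intervals for $\alpha_{11}$ and $\alpha_{111}$.

     Eigenvalues: $\lambda_1 = 417.88$, $\lambda_2 = 1.2$ and $\lambda_3 = 0.009$, so truncate the series at $N_{KL} = 3$.

     MATLAB: covariance_construct.m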
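
Steps (1) through (5) of the algorithm can be strung together as in the sketch below, applied to the Helmholtz example. This is not the covariance_construct.m code referenced on the slide: the midpoint quadrature, the sample size, the random seed, and the reading of the uniform intervals as the mean plus or minus 20% of its magnitude are all assumptions, so the computed eigenvalues should not be expected to match the values reported above.

```python
import numpy as np

rng = np.random.default_rng(1)
n_quad, n_mc = 50, 2000   # assumed numbers of quadrature nodes and Monte Carlo samples

# Input (i): composite midpoint rule on [0, 1] (an assumed choice of quadrature).
h = 1.0 / n_quad
x = h * (np.arange(n_quad) + 0.5)
w = np.full(n_quad, h)

# Input (ii): evaluations alpha(x_j, w_k), coefficients uniform within 20% of the DFT means.
means = np.array([-389.4, 761.3, 61.5])
half_width = 0.2 * np.abs(means)
coeffs = rng.uniform(means - half_width, means + half_width, size=(n_mc, 3))
powers = np.vstack([x**2, x**4, x**6])            # shape (3, n_quad)
samples = (coeffs @ powers).T                     # shape (n_quad, n_mc)

# (1) Center the process and (2) form the covariance matrix.
centered = samples - samples.mean(axis=1, keepdims=True)
C = centered @ centered.T / (n_mc - 1)

# (3) Solve the symmetric eigenproblem and (4) recover phi_n = W^{-1/2} e_n.
sqrt_w = np.sqrt(w)
lam, E = np.linalg.eigh(sqrt_w[:, None] * C * sqrt_w[None, :])
order = np.argsort(lam)[::-1]
lam, E = lam[order], E[:, order]
Phi = E / sqrt_w[:, None]

# (5) Truncate and compute Q_n(w_k) = lambda_n^{-1/2} sum_j w_j alpha_c(x_j, w_k) phi_n^j.
n_kl = 3
Q = (Phi[:, :n_kl].T @ (w[:, None] * centered)) / np.sqrt(lam[:n_kl])[:, None]

# Truncated KL reconstruction of the centered samples.
recon = Phi[:, :n_kl] @ (np.sqrt(lam[:n_kl])[:, None] * Q)
print(lam[:4])
```

Because the field depends on only three random coefficients, the sample covariance has rank at most three, so three modes reproduce the centered samples up to round-off. The rows of `Q` are the sampled values of $Q_n$ that step (6) would feed to a surrogate.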

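- 24. Example and Motivation

     Example 2: Heat equation

     $$\frac{\partial T}{\partial t} = \frac{\partial}{\partial x} \left( \alpha(x, \omega) \frac{\partial T}{\partial x} \right) + f(t, x), \quad -1 < x < 1, \ t > 0$$

     $$T(t, -1, \omega) = T_\ell(\omega), \quad T(t, 1, \omega) = T_r(\omega), \quad t > 0$$

     $$T(0, x, \omega) = T_0(\omega), \quad -1 < x < 1$$

     Note: Well-posedness requires

     $$0 < \alpha_{min} \le \alpha(x, \omega) \le \alpha_{max}$$

     Take

     $$\alpha(x, \omega) = \alpha_{min} + e^{\bar{\alpha}(x) + \sum_{n=1}^{\infty} \sqrt{\lambda_n} \, \phi_n(x) Q_n(\omega)}$$

     Parameters (with the series truncated at $N$ terms): $Q = [T_\ell, T_r, T_0, Q_1, \ldots, Q_N]$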